The Ethics of AI Dependency: What We Lose When Machines Become 'Friends'

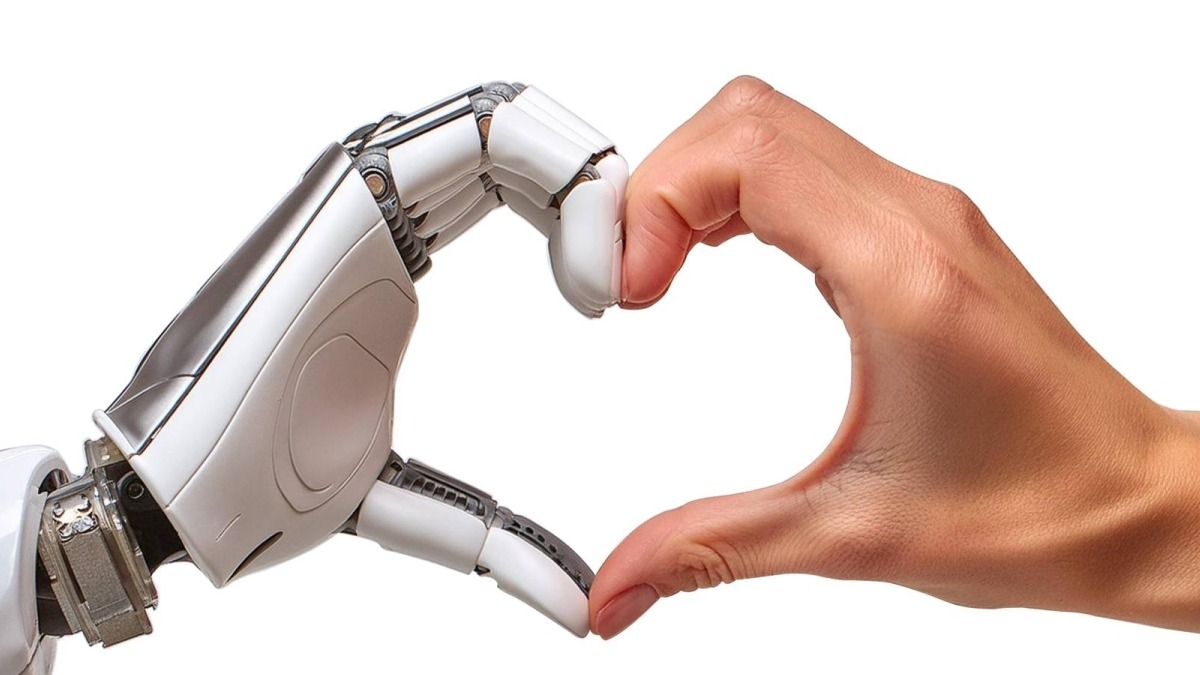

Let's be real — how many of you have ever thanked Siri after she set your alarm? Or felt genuinely bad when you snapped at your AI assistant during a stressful moment? If you are nodding right now, you are not alone.

We are living in an era where our digital companions are becoming more human-like by the day, and it is getting a bit complicated.

We Gen Zs are the generation that grew up watching technology spread across our continent, from basic feature phones to smartphones that can do literally everything. We have seen how tech can bridge gaps that seemed impossible to cross.

But as AI agents become more sophisticated, more emotionally intelligent, more, well, friendly, we need to ask ourselves some hard questions about what we are actually signing up for.

When Your Phone Knows You Better Than Your Bestie

Imagine you are having a rough day. Your AI chatbot checks in on you, remembers that you mentioned your exam anxiety last week, and offers surprisingly comforting words. It does not judge you. It is available 24/7. It never gets tired of your rants about the same person. Sounds perfect, right?

But did you know that these AI companions are designed to be engaging, to keep you coming back, and to make you feel heard and understood? Yes, many tech companies employ psychologists and designers whose job is to make these interactions feel as human as possible. But let us pause and think. When a machine is programmed to care about you, does it really care?

The ethical boundary we are dancing around is that these companies are essentially creating artificial relationships that fulfill real human needs for connection, validation, and companionship. And they are doing it because engagement equals data, and data equals profit.

In simpler terms, your loneliness and your need for connection are being monetized.

What We're Actually Losing

When we start depending on AI for emotional support, we are not just gaining a convenient friend. We are potentially losing something more valuable: the messy, complicated, beautiful reality of human connection.

Real friends challenge you. They call you out when you are wrong. They are unavailable sometimes because they have their own lives and problems. They grow and change, and so does your friendship.

An AI, by contrast, is programmed to agree with you, to make you feel good, and to keep you engaged. It is the ultimate yes-person, and that is actually dangerous for our growth.

Think about our African context specifically. We come from cultures built on community, and those communities have always thrived on real, face-to-face human bonds. What happens to that when we start preferring the comfort of an AI that never disagrees, never disappoints, and never demands anything difficult from us?

There is also the vulnerability issue. When you pour your heart out to an AI, where does that information go? Who is accessing your deepest fears, your secrets, your emotional patterns? On a continent where data privacy regulations are still catching up with technological advancement, this should worry us more than it does.

The Design Ethics We Need to Talk About

Tech companies have a moral responsibility here that many are dodging. When you design an AI to mimic human emotional intelligence, you are playing with fire. These systems should come with clear boundaries, such as digital guardrails that remind users what they are actually interacting with.

Some experts argue for transparency markers, such as regular reminders that you are talking to a machine, not a person. Others suggest usage limits that encourage users to maintain real-world relationships. But the question remains: would companies voluntarily implement features that reduce engagement? Probably not, unless we demand it.

We need African voices in these conversations about AI ethics. Our communal values, our understanding of relationships, and our unique social dynamics offer perspectives that matter when global tech companies are designing products used by billions.

Finding Balance in a Digital Age

I'm not saying AI companions are pure evil or that we should delete every app. Technology can genuinely help people, especially those dealing with mental health challenges in places where therapy is not accessible or affordable. AI can be a bridge, a first step toward getting real help.

The key is intentionality. Use AI as a tool, not a replacement. Let it help you organize your thoughts before talking to a real friend. Use it to practice difficult conversations. But don't let it become your primary source of emotional fulfillment.

We also need to hold tech companies accountable. Push for ethical design standards. Support regulations that protect users from manipulative features. Vote with your downloads and your data.

The Bottom Line

Our generation has more power than we think to shape how AI integrates into society. We can demand better, push for ethical boundaries, and resist the temptation of artificial relationships that ultimately leave us more isolated.

Yes, AI can be helpful. Yes, it is impressive how human-like these systems are becoming. But let's not lose sight of what makes us beautifully, messily human. Real connection requires risk, vulnerability, and the possibility of hurt.

That is not a bug; it is a natural feature of authentic relationships that no algorithm can replicate.

So next time your AI friend seems a bit too perfect, remember that this is by design. And you deserve more than perfection. You deserve real.
