Sarvam AI's Bold Bet: Open-Source Models Powering Everything from Phones to Cars

Indian AI lab Sarvam has unveiled a new generation of large language models (LLMs), signaling its strategy to capture market share from larger U.S. and Chinese rivals by developing smaller, efficient, open-source AI models. The announcement, made at the India AI Impact Summit in New Delhi, aligns with the Indian government's initiative to decrease reliance on foreign AI platforms and to develop models tailored for local languages and specific use cases.

The new lineup from Sarvam includes 30-billion and 105-billion parameter models, along with a text-to-speech model, a speech-to-text model, and a vision model for document parsing. These are a significant step up from the company's previous 2-billion-parameter Sarvam 1 model, released in October 2024. The 30-billion and 105-billion parameter models use a mixture-of-experts (MoE) architecture, which activates only a fraction of a model's total parameters for any given token and so substantially reduces computing costs. The 30B model supports a 32,000-token context window, suited to real-time conversational applications, while the larger 105B model offers a 128,000-token window, enabling more complex, multi-step reasoning tasks.
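To illustrate why an MoE design lowers compute, here is a minimal sketch of top-k expert routing in plain Python. It is not Sarvam's implementation: the article does not disclose expert counts, routing scheme, or parameter splits, so every name and number below (`route_top_k`, `moe_layer`, `active_fraction`, four toy experts, `k=2`) is a hypothetical chosen only for illustration.

```python
import math


def softmax(scores):
    """Convert raw router scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_layer(x, experts, router_scores, k):
    """Run only the k selected experts and mix their outputs."""
    chosen = route_top_k(router_scores, k)
    output = sum(weight * experts[i](x) for i, weight in chosen)
    return output, [i for i, _ in chosen]


def active_fraction(num_experts, k, expert_params, shared_params):
    """Share of parameters used per token (all inputs are hypothetical)."""
    total = shared_params + num_experts * expert_params
    active = shared_params + k * expert_params
    return active / total


if __name__ == "__main__":
    # Toy experts: each simply scales its input by a different factor.
    experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
    # Hypothetical router scores for one token.
    scores = [0.1, 2.0, 0.3, 1.5]
    output, used = moe_layer(1.0, experts, scores, k=2)
    print("experts used:", used)
    print("output:", round(output, 3))
    print("active share:", active_fraction(8, 2, 10, 20))
```

In this toy setup only two of the four experts run for the token, so the remaining expert parameters cost nothing at inference time for that token. Production MoE models apply the same idea per token and per layer, with learned routers and far larger experts.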

Sarvam states that its new AI models were trained from scratch rather than fine-tuned from existing open-source systems. The 30B model was pre-trained on approximately 16 trillion tokens of text, while the 105B model was trained on trillions of tokens spanning multiple Indian languages. The models are designed to support real-time applications, such as voice-based assistants and chat systems in various Indian languages. Sarvam positions its 30B model against models such as Google’s Gemma 27B and OpenAI’s GPT-OSS-20B, and its 105B model against OpenAI’s GPT-OSS-120B and Alibaba’s Qwen-3-Next-80B.

The startup disclosed that the models were trained using computing resources provided under India’s government-backed IndiaAI Mission, with infrastructure support from data center operator Yotta and technical assistance from Nvidia. Sarvam executives expressed a commitment to a measured approach to scaling their models, prioritizing real-world applications over mere size. Sarvam plans to open-source both the 30B and 105B models, though details regarding the public release of training data or full training code were not specified. The company also intends to develop specialized AI systems, including coding-focused models and enterprise tools under

You may also like...

How to Make LinkedIn Work for You in 2026

Learn how you can actively use LinkedIn strategically in 2026 by aligning your profile with proven trends, patterns and ...

5 Reasons Why Synthetic Wigs Are Bad for You

Are synthetic wigs safe? Learn the 5 hidden risks, including scalp irritation and hair damage, plus safer alternatives f...

Fela Kuti's Son Ignites Fury with 'Racist Country' Slam Amid Vinicius Jr Controversy

A UEFA Champions League match was disrupted by a racist incident involving Vinicius Jr and Gianluca Prestianni. Seun Kut...

Jaylen Brown Alleges 'Targeted' Shutdown as Beverly Hills Issues Apology

Beverly Hills has apologized to NBA star Jaylen Brown for an inaccurate statement concerning the shutdown of his brand e...

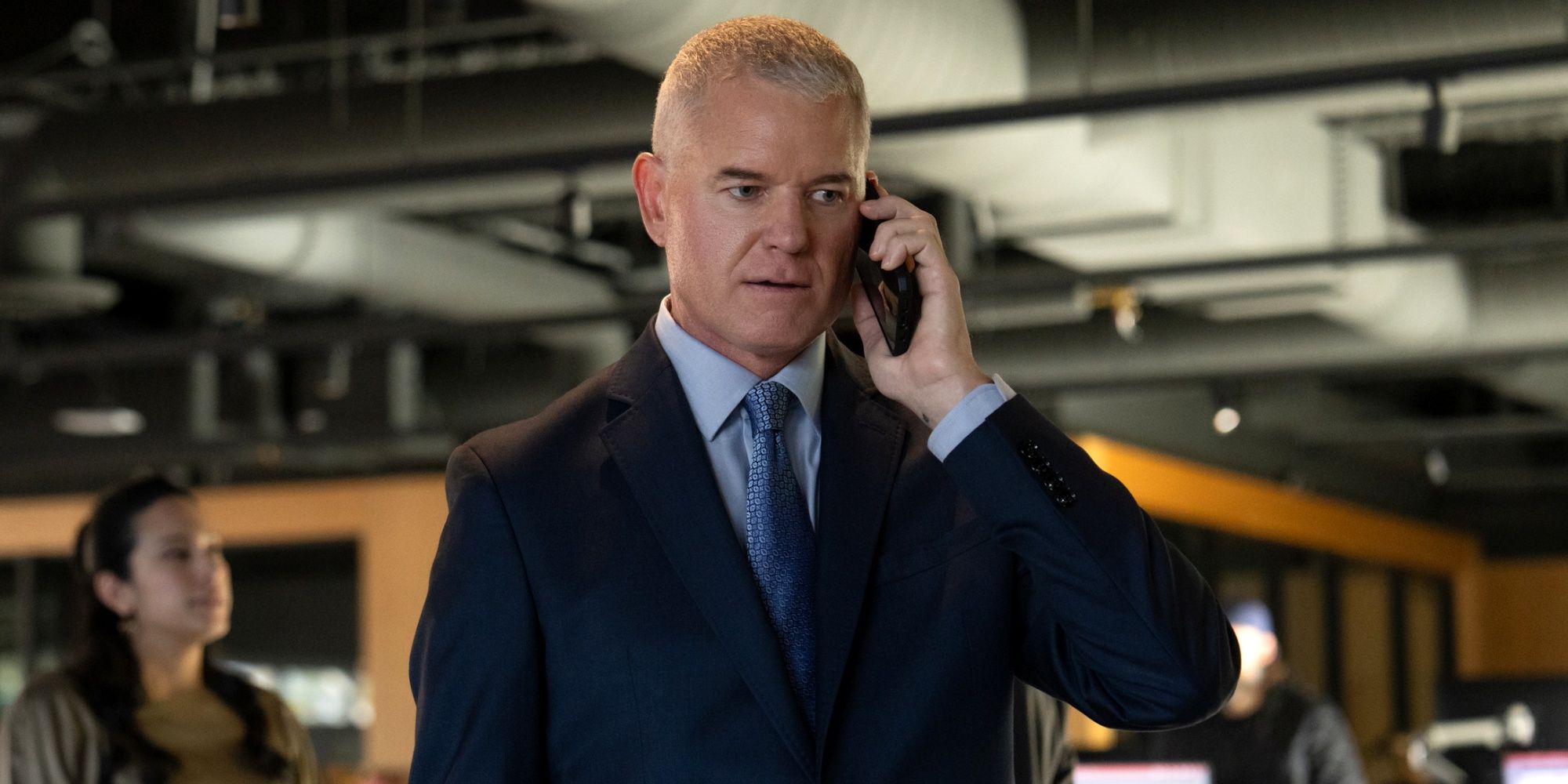

Hollywood Reacts as Tributes Pour In for Veteran Actor Eric Dane

Beloved actor Eric Dane, known for his iconic roles as "McSteamy" in "Grey's Anatomy" and the complex Cal Jacobs in "Eup...

Live54+ Drives Major Consolidation in Africa's Creative Capital Markets

Live54+ has launched to consolidate Africa's fragmented creative industries, uniting East and West African businesses in...

UK to Introduce Digital Visas for Nigerian Travelers by 2026

Nigerian nationals applying for UK visitor visas will transition to a digital eVisa system from February 25, 2026, repla...

Uganda Airlines Faces Scrutiny as Officials Warn of Tourism Impact

Ugandan government officials are raising alarms over negative publicity surrounding Uganda Airlines, warning it's causin...