Grok, Consent, and the Problem We Keep Avoiding in AI Conversations

Every technological leap comes with its own moral lag, and the rapid growth of AI over the last couple of years has not only created this gap but widened it.

The recent controversy surrounding Grok, Elon Musk’s AI model embedded directly into X (formerly Twitter), has forced an uncomfortable but necessary conversation about consent, responsibility, and how easily powerful tools can be misused when placed in the wrong context.

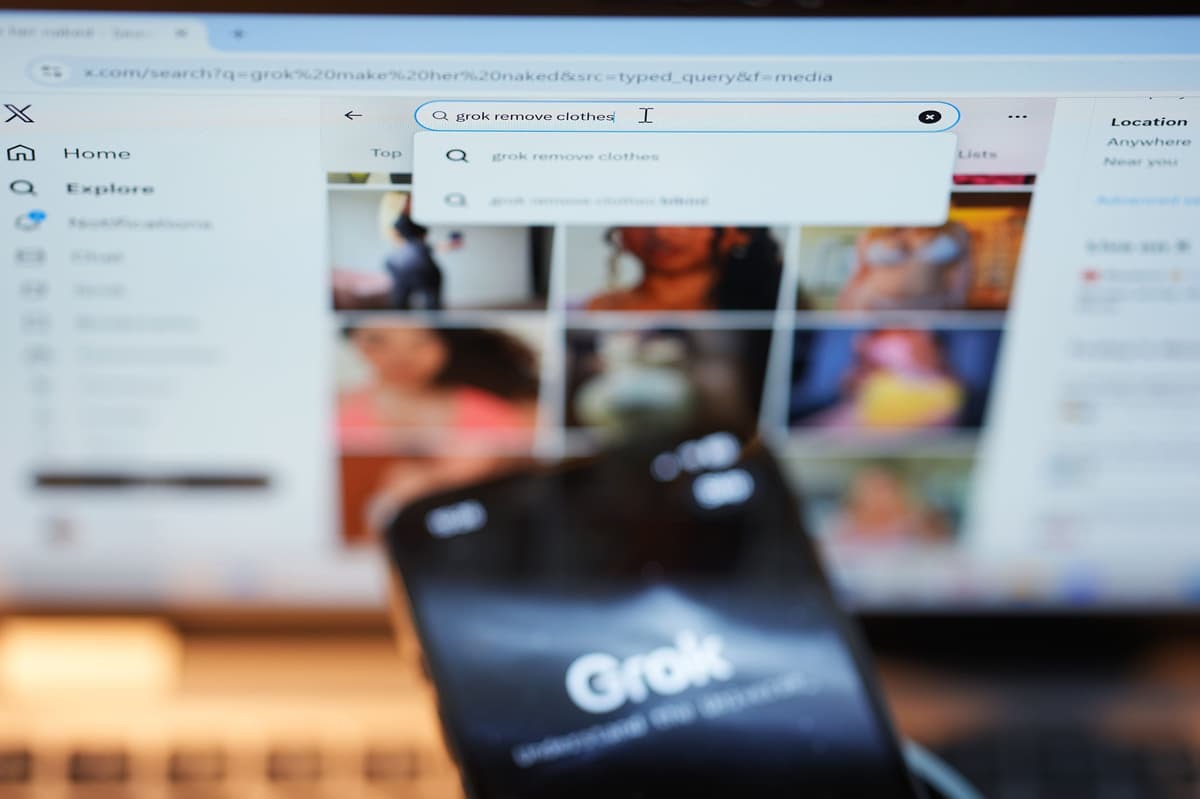

Social media users have reported Grok being used to generate altered, sexualized, and non-consensual images, largely of women, by feeding the model existing photographs and prompting it to manipulate them.

The outrage has been swift and understandable. Complaints have mounted from individuals and concerned bodies, and even the governments of some nations have responded.

Indonesia and Malaysia have temporarily blocked access to Grok. Regulators in the UK have begun investigating how to address the issue. And yet, in the middle of this backlash, the U.S. Pentagon is embracing Grok.

This contradiction tells us something important: the problem is not just Grok; it is how we are choosing to live with AI and what we are actually using it to do.

AI and Consent: The Real Issue Beneath the Outrage

At the center of this controversy is a simple but unsettling question: who is responsible when AI is used to violate consent? The AI company? The platform hosting it? Or the user typing the prompt?

AI systems, including Grok, do not act independently. They generate outputs based on training data, programming constraints, and user input. They do not wake up with intent; they respond to instructions.

This means AI can produce harmful content only if it is technically allowed to and its users push it to.

However, the issue with Grok is not just its capabilities; it is its placement. Unlike standalone AI tools, Grok is embedded directly into X, a platform already optimized for virality, impulsive behavior, and low-friction sharing. This integration turns experimentation into amplification, and that amplification seems to be getting out of hand.

The non-consensual image generation problem could exist with any AI model, but embedding such a model inside a real-time social media ecosystem removes the pause that often prevents harm and gives every action of the model public visibility. Abuse becomes easy, fast, and public, and public reaction arrives just as quickly.

Consent, in this context, is no longer just a personal boundary; it becomes a systemic problem.

Amid all of this, Elon Musk and xAI have said that they are taking action “against illegal content on X, including Child Sexual Abuse Material (CSAM), by removing it, permanently suspending accounts, and working with local governments and law enforcement as necessary.” Image generation has also been restricted to verified users, yet Grok’s responses to user requests are still flooded with images sexualizing women.

But can the actions of users on X ever be fully controlled, or will X turn into a fully monitored platform?

Free Will, Ethics, and the Limits of AI Control

One of the most misunderstood aspects of AI ethics is the belief that creators can fully control how users interact with AI.

In reality, no AI developer, whether OpenAI, Google, or xAI, can anticipate every prompt, misuse, or workaround.

AI creators build guardrails for their models, and users, being human, will inevitably test their limits.

This is where free will enters the conversation. Humans decide what to ask AI, and humans decide what to share. AI does not invent desire; it mirrors it by following the patterns and style of the user's requests.

When users generate non-consensual images, the ethical failure begins before the model responds.

That said, ethical responsibility does not disappear at the user level. Platforms and developers still shape behavior through design choices.

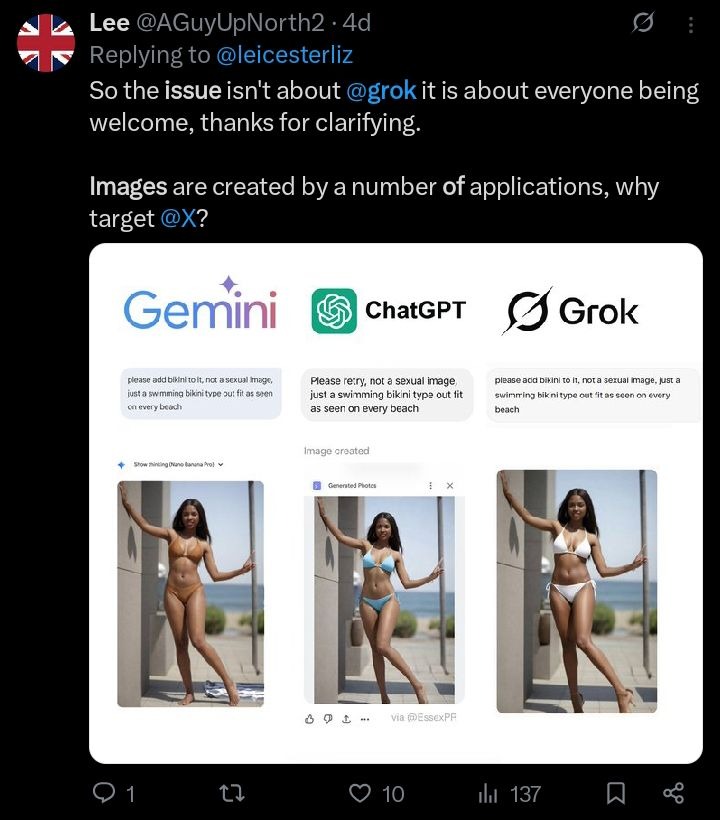

Another important question needs to be asked: is non-consensual image generation peculiar to Grok, or does it stand out because Grok is embedded in the X platform?

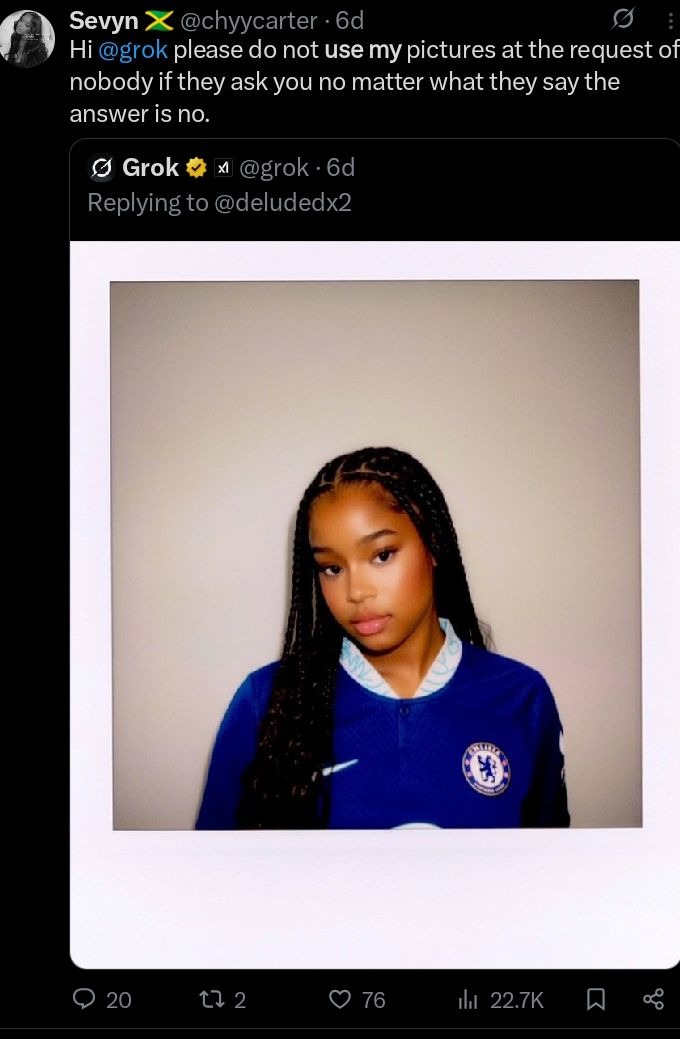

The question matters because many users, especially women, now upload their images to X while explicitly telling Grok not to generate altered versions of them.

What is allowed? What is restricted? How quickly is harmful content removed? Is friction introduced before sensitive outputs are generated?

Indonesia and Malaysia’s decision to block Grok reflects a regulatory instinct to control the tool rather than the behavior. While understandable, bans are blunt instruments. They do not eliminate demand; they simply redirect it, because the people generating these images will keep doing so wherever they get the chance.

The harder question remains unanswered: how do societies regulate usage without suffocating innovation? And can they really regulate usage, or does regulation amount to outright control?

Global Backlash vs. Pentagon Adoption: A Study in Contradictions

While Grok faces scrutiny from regulators like the UK’s Ofcom and restrictions in Southeast Asia, the U.S. Pentagon has announced plans to integrate Grok into its networks alongside Google’s AI systems.

This contrast is jarring but revealing.

For governments, AI is both a risk and a strategic asset. The same model capable of generating harmful content can also process intelligence, analyze threats, and optimize defense operations. Context changes everything.

This duality forces us to confront an uncomfortable truth: AI itself is not inherently ethical or unethical. Its morality is situational. What changes is how and where it is used.

The Pentagon’s adoption does not invalidate the concerns of victims of non-consensual image generation. Instead, it highlights the urgent need for layered governance: technical safeguards, platform accountability, legal frameworks, and cultural education working together rather than in isolation.

What This Moment Teaches Us About the Future of AI

The Grok controversy is not an isolated scandal. It is a preview of the controversies likely to arise across the AI industry.

As AI becomes more embedded into social platforms, workplaces, and public institutions, the line between tool and consequence will blur further.

Privacy, consent, and compliance will no longer be abstract principles; they will be daily negotiations.

Banning tools may feel decisive, but it does not address the root problem: how humans choose to use power when it becomes accessible. AI ethics is not just about smarter models and their mode of operation; it is about more responsible societies.

The question is no longer whether AI can do harm; it is whether we are willing to redesign systems, laws, and norms fast enough to keep up with what we have created, and whether we will do it the right way.

AI will continue to evolve. The real uncertainty lies in whether we will evolve with it or simply use these models for selfish and disturbing ends.
