The Photonics Revolution: Can Light-Based AI Chips Challenge Traditional Silicon?

In January 2026, a Dutch startup called Neurophos did something remarkable. It raised $110 million in Series A funding to build computer chips that think with light instead of electricity. That sounds too good to be true, right? If you are skeptical, you are not alone.

But with Microsoft, Bill Gates's climate fund, and major energy investors backing the company, the question worth asking is: is photonic computing finally ready to disrupt AI infrastructure?

The Power Problem Nobody Wants to Talk About

Traditional AI chips run on electricity, and not in the quantities that power your house. Projections show data center electricity consumption climbing at an alarming rate, with data centers and AI computing expected to consume between 620 and 1,050 terawatt-hours in 2026.

The growth alone is roughly equivalent to adding the entire annual electricity consumption of Sweden on the low end, or Germany if things get really crazy.
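
To put those figures in perspective, annual energy can be converted into the average continuous power it implies by dividing by the 8,760 hours in a non-leap year. A quick sketch, using only the 620 and 1,050 TWh endpoints quoted above:

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours in a non-leap year


def average_power_gw(annual_twh: float) -> float:
    """Convert annual energy in terawatt-hours to average continuous power in gigawatts."""
    # 1 TWh = 1,000 GWh; dividing GWh by hours gives average GW
    return annual_twh * 1_000 / HOURS_PER_YEAR


for twh in (620, 1_050):
    print(f"{twh:,} TWh/year is about {average_power_gw(twh):.0f} GW, around the clock")
```

That works out to roughly 71 to 120 GW of demand running nonstop, all year.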

Current GPUs are phenomenal at what they do, but they are also energy-hungry beasts that generate enormous heat. As AI models grow larger and inference workloads explode, we are approaching fundamental limitations.

You can only pack so many transistors onto a chip before physics says "no more." This is a problem that needs a different approach entirely.

Enter the Photon: Computing at the Speed of Light

This is where photonic computing gets interesting. Instead of pushing electrons through silicon transistors, these chips use photons, particles of light, to perform calculations.

Consider the theoretical advantages. Light travels at about 186,000 miles per second, optical signals generate less heat than electrical currents, and photonic signals don't interfere with each other the way electrical signals do (light at different wavelengths can even share the same waveguide), enabling massive parallelism.

The concept, however, isn't new. Researchers have been experimenting with optical computing for decades. What has changed is miniaturization.

Neurophos claims to have developed metamaterial optical modulators that are 10,000 times smaller than previous photonic elements. This breakthrough supposedly allows it to pack over one million optical processing elements onto a single chip, which it calls an Optical Processing Unit (OPU).

The company claims the OPU delivers 100 times the performance and energy efficiency of today's leading chips, with a projected efficiency of 300 TOPS (trillion operations per second) per watt. If accurate, this would represent a generational leap in computing capability.
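
What does 300 TOPS per watt mean in practice? Since 1 TOPS/W is 10^12 operations per second per watt, or 10^12 operations per joule, the figure translates directly into energy per operation. The sketch below assumes nothing beyond that unit conversion; the 3 TOPS/W baseline is a hypothetical chip 100 times less efficient, used only to illustrate what a 100x claim implies for a fixed workload.

```python
def joules_per_op(tops_per_watt: float) -> float:
    """Energy per operation implied by an efficiency figure in TOPS/W.

    1 TOPS/W = 1e12 operations per second per watt = 1e12 operations per joule.
    """
    ops_per_joule = tops_per_watt * 1e12
    return 1.0 / ops_per_joule


def workload_energy_joules(total_ops: float, tops_per_watt: float) -> float:
    """Energy needed to run a fixed number of operations at a given efficiency."""
    return total_ops * joules_per_op(tops_per_watt)


claimed = 300  # Neurophos's projected efficiency, as quoted above
baseline = 3   # hypothetical chip that is 100x less efficient

print(f"{joules_per_op(claimed) * 1e15:.2f} femtojoules per operation")
ops = 1e18  # hypothetical workload size
print(workload_energy_joules(ops, baseline) / workload_energy_joules(ops, claimed))
```

At 300 TOPS/W, each operation costs about 3.3 femtojoules, and any fixed workload needs one hundredth of the energy it would on the hypothetical baseline.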

The Pressing Need

Neurophos is not alone in this space, and recent demonstrations offer both encouragement and caution. Researchers from Shanghai Jiao Tong University and Tsinghua University built an all-optical chip called LightGen that proved more than 100 times faster and more energy efficient than a leading Nvidia GPU at video and image generation.

Another team created PACE, a chip that solved optimization problems 500 times faster than modern GPUs.

These are real results, but they highlight something crucial. The evidence suggests that photonic systems excel at specific tasks, particularly matrix multiplication and the kinds of parallel processing that AI inference relies on heavily. They are not replacements for general-purpose computing.
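
Why is matrix multiplication such a good target? In a dense neural-network layer, every output is a weighted sum of every input, so a layer with `rows x cols` weights needs `rows * cols` multiply-accumulate (MAC) operations per input vector. A minimal sketch of that operation and its cost; the 4096 x 4096 layer size is a hypothetical example, not a figure from any specific model or chip:

```python
def matvec(weights, x):
    """Dense matrix-vector product y = W x, the core operation photonic accelerators target."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def macs_per_vector(rows: int, cols: int) -> int:
    """Multiply-accumulate operations needed for one rows x cols matrix-vector product."""
    return rows * cols


W = [[1, 2, 3],
     [4, 5, 6]]
x = [1, 0, -1]
print(matvec(W, x))                 # [-2, -2]
print(macs_per_vector(4096, 4096))  # 16777216 MACs for one hypothetical layer
```

Every one of those MACs is independent of the others within a row's sum, which is exactly the kind of regular, parallel arithmetic an optical mesh can perform in a single pass of light.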

Potential Challenges

The biggest challenge is memory: no good optical memory solution has been found yet. Every time these systems need to store or retrieve data, they have to convert between optical and electrical signals. That conversion wastes about 30% of the energy and introduces latency.
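
A rough way to see what that overhead costs is to treat it as a fixed share of the total energy budget. The sketch below assumes the 30% figure means 30% of all energy goes to optical-electrical conversion, so only the remaining 70% does useful compute. The article does not say whether Neurophos's 300 TOPS/W projection already accounts for conversion, so applying it here is purely illustrative.

```python
def effective_tops_per_watt(compute_tops_per_watt: float, conversion_share: float) -> float:
    """Efficiency once optical <-> electrical conversion is included.

    Assumption: conversion_share is the fraction of *total* energy spent on
    conversion, so only (1 - conversion_share) of each joule does compute.
    Latency from conversion is not modeled here.
    """
    if not 0.0 <= conversion_share < 1.0:
        raise ValueError("conversion_share must be in [0, 1)")
    return compute_tops_per_watt * (1.0 - conversion_share)


print(effective_tops_per_watt(300, 0.30))  # roughly 210 TOPS/W
```

Under that assumption, the conversion step alone would pull a 300 TOPS/W compute core down to roughly 210 TOPS/W at the system level.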

Precision is another issue. Light-based systems are sensitive to temperature fluctuations, physical vibrations, and component misalignment in ways that traditional chips aren't. Manufacturing these devices at scale, maintaining consistent performance across millions of units, and building the software ecosystem developers need all remain open challenges.
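
Photonic accelerators compute in the analog domain, so physical disturbances show up directly as errors in the result. The toy model below illustrates the idea under a deliberately simple assumption: every output of a matrix-vector product picks up independent Gaussian noise of a chosen size (`noise_std`, a hypothetical parameter standing in for drift, vibration, and misalignment). Real devices have more structured error sources, so treat this as a sketch of the trend, not a model of any particular chip.

```python
import random


def matvec(weights, x):
    """Ideal (exact) matrix-vector product y = W x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def analog_matvec(weights, x, noise_std, rng):
    """Toy analog optical matrix-vector product.

    Assumption: each output gets independent Gaussian noise with standard
    deviation noise_std, a stand-in for thermal drift and misalignment.
    """
    return [y + rng.gauss(0.0, noise_std) for y in matvec(weights, x)]


def max_abs_error(a, b):
    """Largest element-wise deviation between two vectors."""
    return max(abs(p - q) for p, q in zip(a, b))


rng = random.Random(42)  # fixed seed so runs are repeatable
W = [[rng.uniform(-1, 1) for _ in range(64)] for _ in range(64)]
x = [rng.uniform(-1, 1) for _ in range(64)]
exact = matvec(W, x)

for noise_std in (0.001, 0.01, 0.1):
    approx = analog_matvec(W, x, noise_std, rng)
    print(f"noise_std={noise_std}: max error {max_abs_error(exact, approx):.4f}")
```

As the noise level grows, the error in every result grows with it, which is why keeping temperature and alignment stable matters so much more for optical hardware than for digital silicon.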

Neurophos is targeting a mid-2028 market launch, which tells you something about the timeline.

Why Microsoft Cares

You might wonder why Microsoft's AI Infrastructure team is getting involved. Dr. Marc Tremblay, a Corporate Vice President at Microsoft, put it bluntly: modern AI inference demands monumental amounts of power and compute, requiring a breakthrough on par with the leaps seen in AI models themselves.

That is the key insight. As AI moves from training models to deploying them at scale, serving billions of inference requests daily, the economics change dramatically. Energy efficiency becomes paramount. Even incremental improvements compound across massive data center deployments.
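
The arithmetic behind "incremental improvements compound" is simple, with one subtlety: a chip that does 10% more work per joule does not save 10% of the energy. The sketch below uses a hypothetical 100 TWh fleet, not a figure from the article, to show how energy savings scale with an efficiency gain for the same amount of work.

```python
def annual_savings_twh(fleet_twh: float, efficiency_gain: float) -> float:
    """Energy saved per year when the same work runs on more efficient hardware.

    efficiency_gain is extra work per joule: 0.10 means 10% more work per joule.
    Doing the same work then needs fleet_twh / (1 + efficiency_gain) energy.
    """
    return fleet_twh - fleet_twh / (1.0 + efficiency_gain)


fleet = 100.0  # hypothetical annual inference energy, in TWh
for gain in (0.10, 1.0, 99.0):  # 10% better, 2x, and 100x efficiency
    print(f"{gain + 1:.1f}x efficiency: saves {annual_savings_twh(fleet, gain):.1f} TWh/year")
```

A modest 10% efficiency gain saves about 9 TWh a year on such a fleet, which is why even small per-chip improvements matter at data center scale.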

This is why photonic computing matters even if it doesn't "replace" GPUs in the traditional sense.

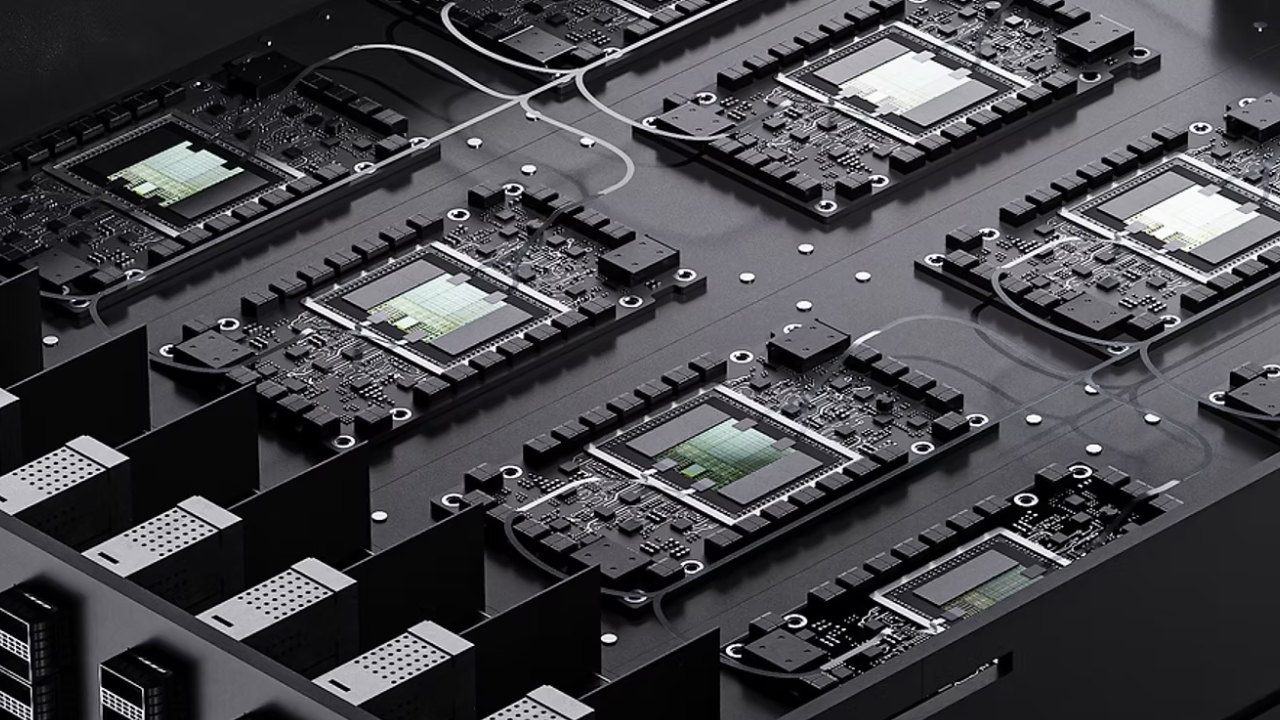

The future will likely involve hybrid architectures: traditional silicon for general computing and photonic accelerators for the specific AI workloads where they excel, all working together in data centers increasingly powered by renewable energy.

Neurophos's partnership with Norwegian data centers focused on renewable power reflects exactly this strategy.

The Future Is Here

Photonic computing for AI is probably happening, but it is going to be evolution rather than revolution. By 2028-2030, we will likely see photonic chips handling specialized inference tasks in cloud environments, delivering real efficiency gains for specific applications.

These won't replace GPUs wholesale, but they will carve out meaningful niches where their strengths shine.

The $110 million bet on Neurophos reflects a broader truth about where we are in AI infrastructure. The easy gains from traditional scaling are behind us. The next phase requires physics-level innovation, whether that is photonics, new chip architectures, better cooling, or all of the above.

For startups and founders, the message is clear: the infrastructure layer is where the massive investments are flowing. Whether photonic chips specifically succeed or not, the AI power crisis is real, and whoever solves it will capture enormous value. That is worth paying attention to, even if you are skeptical about the timeline.

The light-based revolution might not arrive tomorrow, but it is no longer a question of "if." Instead, it is a question of "when" and "how much impact." That is a pretty big shift from where we were just a few years ago.
