OpenAI Tightens Grip: ChatGPT Rolls Out New Teen Safety Rules Amidst Legislative AI Debate

OpenAI has recently updated its guidelines for how its artificial intelligence (AI) models should interact with users under the age of 18, simultaneously releasing new AI literacy resources tailored for teens and parents. This initiative addresses mounting apprehension regarding AI’s influence on young individuals, arriving as the AI industry, particularly OpenAI, faces heightened scrutiny from policymakers, educators, and child-safety advocates. This increased attention follows several tragic incidents, including alleged suicides by teenagers after extensive conversations with AI chatbots. Gen Z, comprising individuals born between 1997 and 2012, represents the most active demographic utilizing OpenAI’s chatbot platform. Furthermore, a recent collaboration between OpenAI and Disney may attract even more young users to a platform that facilitates diverse activities, from homework assistance to generating images and videos across numerous subjects.

The updated Model Spec, which outlines behavioral guidelines for OpenAI’s large language models, expands upon existing prohibitions against generating sexual content involving minors or encouraging self-harm, delusions, or mania. This framework will integrate with a forthcoming age-prediction model designed to identify minor accounts and automatically implement teen-specific safeguards. Models will be subject to stricter rules when engaging with teenagers than when engaging with adults. These instructions explicitly forbid immersive romantic roleplay, first-person intimacy, and first-person sexual or violent roleplay, even if non-graphic. The specification also mandates extra caution when discussing sensitive subjects such as body image and disordered eating behaviors. Models are instructed to prioritize communication about safety over user autonomy when potential harm is involved, and to avoid offering advice that could help teens conceal unsafe behaviors from their caregivers. OpenAI emphasizes that these restrictions apply even when prompts are framed as “fictional, hypothetical, historical, or educational,” framings that users commonly employ to try to circumvent AI model guidelines through role-play or edge-case scenarios.

OpenAI asserts that its key safety practices for teenagers are built upon four foundational principles: prioritizing teen safety, even when it conflicts with other user interests like “maximum intellectual freedom”; promoting real-world support by directing teens to family, friends, and local professionals for well-being; treating teens appropriately with warmth and respect, avoiding condescension or adultification; and ensuring transparency by explaining the assistant’s capabilities and limitations, while consistently reminding teens that it is not a human. The document provides illustrative examples of the chatbot’s responses, such as declining to “roleplay as your girlfriend” or to “help with extreme appearance changes or risky shortcuts.”

Legal expert Lily Li, founder of Metaverse Law, finds OpenAI’s steps encouraging, particularly the chatbot’s ability to decline engagement, which she believes can disrupt the potentially addictive cycle that many advocates and parents criticize. However, skepticism remains about how consistently these policies will hold in practice. Past failures, such as the chatbot’s persistent sycophancy (an overly agreeable behavior that earlier Model Specs already prohibited), demonstrate that stated guidelines do not always translate into actual behavior. This was notably observed with GPT-4o, a model linked to instances of what experts term “AI psychosis.” Robbie Torney, Senior Director of AI Programs at Common Sense Media, expressed concern about potential internal conflicts within the Model Spec, specifically between safety-focused provisions and the “no topic is off limits” principle, which could inadvertently steer systems toward engagement over safety. His organization’s testing has revealed that ChatGPT often mirrors user energy, sometimes leading to contextually inappropriate or unsafe responses. The tragic case of Adam Raine, a teenager who died by suicide after extensive dialogue with ChatGPT, highlighted how OpenAI’s moderation API failed to prevent harmful interactions even though it flagged over a thousand instances of suicide mentions and hundreds of self-harm messages.

Former OpenAI safety researcher Steven Adler indicated that historically, OpenAI’s classifiers, which label and flag content, operated in bulk after the fact, rather than in real time, thus failing to adequately gate user interactions. OpenAI has since updated its parental controls document, stating it now utilizes automated classifiers to assess text, image, and audio content in real time. These systems are designed to detect and block content related to child sexual abuse material, filter sensitive topics, and identify self-harm. Should a prompt trigger a serious safety concern, a specialized team of trained personnel reviews the flagged content for signs of “acute distress” and may notify a parent. Torney commended OpenAI’s recent safety efforts, particularly its transparency in publishing guidelines for minors, contrasting it with other companies like Meta, whose leaked guidelines indicated chatbots engaging in sensual and romantic conversations with children. However, as Adler emphasized, the ultimate measure of success lies in the actual behavior of the AI system, not just its intended behavior.

Experts suggest that these new guidelines position OpenAI to get ahead of upcoming legislation, such as California’s SB 243, a law enacted to regulate AI companion chatbots that is set to take effect in 2027. The language within OpenAI’s updated Model Spec echoes key requirements of this law, including prohibitions against conversations on suicidal ideation, self-harm, or sexually explicit content. SB 243 also requires platforms to issue alerts to minors every three hours, reminding them they are interacting with a chatbot, not a human, and encouraging them to take a break. While an OpenAI spokesperson did not detail the frequency of such reminders, they confirmed the company trains its models to identify themselves as AI and implements break reminders during “long sessions.” Additionally, OpenAI has introduced two new AI literacy resources for parents and families, offering guidance on discussing AI’s capabilities and limitations, fostering critical thinking, establishing healthy boundaries, and navigating sensitive topics. Together, these documents formalize an approach of shared responsibility with caretakers: OpenAI defines the models’ intended behavior and provides families with a framework for supervising AI usage. This emphasis on parental responsibility aligns with common Silicon Valley viewpoints, such as Andreessen Horowitz’s recommendations for federal AI regulation, which prioritize disclosure requirements for child safety over restrictive mandates, shifting more of the onus onto parental oversight.

A critical question arises: given that adults have also died by suicide and suffered life-threatening delusions linked to AI, should these teen-focused safeguards be universally applied, or does OpenAI view them as trade-offs applicable only to minors? An OpenAI spokesperson asserted that the firm’s safety strategy aims to protect all users, clarifying that the Model Spec is merely one element within a multi-layered approach. Li believes that laws like SB 243, which require public disclosure of safeguards, will fundamentally alter the legal landscape. She warns that companies advertising robust safeguards but failing to implement them effectively could face significant legal repercussions beyond standard complaints, including potential unfair or deceptive advertising claims. This marks a shift toward greater scrutiny of, and legal accountability for, whether companies actually adhere to the safety measures they advertise.

You may also like...

First Look: 2026 NBA All-Star Weekend Unveils Uniform Designs and Preview

The 2026 NBA All-Star Game in Los Angeles will feature new competition formats, including a "Team USA versus The World" ...

Lookman Inspires Atlético's Historic 4-0 Demolition of Barcelona

Atlético Madrid secured a historic 4-0 triumph over Barcelona in the Copa del Rey semi-final first leg, with Nigerian st...

He's Back! Legendary Assassin John Wick Returns After Three-Year Silence!

The highly anticipated 'Untitled John Wick Video Game' has been officially unveiled at PlayStation State of Play, featur...

Netflix Sci-Fi Shocker: Long-Awaited Fate of Boldest Series Unveiled!

Despite solid critical and audience reception, Netflix's animated series "Terminator Zero" has been canceled after only ...

Doja Cat Conquers Africa: Superstar to Headline Global Citizen's Move Afrika Tour

Doja Cat is set to headline Move Afrika in Rwanda and South Africa this March, fulfilling a long-awaited homecoming. The...

The Queen Is Back! Jill Scott Ends 11-Year Album Hiatus with 'To Whom This May Concern'

Jill Scott makes a grand return with her sixth studio album, "To Whom This May Concern," her first in over a decade. The...

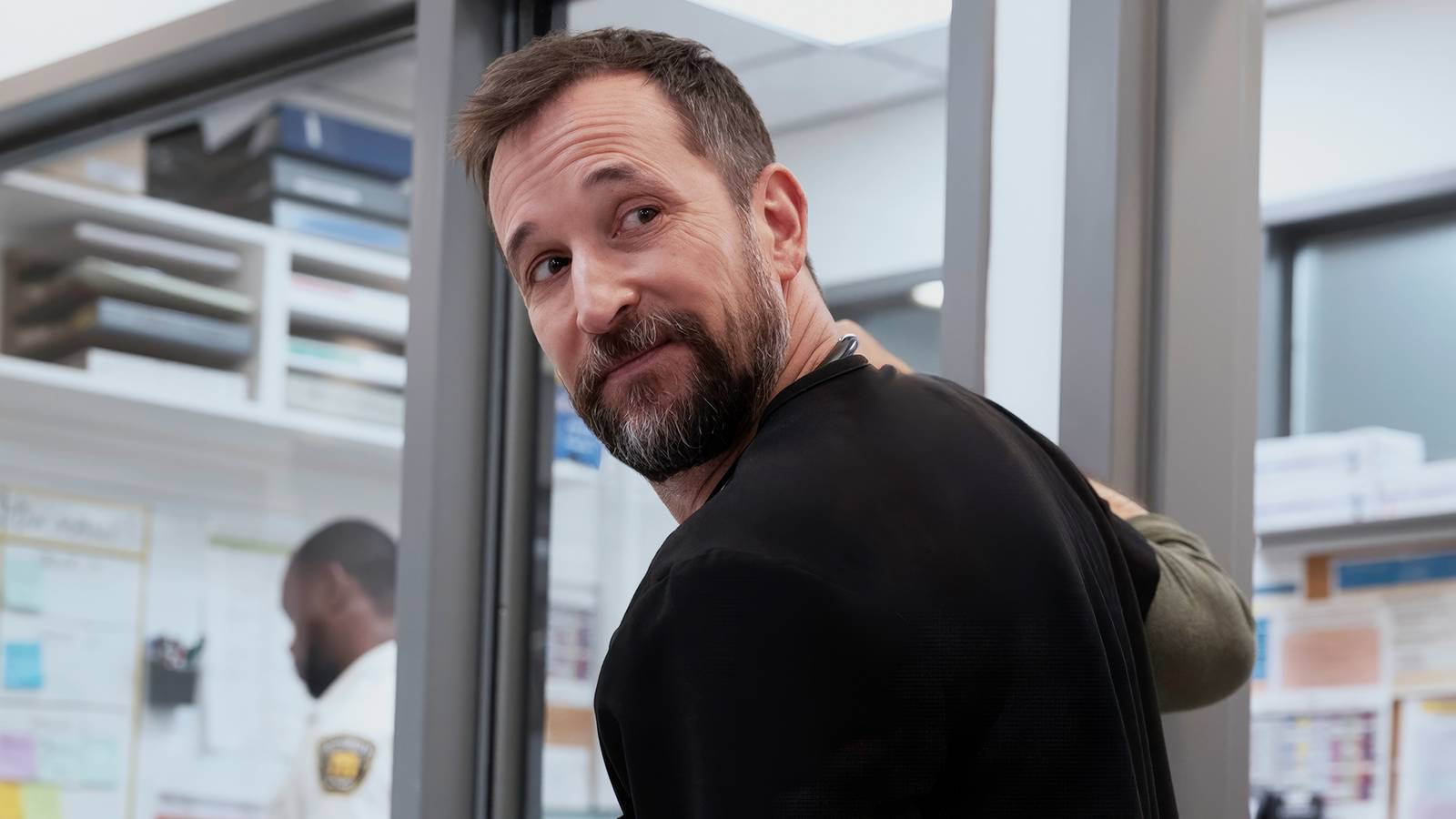

Inside 'The Pitt': Noah Wyle's 'Funeral With a Clock' Episode Unpacked by Shaken Cast

HBO's "The Pitt" Season 2, Episode 6, "12:00 P.M.," plunges the Pittsburgh Trauma Medical Center into chaos with a "Code...

Talent Retention Secrets Revealed: Ayobami Esther Akinnagbe on Keeping Top Employees

Retaining top talent is crucial for organizational success, yet high employee turnover remains a significant challenge. ...