OpenAI Unveils Next‑Gen Codex Spark Powered by Dedicated AI Chip

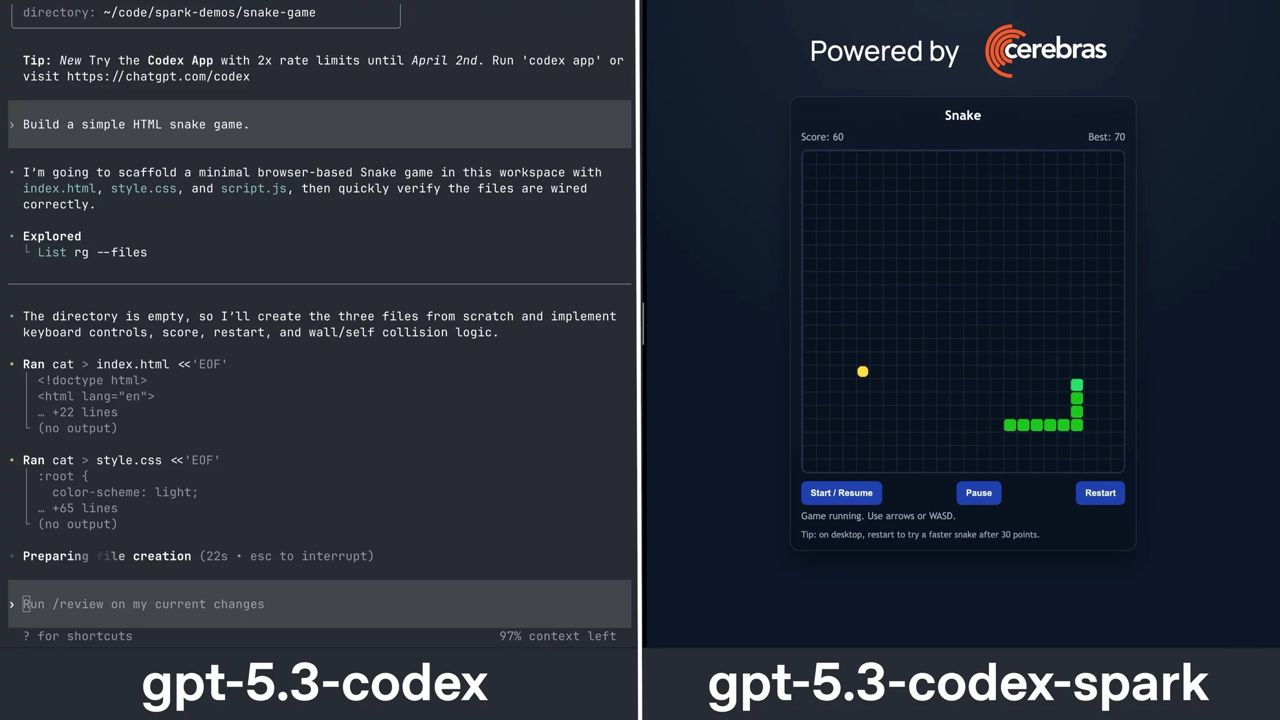

OpenAI has officially launched GPT-5.3-Codex-Spark, a lightweight yet highly capable iteration of its agentic coding tool, Codex, which was initially released earlier this month.

Designed as a “smaller version” of the larger GPT-5.3 model, Spark is engineered for significantly faster inference, enabling developers to iterate rapidly on code, prototype solutions in real time, and streamline daily programming workflows.

A central factor behind Spark’s speed is OpenAI’s partnership with Cerebras, leveraging the hardware company’s Wafer Scale Engine 3 (WSE-3).

This third-generation wafer-scale chip packs 4 trillion transistors, providing very high computational density and bandwidth.

The collaboration, part of a multi-year agreement valued at over $10 billion, reflects a strategic integration of AI software and purpose-built hardware, marking Spark as the “first milestone” in this deeper infrastructure partnership.

OpenAI positions GPT-5.3-Codex-Spark as a daily productivity driver, focusing on rapid, real-time coding tasks.

Unlike the original GPT-5.3, which is optimized for more extensive reasoning and long-running operations, Spark emphasizes ultra-low latency interactions, enabling developers to get immediate feedback and test code almost instantaneously.

OpenAI CEO Sam Altman hinted at the release on X (formerly Twitter) ahead of the announcement: “We have a special thing launching to Codex users on the Pro plan later today. It sparks joy for me.”

Currently, Spark is available as a research preview for ChatGPT Pro users via the Codex app, inviting developers to experiment with its high-speed capabilities.

OpenAI envisions two complementary modes for Codex: Spark for real-time collaboration and rapid iteration, and the full GPT-5.3 for complex, resource-intensive coding tasks.

This dual-mode approach aims to cater to both daily productivity and deep computational needs.

Cerebras, a company renowned for its decade-long AI hardware expertise, has seen its prominence skyrocket in recent years.

Just last week, the company announced a $1 billion fundraising round, boosting its valuation to $23 billion and laying the groundwork for a potential IPO.

Cerebras CTO and Co-founder Sean Lie expressed his excitement about Spark, highlighting the potential for “new interaction patterns, new use cases, and a fundamentally different model experience” enabled by ultra-fast inference.

He described the preview as “just the beginning” of what the partnership with OpenAI and the broader developer community could unlock.

With GPT-5.3-Codex-Spark, OpenAI is not only advancing the speed and efficiency of AI-assisted coding, but also redefining the developer experience, offering a tool that can keep up with the fast pace of modern software development and experimental AI projects.

Analysts suggest that this move positions OpenAI and Cerebras at the forefront of next-generation AI infrastructure, combining software intelligence with cutting-edge hardware to create a new paradigm for AI productivity.
