Character.AI Closes Chatbot Doors to Kids: Minors Strictly Banned!

Character.AI, a leading AI role-playing startup, is taking significant steps to protect minors by banning users under 18 from engaging in open-ended chats with its AI chatbots. This major policy change, set to be fully implemented by November 25, begins immediately with a two-hour daily usage limit.

The decision by Character Technologies, the company behind Character.AI, which is based in Menlo Park, California, follows growing concerns over the potential psychological effects of AI conversations on children. These concerns have been intensified by multiple lawsuits related to child safety, including allegations that the company’s chatbots contributed to a teenager’s suicide.

CEO Karandeep Anand explained that the company’s goal is to transition Character.AI from an “AI companion” model to a “role-playing platform.” The shift will move user engagement away from unlimited conversations toward collaborative creation. Rather than extended back-and-forth chats, the platform will now encourage teens to use prompts for story-building, visual generation, and interactive entertainment experiences.

New features will include AvatarFX for video generation, Scenes for interactive storylines, and Streams for dynamic character interactions, alongside a Community Feed where users can share their creations.

To enforce the age restriction, Character.AI will introduce age-verification tools that combine internal behavioral analytics, third-party systems such as Persona, and, if required, facial recognition and government ID checks. The company recognizes the privacy concerns and technical challenges these methods raise, particularly since minors often find ways to bypass such systems, but maintains that the steps are necessary to ensure user safety.

Anand acknowledged that these measures might cause “some churn” among underage users, as restrictions may not be well received, but emphasized that the tradeoff is essential for creating a safer AI environment.

As part of its broader safety initiative, the company is also launching and funding an independent nonprofit, the AI Safety Lab, dedicated to advancing safety alignment in AI-driven entertainment. The lab aims to fill a critical gap in industry research, which has primarily focused on productivity and enterprise AI applications.

Character.AI’s proactive approach comes as lawmakers consider stricter regulations on AI chatbot companions for minors. U.S. Senators Josh Hawley and Richard Blumenthal recently announced plans to introduce legislation banning AI chatbots for under-18 users. Meanwhile, California has become the first U.S. state to regulate AI companions, holding companies legally accountable for noncompliance with safety standards.

The move has received a mixed response. Critics like Meetali Jain, executive director of the Tech Justice Law Project, praised the restriction but questioned its timing and execution. Jain cited a lack of clarity around privacy-preserving verification systems and expressed concern about the psychological effects of abruptly cutting off young users who may have formed emotional dependencies on AI chatbots. She also noted that the new measures fail to address the design mechanisms that foster such dependencies among users of all ages.

These concerns are reinforced by a Common Sense Media study, which found that over 70% of teens have used AI companions, and half of them interact with such systems regularly.

Anand expressed hope that Character.AI’s decision would “set an industry standard that for under-18s, open-ended chats are probably not the right product to offer.” This move follows similar controversies faced by other AI platforms, including OpenAI’s ChatGPT, which came under scrutiny after reports linked a teenager’s suicide to prolonged interactions with its chatbot.

Character.AI’s leadership reaffirmed its commitment to ensuring that children grow up in a safe and responsible AI environment, a mission Anand said is driven by his personal experiences as a parent.

You may also like...

Super Eagles Fury! Coach Eric Chelle Slammed Over Shocking $130K Salary Demand!

)

Super Eagles head coach Eric Chelle's demands for a $130,000 monthly salary and extensive benefits have ignited a major ...

Premier League Immortal! James Milner Shatters Appearance Record, Klopp Hails Legend!

Football icon James Milner has surpassed Gareth Barry's Premier League appearance record, making his 654th outing at age...

Starfleet Shockwave: Fans Missed Key Detail in 'Deep Space Nine' Icon's 'Starfleet Academy' Return!

Starfleet Academy's latest episode features the long-awaited return of Jake Sisko, honoring his legendary father, Captai...

Rhaenyra's Destiny: 'House of the Dragon' Hints at Shocking Game of Thrones Finale Twist!

The 'House of the Dragon' Season 3 teaser hints at a dark path for Rhaenyra, suggesting she may descend into madness. He...

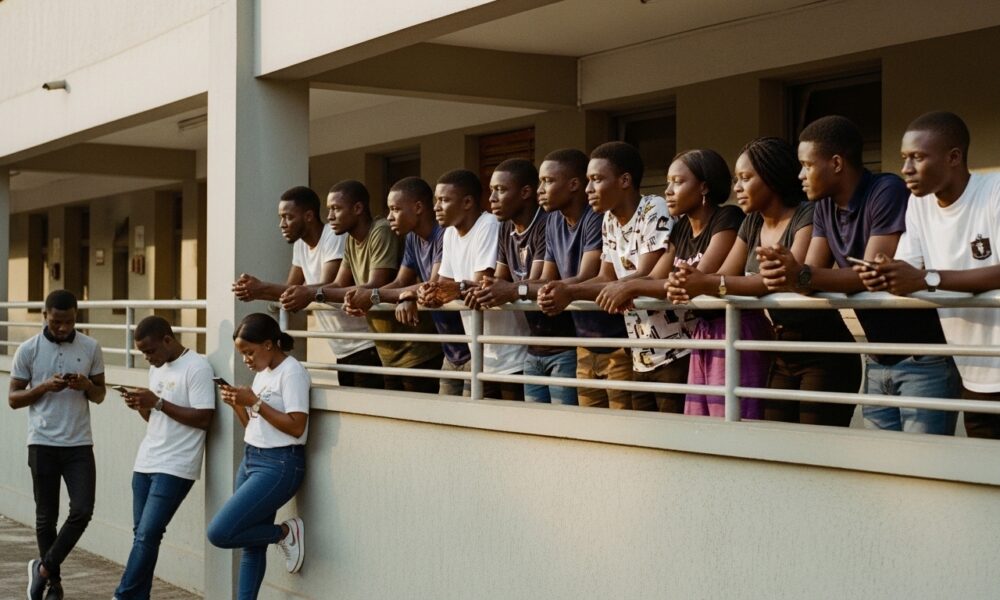

Amidah Lateef Unveils Shocking Truth About Nigerian University Hostel Crisis!

Many university students are forced to live off-campus due to limited hostel spaces, facing daily commutes, financial bu...

African Development Soars: Eswatini Hails Ethiopia's Ambitious Mega Projects

The Kingdom of Eswatini has lauded Ethiopia's significant strides in large-scale development projects, particularly high...

West African Tensions Mount: Ghana Drags Togo to Arbitration Over Maritime Borders

Ghana has initiated international arbitration under UNCLOS to settle its long-standing maritime boundary dispute with To...

Indian AI Arena Ignites: Sarvam Unleashes Indus AI Chat App in Fierce Market Battle

Sarvam, an Indian AI startup, has launched its Indus chat app, powered by its 105-billion-parameter large language model...