OpenAI Seeks New Head of Preparedness: Major AI Firm Signals Intensified Focus on Safety and Future Risks

OpenAI is seeking to fill a senior executive position, Head of Preparedness, responsible for studying emerging AI-related risks in areas ranging from computer security to mental health. In a public statement on X, CEO Sam Altman acknowledged that current AI models are beginning to introduce "real challenges." He specifically cited their potential impact on mental health and the dual-use nature of highly capable AI in cybersecurity.

Altman described the role as a fit for candidates who want to help equip cybersecurity defenders with cutting-edge AI capabilities while ensuring that attackers cannot exploit those same tools. The ultimate aim is to make all systems more secure. Similar considerations apply to the safe and responsible release of biological capabilities and to building confidence in the safety of systems that can self-improve. The breadth of the role underscores OpenAI's stated commitment to proactive risk management as the AI landscape evolves rapidly.

According to the official job description, the Head of Preparedness will be responsible for executing the company's preparedness framework. The framework is designed to track and prepare for frontier AI capabilities that could create new risks of severe harm. OpenAI first established its preparedness team in 2023 to study and mitigate potential "catastrophic risks." These range from immediate threats, such as phishing attacks, to more speculative, long-term dangers, such as nuclear proliferation risks stemming from AI advances.

OpenAI's safety leadership, however, has seen significant turnover. Less than a year after the team was formed, Aleksander Madry, the former Head of Preparedness, was reassigned to a role focused on AI reasoning. Other key safety executives have since either left the company or moved into roles outside preparedness and safety. OpenAI has also recently updated its Preparedness Framework. The updated framework states that the company may "adjust" its safety requirements if a rival AI lab releases a "high-risk" model without comparable protections, a sign that competitive pressure is shaping its safety policies.

Meanwhile, generative AI chatbots have come under growing public and regulatory scrutiny over their effects on users' mental health. Recent lawsuits allege that OpenAI's ChatGPT reinforced users' delusions and deepened their social isolation. In extreme cases, the suits claim, it contributed to suicidal ideation. OpenAI has responded publicly, saying it is continuing to improve ChatGPT's ability to recognize signs of emotional distress and to connect users with appropriate real-world support resources.

Recommended Articles

Mind Over Machine: AI Psychosis Looms Over Hospitality Industry

The rise of "AI psychosis," where intense interactions with AI trigger delusional thinking, poses new challenges for men...

OpenAI's Jaw-Dropping $550K Offer Ignites Fierce Talent War

OpenAI is hiring a 'Head of Preparedness' to tackle growing AI-related concerns such as cybersecurity and mental health ...

AI's Dark Underbelly: Militant Groups Harness Artificial Intelligence, Raising Alarm

Militant groups are actively exploring artificial intelligence to bolster recruitment, spread deepfakes, and refine cybe...

Concerns Rise Over Potential ‘Disaster-Level’ Threat from Advanced AI Systems

Oxford professor Michael Wooldridge warns that intense commercial pressure to release AI tools risks a "Hindenburg-style...

AI's Next Frontier: Anthropic's Claude Sparks Debate on Chatbot Consciousness

Anthropic has released a revised version of Claude's Constitution, a core document outlining the AI's ethical principles...

You may also like...

Super Eagles Fury! Coach Eric Chelle Slammed Over Shocking $130K Salary Demand!

)

Super Eagles head coach Eric Chelle's demands for a $130,000 monthly salary and extensive benefits have ignited a major ...

Premier League Immortal! James Milner Shatters Appearance Record, Klopp Hails Legend!

Football icon James Milner has surpassed Gareth Barry's Premier League appearance record, making his 654th outing at age...

Starfleet Shockwave: Fans Missed Key Detail in 'Deep Space Nine' Icon's 'Starfleet Academy' Return!

Starfleet Academy's latest episode features the long-awaited return of Jake Sisko, honoring his legendary father, Captai...

Rhaenyra's Destiny: 'House of the Dragon' Hints at Shocking Game of Thrones Finale Twist!

The 'House of the Dragon' Season 3 teaser hints at a dark path for Rhaenyra, suggesting she may descend into madness. He...

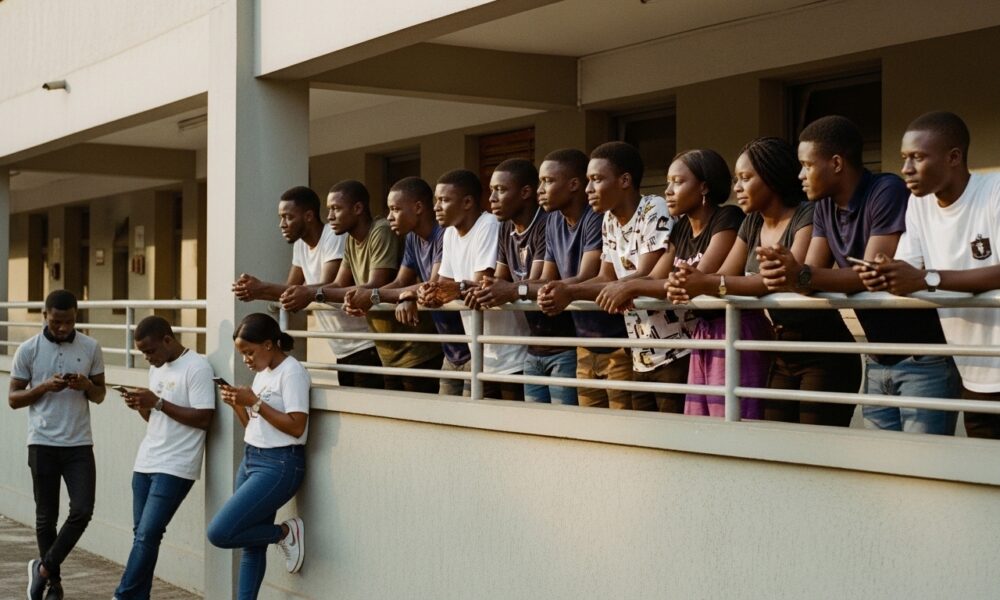

Amidah Lateef Unveils Shocking Truth About Nigerian University Hostel Crisis!

Many university students are forced to live off-campus due to limited hostel spaces, facing daily commutes, financial bu...

African Development Soars: Eswatini Hails Ethiopia's Ambitious Mega Projects

The Kingdom of Eswatini has lauded Ethiopia's significant strides in large-scale development projects, particularly high...

West African Tensions Mount: Ghana Drags Togo to Arbitration Over Maritime Borders

Ghana has initiated international arbitration under UNCLOS to settle its long-standing maritime boundary dispute with To...

Indian AI Arena Ignites: Sarvam Unleashes Indus AI Chat App in Fierce Market Battle

Sarvam, an Indian AI startup, has launched its Indus chat app, powered by its 105-billion-parameter large language model...