The Mind Forgers: Is Social Media Building Echo Chambers That Break Our Reality?

In an increasingly digital world, social media platforms promise connection and diverse information.

Yet, a darker reality has emerged: these platforms, driven by algorithms designed for engagement, are creating sophisticated echo chambers and filter bubbles that inadvertently "program our minds" by relentlessly feeding us information that confirms existing beliefs.

This article will expose how these personalized digital environments, while comforting, are increasingly fragmenting societies, fueling misinformation, exacerbating polarization, and profoundly shaping our individual and collective understanding of reality.

Algorithmic Architecture: Fueling Echo Chambers

The algorithms of major social media platforms, such as Facebook, TikTok, and X, specifically contribute to the formation and reinforcement of echo chambers and filter bubbles through their core design principles, which aim to maximize user engagement. These algorithms operate as powerful "mind forgers," shaping what we see and believe, often without our conscious awareness.

At their core, these algorithms are designed to predict and deliver content that users are most likely to interact with, whether through likes, shares, comments, or prolonged viewing. This objective is achieved by analyzing vast amounts of user data: past interactions, search history, time spent on certain posts, and connections with other users.

This phenomenon is often termed the "filter bubble," a concept popularized by Eli Pariser, where algorithms selectively guess what information a user would like to see, based on their past online behavior, thereby isolating them from conflicting viewpoints.
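To make the engagement-ranking idea concrete, here is a minimal Python sketch. It is not any platform's actual system: the `SIGNAL_WEIGHTS` values, the `Interaction` and `Post` types, and the `topic_affinity` and `rank_feed` helpers are all hypothetical, chosen only to show how past likes, comments, shares, and watch time can be turned into a ranking that favors topics a user already engages with.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical weights per interaction signal; real platforms do not publish theirs.
SIGNAL_WEIGHTS = {"like": 1.0, "comment": 2.0, "share": 3.0, "watch_seconds": 0.1}


@dataclass
class Interaction:
    topic: str
    signal: str          # one of the SIGNAL_WEIGHTS keys
    amount: float = 1.0  # e.g. seconds watched for "watch_seconds"


@dataclass
class Post:
    post_id: str
    topic: str


def topic_affinity(history: list[Interaction]) -> defaultdict[str, float]:
    """Sum weighted past interactions per topic (a crude engagement predictor)."""
    scores: defaultdict[str, float] = defaultdict(float)
    for event in history:
        scores[event.topic] += SIGNAL_WEIGHTS.get(event.signal, 0.0) * event.amount
    return scores


def rank_feed(candidates: list[Post], history: list[Interaction]) -> list[Post]:
    """Order candidates by predicted engagement, highest first.

    Topics the user has never interacted with score 0.0 and sink to the bottom.
    """
    affinity = topic_affinity(history)
    return sorted(candidates, key=lambda post: affinity[post.topic], reverse=True)


history = [
    Interaction("cooking", "like"),
    Interaction("politics_a", "share"),
    Interaction("politics_a", "watch_seconds", 40),
]
candidates = [Post("p1", "cooking"), Post("p2", "politics_b"), Post("p3", "politics_a")]
print([post.post_id for post in rank_feed(candidates, history)])  # ['p3', 'p1', 'p2']
```

Nothing in this toy scoring rewards a topic the user has not already engaged with: the opposing viewpoint (`politics_b`) lands last simply because there is no history for it, which is the mechanical core of the filter bubble described above.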


On platforms like TikTok, the "For You Page" exemplifies this. It learns user preferences at an astonishing speed, not just from explicit interactions but also from subtle cues such as how long a video is watched or how quickly it is scrolled past.

This hyper-personalization can rapidly lead users down rabbit holes of increasingly specific content, reinforcing niche interests or extreme viewpoints. If a user watches a few videos related to a conspiracy theory, the algorithm will subsequently offer more of that content, assuming it aligns with their interests.

This creates a powerful echo chamber, where users are primarily exposed to voices and opinions that echo their own, further solidifying existing beliefs and making dissenting opinions appear rare or irrelevant.
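The rabbit-hole dynamic can be illustrated with a deliberately simplified Python simulation. It assumes a purely greedy ranker and a user who watches everything shown; the `build_feed` and `simulate` helpers, the topics, and the starting numbers are hypothetical and are not TikTok's or any other platform's code.

```python
from collections import Counter

Post = tuple[str, str]  # (post_id, topic)


def build_feed(affinity: dict[str, float], pool: list[Post], slots: int) -> list[Post]:
    """Greedy ranking: highest-affinity topics first, truncated to the feed size."""
    ranked = sorted(pool, key=lambda post: affinity.get(post[1], 0.0), reverse=True)
    return ranked[:slots]


def simulate(affinity: dict[str, float], pool: list[Post], rounds: int, slots: int = 4):
    """Run the show -> watch -> update loop and record each round's topic mix."""
    affinity = dict(affinity)  # copy so the caller's dict is not mutated
    mixes = []
    for _ in range(rounds):
        feed = build_feed(affinity, pool, slots)
        for _post_id, topic in feed:
            affinity[topic] = affinity.get(topic, 0.0) + 1.0  # each view reinforces the topic
        mixes.append(Counter(topic for _post_id, topic in feed))
    return mixes, affinity


topics = ["cooking", "sports", "conspiracy"]
pool = [(f"{topic}-{i}", topic) for topic in topics for i in range(4)]
# Existing cooking and sports interests, plus three conspiracy videos just watched.
start = {"cooking": 2.0, "sports": 2.0, "conspiracy": 3.0}
mixes, final = simulate(start, pool, rounds=5)
print(mixes[0])  # Counter({'conspiracy': 4})
print(final)     # {'cooking': 2.0, 'sports': 2.0, 'conspiracy': 23.0}
```

Although conspiracy content makes up less than half of the user's starting affinity (3 of 7), it fills every slot from the first round on, and each round widens the gap. Real recommenders add exploration and diversity terms, so lock-in is rarely this absolute; the sketch isolates only the reinforcement loop.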

X (formerly Twitter), while historically more open, also suffers from this. Its "For You" algorithm promotes tweets based on engagement signals, often pushing trending topics or content from accounts users frequently interact with.

While users can follow diverse accounts, the algorithmic feed can still prioritize content that sparks strong emotional responses, and such content frequently confirms what users already believe, effectively creating a personalized stream of validating information.

The viral nature of retweets and replies can quickly amplify homogeneous perspectives within a user's network, limiting exposure to alternative viewpoints.

Research by the Pew Research Center has consistently highlighted how social media usage correlates with increased political polarization, with users often reporting seeing content that aligns with their existing views.

Furthermore, these algorithms inadvertently leverage human cognitive biases, particularly confirmation bias (the tendency to seek out information that supports one's existing beliefs) and ingroup bias (the tendency to favor one's own group).

By consistently feeding users content that confirms their biases, platforms create a self-reinforcing loop.

This not only validates users' existing realities but can also lead to the de-legitimization of dissenting viewpoints or sources of information that challenge the established "truth" within their echo chamber.

Psychological and Societal Impacts: Fractured Realities

Prolonged exposure to these highly curated information environments exacts measurable psychological and societal impacts, significantly eroding critical thinking, diminishing empathy, and fragmenting social cohesion.

The comfort of the echo chamber comes at a steep price, creating fractured realities that undermine the foundations of informed public discourse.


On a psychological level, the constant validation within an echo chamber can severely impair critical thinking.

When individuals are consistently exposed only to information that confirms their existing beliefs, they lose the incentive and the practice of evaluating opposing arguments or questioning their own assumptions.

This can lead to cognitive rigidity, where individuals become less adaptable to new information and less willing to engage in nuanced thought.

The absence of intellectual challenge can foster a false sense of certainty and intellectual superiority, making individuals less likely to seek out diverse perspectives or engage in healthy skepticism. This overconfidence resembles the Dunning-Kruger effect, in which people with limited knowledge of a subject overestimate their own competence.

The impact on empathy is equally concerning. When individuals are primarily exposed to their own "ingroup's" perspectives and narratives, often amplified through emotionally charged content, it becomes difficult to understand or relate to the experiences and motivations of "outgroups."

Echo chambers often demonize or caricature opposing viewpoints, stripping away their humanity and making it harder for individuals to feel empathy or find common ground. This reduction in empathy is a significant barrier to constructive dialogue and peaceful conflict resolution within diverse societies.

The polarization observed in political discourse, often exacerbated by social media, stems in part from this inability to see beyond one's own bubble and appreciate the legitimate concerns of others. Research from organizations like the Knight Foundation has highlighted how fragmented information environments deepen ideological divides.

At a societal level, the proliferation of echo chambers profoundly fragments social cohesion. When different segments of society inhabit entirely distinct information realities, built on differing "facts" and narratives, it becomes incredibly challenging to address shared problems or agree on common goals.

This divergence fuels misinformation and disinformation, as false or misleading narratives can spread unchecked within isolated communities, gaining credibility through repetition and lack of counter-evidence.

The erosion of a shared sense of truth is perhaps the most dangerous consequence, as it undermines the very basis of a functioning democracy and public trust. Without a common understanding of facts, reasoned debate becomes impossible, leading to increased political extremism, social unrest, and a general distrust of institutions.

The long-term implication is a society fractured not just along traditional lines, but along lines of perceived digital reality, making collective action and consensus-building an increasingly remote possibility.

Mitigating the Trap: Solutions for Shared Reality

Addressing the negative effects of echo chambers requires a multifaceted approach that extends beyond individual responsibility, encompassing platform design, rigorous content moderation, and robust media literacy initiatives. Radical solutions are needed to foster a more inclusive and truthful digital public sphere.

Platform design plays a crucial role. While algorithms aim for engagement, future designs must prioritize broader exposure and critical engagement over pure click maximization. Solutions could include:

Algorithmic transparency: Allowing researchers and the public to understand how content is prioritized.

'Serendipity by Design': Actively introducing users to high-quality content from diverse, credible perspectives outside their usual consumption patterns. This could involve "stitching" content from different viewpoints into a user's feed or labeling content with ideological leanings.

"Friction" mechanisms: Introducing small hurdles (e.g., asking users if they've read an article before sharing) to encourage thoughtful engagement over impulsive sharing of potentially unverified content, as explored by MIT Technology Review.
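The "Serendipity by Design" idea can be sketched as a re-ranking step applied after engagement ranking. This is a minimal Python illustration under stated assumptions, not a proposal from any platform: `diversify_feed` and its `every_n` parameter are hypothetical, and the input list is assumed to have already been filtered for quality and credibility, which the sketch does not attempt. Labeling content with ideological leanings is also out of scope here.

```python
Post = tuple[str, str]  # (post_id, topic)


def diversify_feed(ranked: list[Post], usual_topics: set[str], slots: int,
                   every_n: int = 3) -> list[Post]:
    """Keep engagement order, but make every `every_n`-th slot an out-of-bubble item.

    Falls back to whichever pool is non-empty when the preferred one runs out.
    """
    inside = [post for post in ranked if post[1] in usual_topics]
    outside = [post for post in ranked if post[1] not in usual_topics]
    feed: list[Post] = []
    while len(feed) < slots and (inside or outside):
        wants_outside = (len(feed) + 1) % every_n == 0
        use_outside = (wants_outside and bool(outside)) or not inside
        feed.append((outside if use_outside else inside).pop(0))
    return feed


ranked = [("a1", "politics_a"), ("a2", "politics_a"), ("a3", "politics_a"),
          ("a4", "politics_a"), ("b1", "politics_b"), ("s1", "science")]
feed = diversify_feed(ranked, usual_topics={"politics_a"}, slots=6)
print([post_id for post_id, _ in feed])  # ['a1', 'a2', 'b1', 'a3', 'a4', 's1']
```

A pure engagement ranker would have pushed `b1` and `s1` to the bottom of this feed; reserving every third slot keeps most of the engagement ordering while guaranteeing some exposure outside the user's usual topics. The right ratio is a design trade-off the sketch does not settle.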

Content moderation is essential but complex. Platforms must invest significantly more in human moderators and AI tools to identify and address harmful misinformation, hate speech, and coordinated inauthentic behavior that thrives in echo chambers. This requires:

Fact-checking partnerships: Collaborating with independent fact-checking organizations to swiftly identify and label false content.

Clearer policies and enforcement: Establishing transparent community guidelines and consistently enforcing them, even against influential accounts, to build user trust and deter harmful content spread. However, critics argue this can lead to censorship, highlighting the tension between free speech and harmful content. Organizations like the Center for Countering Digital Hate advocate for stronger platform accountability.

Crucially, media literacy initiatives empower individuals to navigate the complex information landscape. These initiatives should be integrated into education systems and public awareness campaigns, teaching users to:

Identify bias: Understand how their own biases and algorithmic biases can shape their perceptions.

Evaluate sources: Critically assess the credibility, motives, and funding of information sources.

Seek diverse perspectives: Actively seek out information that challenges their existing views, rather than passively consuming what algorithms provide. Research from the News Literacy Project underscores the vital role of media literacy in fostering an informed citizenry.

Beyond these, more radical solutions are gaining traction. Some propose regulating platforms as public utilities to ensure neutrality and public interest mandates. Others suggest data ownership models, giving users more control over their personal data and how it's used by algorithms.

There are calls for interoperability between platforms to reduce their monopolistic power and encourage diverse information flows. Ultimately, addressing the "mind forgers" demands a societal commitment to a shared reality, prioritizing truth and critical discourse over engagement-driven isolation.

The path forward requires a collaborative effort from technology companies, governments, educators, and individual citizens to reconstruct the digital public square into a space that fosters connection and understanding, rather than fragmentation and distortion.

You may also like...

Super Eagles Fury! Coach Eric Chelle Slammed Over Shocking $130K Salary Demand!

)

Super Eagles head coach Eric Chelle's demands for a $130,000 monthly salary and extensive benefits have ignited a major ...

Premier League Immortal! James Milner Shatters Appearance Record, Klopp Hails Legend!

Football icon James Milner has surpassed Gareth Barry's Premier League appearance record, making his 654th outing at age...

Starfleet Shockwave: Fans Missed Key Detail in 'Deep Space Nine' Icon's 'Starfleet Academy' Return!

Starfleet Academy's latest episode features the long-awaited return of Jake Sisko, honoring his legendary father, Captai...

Rhaenyra's Destiny: 'House of the Dragon' Hints at Shocking Game of Thrones Finale Twist!

The 'House of the Dragon' Season 3 teaser hints at a dark path for Rhaenyra, suggesting she may descend into madness. He...

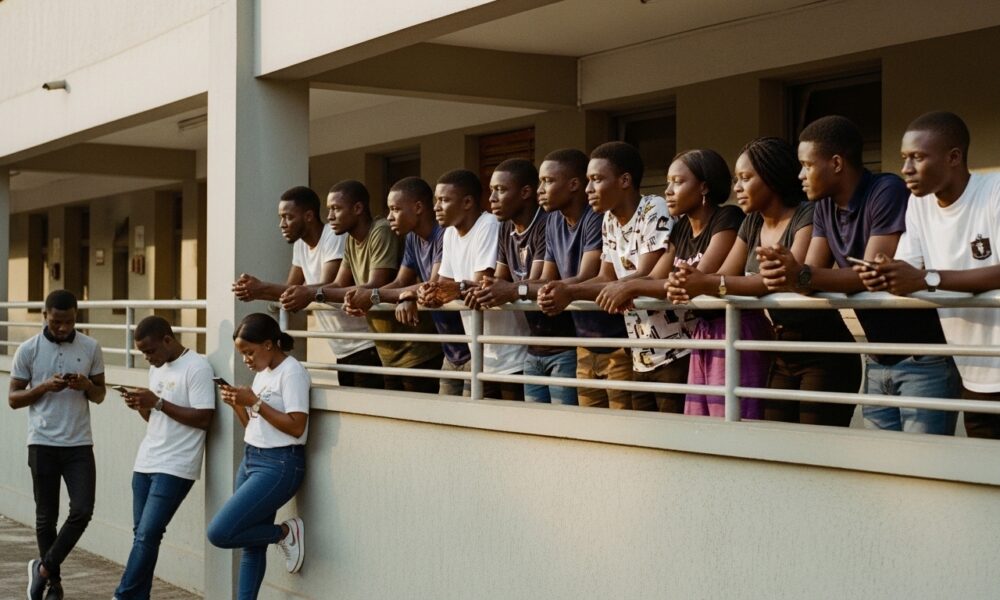

Amidah Lateef Unveils Shocking Truth About Nigerian University Hostel Crisis!

Many university students are forced to live off-campus due to limited hostel spaces, facing daily commutes, financial bu...

African Development Soars: Eswatini Hails Ethiopia's Ambitious Mega Projects

The Kingdom of Eswatini has lauded Ethiopia's significant strides in large-scale development projects, particularly high...

West African Tensions Mount: Ghana Drags Togo to Arbitration Over Maritime Borders

Ghana has initiated international arbitration under UNCLOS to settle its long-standing maritime boundary dispute with To...

Indian AI Arena Ignites: Sarvam Unleashes Indus AI Chat App in Fierce Market Battle

Sarvam, an Indian AI startup, has launched its Indus chat app, powered by its 105-billion-parameter large language model...