BMC Medical Ethics volume 26, Article number: 82 (2025)

Integrating artificial intelligence (AI), especially large language models (LLMs), into oncology has potential benefits, yet medical oncologists’ knowledge, attitudes, and ethical concerns remain unclear. Understanding these perspectives is particularly relevant in Türkiye, which has approximately 1340 practicing oncologists.

A cross-sectional, online survey was distributed via the Turkish Society of Medical Oncology’s channels from October 16 to November 27, 2024. Data on demographics, AI usage, self-assessed knowledge, attitudes, ethical/regulatory perceptions, and educational needs were collected. Quantitative analyses were performed using descriptive statistics and graphics were generated using R v.4.3.1, and qualitative analysis of open-ended responses was conducted manually.

Of 147 respondents (representing about 11% of Turkish oncologists), 77.5% reported prior AI use, mainly LLMs, yet only 9.5% had formal AI education. While most supported integrating AI into prognosis estimation, research, and decision support, concerns persisted regarding patient-physician relationships and social perception. Ethical reservations centered on patient management, scholarly writing, and research design. Over 79% deemed current regulations inadequate and advocated ethical audits, legal frameworks, and patient consent. Nearly all were willing to receive AI training, reflecting a substantial educational gap.

Turkish medical oncologists exhibit cautious optimism toward AI but highlight critical gaps in training, clear regulations, and ethical safeguards. Addressing these needs could guide responsible AI integration. Limitations include a single-country perspective. Further research is warranted to generalize findings and assess evolving attitudes as AI advances.

Not applicable due to cross-sectional survey design.

Artificial intelligence (AI) is rapidly reshaping various facets of healthcare, including oncology. By leveraging machine learning, deep learning, and large language models (LLMs), AI-driven tools hold promise for enhancing diagnostic accuracy, optimizing treatment planning, refining prognosis estimation, and accelerating research [1, 2]. However, the effective integration of AI into routine oncology practice requires not only robust technologies but also clinicians who are equipped with the requisite knowledge, confidence, and ethical awareness to navigate this evolving landscape [3].

Medical oncologists’ acceptance and appropriate use of AI hinge on several factors. These include adequate education, trust in AI outputs, understanding of algorithmic limitations, data privacy protections, and clearly defined accountability structures. While global interest in AI is burgeoning, little is known about oncologists’ baseline familiarity, attitudes, and concerns, particularly in countries with evolving healthcare landscapes. Türkiye, with approximately 1340 practicing medical oncologists, provides a pertinent context for exploring these issues. Insights gathered from their perspectives can inform targeted educational programs, policy frameworks, and best practices that ensure AI augments, rather than undermines, patient-centered oncology care.

This study aimed to assess Turkish medical oncologists’ experiences and perceptions regarding AI. Specifically, we sought to: (1) quantify their exposure to and familiarity with AI tools, particularly LLMs; (2) evaluate their attitudes toward AI’s role in clinical practice, research, and patient interactions; (3) identify their ethical and legal concerns; and (4) determine their educational and regulatory needs. By clarifying these dimensions, we can foster a more informed, responsible approach to implementing AI in oncology, ultimately contributing to safer, more effective patient care.

A cross-sectional survey design was employed. The target population comprised medical oncologists who were members of the Turkish Society of Medical Oncology. Eligible participants were medical oncology professionals in Türkiye, including residents and fellows who completed mandatory internal medicine training, as well as medical oncology specialists. With approximately 1340 medical oncologists nationwide, the sample of 147 participants represents roughly 11% of this population [4].

After a preliminary study with qualitative interviews, we developed a questionnaire through consultation with ethics board members and AI experts and a brief literature review using the search terms (“artificial intelligence” OR “AI”) AND (“ethics” OR “concerns”). The questionnaire encompassed: (1) demographics and professional background; (2) AI usage patterns and formal AI education; (3) self-assessed AI knowledge in domains such as machine learning, deep learning, and natural language processing; (4) attitudes toward AI in diagnosis, treatment planning, prognosis estimation, research, patient follow-up, and clinical decision support; (5) perceptions of AI’s impact on patient-physician relationships, healthcare access, policy development, workload, and job satisfaction; and (6) ethical and regulatory considerations, including perceived ethically concerning activities, current legal sufficiency, and suggested reforms. The qualitative pilot study found high interest in LLMs within AI domains, so some of the survey questions were specifically about LLMs. An English translation of the survey instrument is available in the supplementary appendix.

The survey was administered via the Microsoft Forms online platform (Microsoft Corp., Redmond, WA) from October 16 to November 27, 2024. Invitations were distributed through the Turkish Society of Medical Oncology’s social media accounts, instant messaging groups, and email network. Participation was voluntary and anonymous, and it began only after electronic informed consent.

Descriptive statistics (frequency, percentage, median, and interquartile range [IQR]) summarized quantitative data. Ordinal regression was used to evaluate the factors linked to knowledge levels, concerns, and attitudes. Post hoc calculations indicated 96% power with a 10% margin of error. Qualitative data from open-ended responses were analyzed manually, identifying recurring themes related to ethical concerns, data security, clinical integration, and educational gaps. All statistical analyses were performed, and all figures were generated, in R version 4.4 (R Foundation for Statistical Computing, Vienna, Austria).
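As a rough illustration of the sampling precision discussed above, the margin of error for a surveyed proportion can be sketched as follows. This is only a sketch under stated assumptions: a normal approximation, a worst-case proportion of 0.5, a 95% confidence level (z = 1.96), and a finite population correction for a population of about 1340. The function name and its parameters are illustrative, and the text does not state which formula was actually used for the post hoc calculation.

```python
import math


def margin_of_error(n, population=None, p=0.5, z=1.96):
    """Half-width of a normal-approximation confidence interval for a proportion.

    n          -- number of respondents
    population -- size of the finite population, or None to skip the correction
    p          -- assumed true proportion (0.5 is the worst case)
    z          -- critical value (1.96 corresponds to 95% confidence)
    """
    standard_error = math.sqrt(p * (1 - p) / n)
    if population is not None:
        # Finite population correction: sampling a sizeable share of a small
        # population reduces the sampling error.
        standard_error *= math.sqrt((population - n) / (population - 1))
    return z * standard_error


# Figures taken from the text: 147 respondents, about 1340 oncologists.
print(f"without correction: {margin_of_error(147):.3f}")
print(f"with correction:    {margin_of_error(147, population=1340):.3f}")
```

Under these assumptions the margin of error is about 8.1% without the correction and about 7.6% with it, both within the 10% margin quoted above.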

The study was approved by the institutional ethics committee (AUTF-KAEK 2024/635) and conducted in accordance with the Declaration of Helsinki. No personally identifying information was collected.

A total of 147 medical oncologists completed the survey, corresponding to approximately 11% of the estimated 1340 medical oncologists practicing in Türkiye [4]. The median age of participants was 39 years (IQR: 35–46), and 63.3% were male. Respondents had a median of 14 years (IQR: 10–22) of medical experience and a median of 5 years (IQR: 2–14) specifically in oncology. Nearly half (47.6%) practiced in university hospitals, followed by 31.3% in training and research hospitals, and the remainder in private or state settings (Table 1). In terms of academic rank, residents/fellows constituted 38.1%, specialists 22.4%, professors 21.1%, associate professors 16.3%, and assistant professors 2.0%. Respondents were distributed across various urban centers, including major cities such as Istanbul and Ankara, as well as smaller provinces, reflecting a broad regional representation of Türkiye’s oncology workforce.

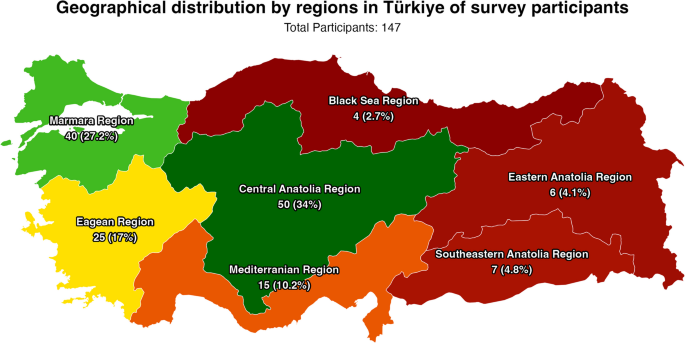

The largest share of participants completed the survey from the Central Anatolia Region of Türkiye (34.0%, n = 50), followed by the Marmara Region (27.2%, n = 40), the Aegean Region (17.0%, n = 25), and the Mediterranean Region (10.2%, n = 15). The distribution of participants on a regional map of Türkiye is presented in Fig. 1.

A majority (77.5%, n = 114) of oncologists reported prior use of at least one AI tool. Among these, ChatGPT and other GPT-based models were the most frequently used (77.5%, n = 114), indicating that LLM interfaces had already penetrated clinical professionals’ workflow to some extent. Other tools such as Google Gemini (17.0%, n = 25) and Microsoft Bing (10.9%, n = 16) showed more limited utilization, and just a small fraction had tried less common platforms like Anthropic Claude, Meta Llama-3, or Hugging Face. Despite this relatively high usage rate of general AI tools, formal AI education was scarce: only 9.5% (n = 14) of respondents had received some level of formal AI training, and this was primarily basic-level. Nearly all (94.6%, n = 139) expressed a desire for more education, suggesting that their forays into AI usage had been largely self-directed and that there was a perceived need for structured, professionally guided learning.

Regarding sources of AI knowledge, 38.8% (n = 57) reported not using any resource, underscoring a gap in continuing education. Among those who did seek information, the most common channels were colleagues (26.5%, n = 39) and academic publications (23.1%), followed by online courses/websites (21.8%, n = 32), popular science publications (19.7%, n = 29), and professional conferences/workshops (18.4%, n = 27). This pattern suggests that while some clinicians attempt to inform themselves about AI through peer discussions or scientific literature, many remain unconnected to formalized educational pathways or comprehensive training programs.

Participants generally rated themselves as having limited knowledge across key AI domains (Fig. 2A). More than half reported having “no knowledge” or only “some knowledge” in areas such as machine learning (86.4%, n = 127, combined) and deep learning (89.1%, n = 131, combined). Even fundamental concepts like LLMs and generative AI were unfamiliar to a substantial portion of respondents. For instance, nearly half (47.6%, n = 70) had no knowledge of LLMs, and two-thirds (66.0%, n = 97) had no knowledge of generative AI. Similar trends were observed for natural language processing and advanced statistical analyses, reflecting a widespread lack of confidence and familiarity with the technical underpinnings of AI beyond superficial usage.

Fig. 2. Overview of Oncologists’ AI Familiarity, Attitudes, and Perceived Impact. (A) Distribution of participants’ self-assessed AI knowledge, (B) attitudes toward AI in various medical practice areas, and (C) insights into AI’s broader impact on medical practice

When asked to evaluate AI’s role in various clinical tasks (Fig. 2B), respondents generally displayed cautious optimism. Prognosis estimation stood out as one of the areas where AI received the strongest endorsement, with a clear majority rating it as “positive” or “very positive.” A similar pattern emerged for medical research, where nearly three-quarters of respondents recognized AI’s potential in the academic field. In contrast, opinions on treatment planning and patient follow-up were more mixed, with a considerable proportion adopting a neutral stance. Diagnosis and clinical decision support still garnered predominantly positive views, though some participants expressed reservations, possibly reflecting concerns about reliability, validation, and the interpretability of AI-driven recommendations.

Broadening the perspective, Fig. 2C illustrates how participants viewed AI’s impact on aspects like patient-physician relationships, social perception, and health policy. While most believed AI could improve overall medical practices and potentially reduce workload, many worried it might affect the quality of personal interactions with patients or shape public trust in uncertain ways. Approximately half recognized potential benefits for healthcare access, but some remained neutral or skeptical, perhaps concerned that technology might not equally benefit all patient populations or could inadvertently exacerbate existing disparities.

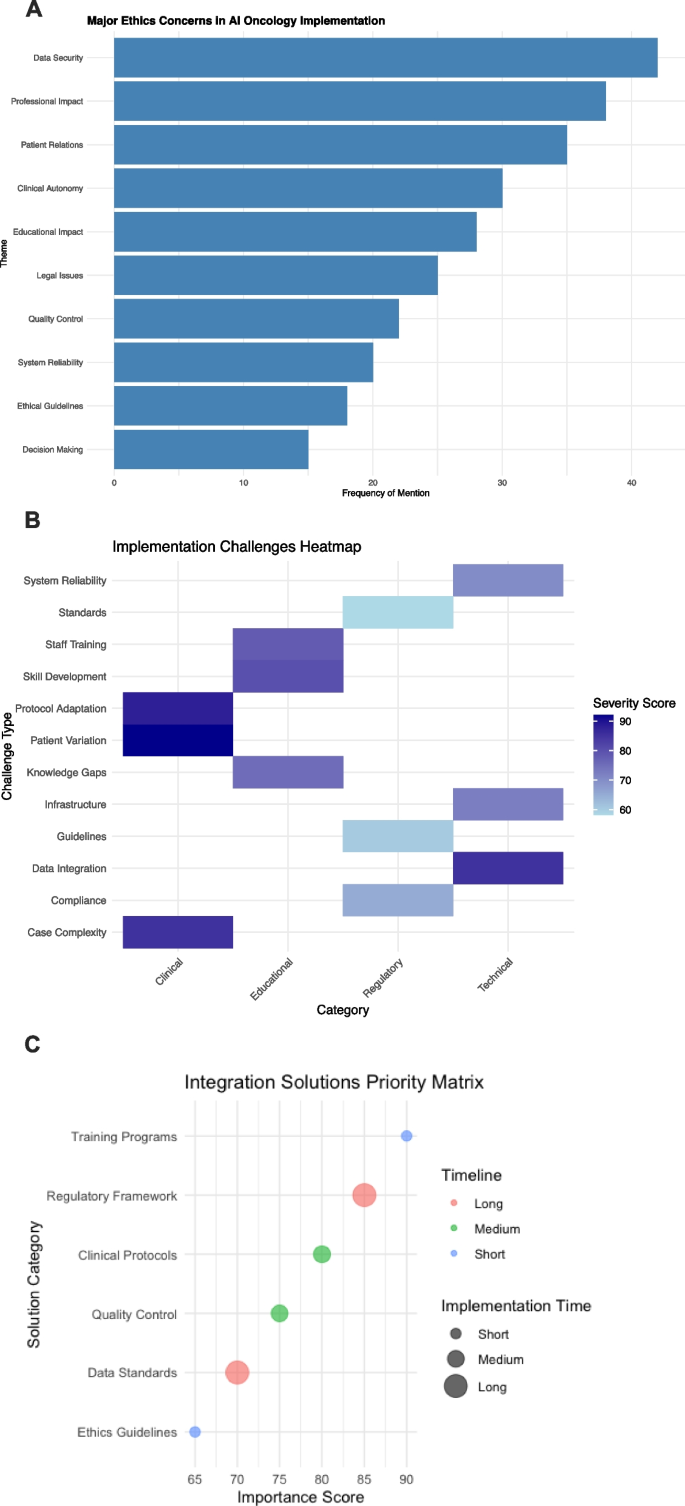

Tables 2 and 3, along with Figs. 3A–C, summarize participants’ ethical and legal considerations. Patient management (57.8%, n = 85), article or presentation writing (51.0%, n = 75), and study design (25.2%, n = 37) emerged as key activities where the integration of AI was viewed as ethically questionable. Respondents feared that relying on AI for sensitive clinical decisions or academic tasks could compromise patient safety, authenticity, or scientific integrity. A subset of respondents reported utilizing AI in certain domains, including 13.6% (n = 20) for article and presentation writing, and 11.6% (n = 17) for patient management, despite acknowledging potential ethical issues in the preceding question. However, only about half of the respondents who admitted using AI for patient management identified this as an ethical concern. This discrepancy suggests that while oncologists harbor concerns, convenience or lack of guidance may still drive them to experiment with AI applications.

Fig. 3. Ethical Considerations, Implementation Barriers, and Strategic Solutions for AI Integration. (A) Frequency distribution of major ethical concerns, (B) heatmap of implementation challenges across technical, educational, clinical, and regulatory categories, and (C) priority matrix of proposed integration solutions including training and regulatory frameworks. The implementation time and timeline were extracted from the open-ended questions. Timeline: the estimated time needed for implementation; Implementation time: the urgency of implementation. The timeline and implementation time are fully correlated (R² = 1.0)

Moreover, nearly 82% of participants supported using AI in medical practice, yet 79.6% (n = 117) did not find current legal regulations satisfactory. Over two-thirds advocated for stricter legal frameworks and ethical audits. Patient consent was highlighted by 61.9% (n = 91) as a critical step, implying that clinicians want transparent processes that safeguard patient rights and maintain trust. Liability in the event of AI-driven errors also remained contentious: 68.0% (n = 100) held software developers partially responsible, and 61.2% (n = 90) also implicated physicians. This suggests a shared accountability model might be needed, involving multiple stakeholders across the healthcare and technology sectors.

To address these gaps, respondents proposed various solutions. Establishing national and international standards (82.3%, n = 121) and enacting new laws (59.2%, n = 87) were seen as pivotal. More than half favored creating dedicated institutions for AI oversight (53.7%, n = 79) and integrating informed consent clauses related to AI use (53.1%, n = 78) into patient forms. These collective views point to a strong desire among oncologists for a structured, legally sound environment in which AI tools are developed, tested, and implemented responsibly.

For knowledge levels, the ordinal regression model identified formal AI education as the sole significant predictor (β = 30.534, SE = 0.6404, p < 0.001). In contrast, other predictors such as age (β = −0.1835, p = 0.159), years as physician (β = 0.0936, p = 0.425), years in oncology (β = 0.0270, p = 0.719), and academic rank showed no significant associations with knowledge levels in the ordinal model.

The ordinal regression for concern levels revealed no significant predictors: neither demographic factors, professional experience, academic status, AI education, nor current knowledge levels were associated with the ordinal progression of ethical and practical concerns (all p > 0.05).

For attitudes toward AI integration, the ordinal regression identified two significant predictors. Those willing to receive AI education showed progression toward more positive attitudes (β = 13.143, SE = 0.6688, p = 0.049), and actual receipt of AI education also predicted progression toward more positive attitudes (β = 12.928, SE = 0.6565, p = 0.049). Additionally, higher knowledge levels showed a trend toward more positive attitudes in the ordinal model, although this was not significant (β = 0.3899, SE = 0.2009, p = 0.052).
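To show how a coefficient, its standard error, and its p-value relate in such a model, the sketch below recomputes the p-value for the knowledge-level trend from its reported coefficient and standard error. It assumes a two-sided Wald z-test and a proportional-odds reading of the coefficient (odds ratio = exp(β)); the text does not state which test the software applied, and the function names are illustrative.

```python
import math


def wald_test(beta, se):
    """Return the Wald z statistic and its two-sided p-value."""
    z = beta / se
    # For a standard normal Z: P(|Z| > |z|) = 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def odds_ratio(beta):
    """Odds ratio implied by a log-odds coefficient (proportional-odds assumption)."""
    return math.exp(beta)


# Knowledge-level coefficient from the attitudes model in the text.
z, p = wald_test(beta=0.3899, se=0.2009)
print(f"z = {z:.2f}, p = {p:.3f}, odds ratio = {odds_ratio(0.3899):.2f}")
```

Under these assumptions, the calculation gives z ≈ 1.94 and p ≈ 0.052, in line with the value reported for the knowledge-level trend, and an odds ratio of about 1.48 per one-level increase in knowledge.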

Table 4 presents the ordinal regression analyses examining predictors of AI knowledge levels, concerns, and attitudes among Turkish medical oncologists.

The open-ended responses, analyzed qualitatively, revealed several recurring themes reinforcing the quantitative findings. Participants frequently stressed the importance of human oversight, emphasizing that AI should complement rather than replace clinical expertise, judgment, and empathy. Data security and privacy emerged as central concerns, with some respondents worrying that insufficient safeguards could lead to breaches of patient confidentiality. Others highlighted the challenge of ensuring that AI tools maintain cultural and social sensitivity in diverse patient populations. Calls for incremental, well-regulated implementation of AI were common, as was the suggestion that education and ongoing professional development would be essential to ensuring clinicians use AI effectively and ethically.

In essence, while there is broad acknowledgment that AI holds promise for enhancing oncology practice, respondents also recognize the need for clear ethical standards, solid regulatory frameworks, comprehensive training, and thoughtful integration strategies.

This national survey of Turkish medical oncologists shows cautious enthusiasm for integrating AI, particularly generative AI and LLMs, into oncology practice. The survey emphasized LLMs because our pilot study showed particular interest in them alongside non-generative AI applications in decision-making. While participants acknowledge AI’s potential to enhance decision-making, research, and treatment optimization, they highlight substantial unmet needs in education, ethics, and regulation.

The high percentage of oncologists who have used AI tools—particularly LLMs—illustrates growing interest. Yet the near absence of formal training and the widespread desire for education suggest that professional societies, universities, and regulatory bodies must develop tailored programs. Such training could focus on critical interpretation of AI outputs, data governance, algorithmic bias, and validation processes to ensure that clinicians remain informed, confident users of these tools.

Many participants expressed positive attitudes toward AI’s impact on prognosis estimation and research, which may be attributable to its usefulness for hypothesis generation, literature synthesis, and data analysis. This aligns with global trends, where AI excels at processing vast datasets to identify patterns and guide evidence-based practice [5, 6]. Concerns regarding patient-physician relationships highlight the necessity of preserving a humanistic approach. Additionally, the potential decrease in job satisfaction may be attributed to cultural factors specific to Türkiye, which warrant further investigation. AI should function as a supportive tool rather than a substitute for the empathy, communication, and clinical judgment that are essential to quality oncology care.

Ethical and regulatory challenges emerged prominently. Respondents identified ethically concerning activities involving patient management and academic work, suggesting that misuse or misinterpretation of AI outputs could compromise patient safety and scientific integrity. The main concerns are consistent with recent literature, yet major large language models such as GPT include ethical reasoning and information about the risks of AI use in clinical practice, which helps to share awareness of these risks [7,8,9,10,11]. Also, most of the literature on AI ethics emphasizes publication ethics, a result of recent developments in AI practice and its increasing use [12, 13]. With most participants deeming current legal frameworks inadequate, developing robust standards, clear guidelines, and oversight institutions is essential. Türkiye’s experience, though specific to one country, may reflect broader global needs. International collaborations and harmonized regulations can mitigate uncertainty, clarify liability, and ensure that advances in AI align with ethical principles and patient welfare, as recent literature also suggests for legal principles [14,15,16,17].

The ordinal regression findings demonstrate that both wanting AI education and having received it strongly predict positive attitudes toward AI in oncology. Most strikingly, formal AI education increased knowledge levels dramatically, suggesting that even brief training can transform oncologists from novices to knowledgeable users. While education successfully improves both knowledge and attitudes, it does not reduce concerns about AI implementation, which remain consistent across all oncologist groups regardless of experience or training. This indicates that building AI competency through education is essential for acceptance, but addressing ethical and practical concerns will require additional strategies beyond individual training programs.

In open-ended questions, participants primarily mentioned data security and AI’s potential impact on professional practice, such as job loss and reputational harm, which could be due to fear and anxiety about the unknown; some studies from Türkiye are consistent with our results [18,19,20]. Several respondents expressed concerns regarding potential ethical issues, particularly the illegal trading of data and the lack of confidentiality as major problems. A few participants reported utilizing non-generative AI technologies, such as radiomics systems, whereas the majority did not. This outcome may be attributed to the widespread prevalence of LLMs in practical applications. The open-ended questions revealed that participants predominantly seek formal education on AI in the immediate future, while recommending postponement of clinical application. The qualitative analyses indicated that most participants have negative perceptions and future expectations regarding the impact of AI on oncology. Additionally, the majority of participants demonstrated a lack of awareness about non-generative AI systems in oncology practice, although some expressed a desire for widely available AI-augmented risk models.

In oncology research, while many studies focus on large language models (LLMs), others utilize methods such as big data analysis, imaging modalities, and genomic exploration. Advances in machine learning techniques, risk modeling, reasoning, radiogenomics, the availability of extensive datasets, AI-augmented data extraction, and various bioinformatics approaches signify notable progress for oncology practice. These innovations have the potential to greatly enhance cancer care. Nevertheless, it is imperative to address ethical considerations. As the field continues to develop, additional concerns are likely to emerge.

This study’s limitations include its single-country focus and reliance on self-reported data. While Türkiye’s approximately 1340 oncologists provide a meaningful context, findings may not generalize to other countries with different healthcare systems or regulatory environments. The survey did not ask about the purpose for which particular AI tools were used, making the results less applicable to all groups. Nonetheless, these insights can inform stakeholders worldwide about common concerns and aspirations surrounding AI in oncology. Additionally, while our survey instrument was developed through expert consultation and preliminary qualitative interviews, we did not conduct formal psychometric validation prior to data collection, which may limit the reliability of the instrument.

Future research should explore qualitative interviews, focus groups, and longitudinal assessments to capture evolving attitudes and the effects of educational interventions or policy changes over time. Comparative studies across multiple countries and regions would also help clarify cultural and systemic factors influencing AI adoption in oncology.

Turkish medical oncologists recognize AI’s potential to enhance oncology practice but emphasize critical gaps in education, ethical standards, and regulatory frameworks. Their cautious optimism signals a need for proactive measures—comprehensive training, transparent policies, robust oversight, and patient-centered guidelines—that ensure AI augments clinical expertise without eroding trust or professional integrity. Although limited by a single-country perspective, these findings offer valuable lessons for global efforts to integrate AI responsibly into cancer care.

The survey instrument is provided in the supplementary appendix. The collected data will be extracted and translated into English upon reasonable request.

- AI: Artificial intelligence
- GPT: Generative pre-trained transformer
- IQR: Interquartile range
- LLM: Large language model

Not applicable

The study was not funded by any organization or corporation.

The institutional ethics committee approved the study protocol (Ankara University Faculty of Medicine, Ethics Board of Human Research, approval number: AUTF-KAEK 2024/635, approval date: 10.10.2024). Prior to completing the survey, all participants provided informed consent to participate in the study. The research was conducted in compliance with the Declaration of Helsinki.

Not applicable.

The authors declare no competing interests.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

Erdat, E.C., Çay Şenler, F. Turkish medical oncologists’ perspectives on integrating artificial intelligence: knowledge, attitudes, and ethical considerations. BMC Med Ethics 26, 82 (2025). https://doi.org/10.1186/s12910-025-01249-7