“Context engineering” means providing an AI (like an LLM) with all the information and tools it needs to successfully complete a task – not just a cleverly worded prompt. It’s the evolution of prompt engineering, reflecting a broader, more system-level approach.

“Prompt engineering” became a buzzword essentially meaning the skill of phrasing inputs to get better outputs. It taught us to “program in prose” with clever one-liners. But outside the AI community, many took prompt engineering to mean just typing fancy requests into a chatbot. The term never fully conveyed the real sophistication involved in using LLMs effectively.

As applications grew more complex, the limitations of focusing only on a single prompt became obvious. One analysis quipped: “Prompt engineering walked so context engineering could run.” In other words, a witty one-off prompt might have wowed us in demos, but building reliable, production-grade LLM systems demanded something more comprehensive.

This realization is why our field is coalescing around “context engineering” as a better descriptor for the craft of getting great results from AI. Context engineering means constructing the entire context window an LLM sees – not just a short instruction, but all the relevant background info, examples, and guidance needed for the task.

The phrase was popularized by developers like Shopify’s CEO Tobi Lütke and AI leader Andrej Karpathy in mid-2025.

“I really like the term ‘context engineering’ over prompt engineering,” wrote Tobi. “It describes the core skill better: the art of providing all the context for the task to be plausibly solvable by the LLM.” Karpathy emphatically agreed, noting that people associate prompts with short instructions, whereas in every serious LLM application, context engineering is the delicate art and science of filling the context window with just the right information for each step.

In other words, real-world LLM apps don’t succeed by luck or one-shot prompts – they succeed by carefully assembling context around the model’s queries.

The change in terminology reflects an evolution in approach. If prompt engineering was about coming up with a magical sentence, context engineering is about assembling a complete information environment for the AI. It’s a structural shift: prompt engineering ends once you craft a good prompt, whereas context engineering begins with designing whole systems that bring in memory, knowledge, tools, and data in an organized way.

As Karpathy explained, doing this well involves everything from task descriptions and explanations, to providing few-shot examples, retrieved facts (RAG), possibly multimodal data, relevant tools, state and history, and careful compacting of all that into a limited window. No wonder Karpathy calls it both a science and an art.

The term is catching on because it intuitively captures what we actually do when building LLM solutions. “Prompt” sounds like a single short query; “context” implies a richer information state we prepare for the AI.

Semantics aside, why does this shift matter? Because it marks a maturing of our mindset for AI development. We’ve learned that results depend less on a clever prompt than on the context we build for the AI. A one-off prompt might get a cool demo, but for robust solutions you need to control what the model “knows” and “sees” at each step. It often means retrieving relevant documents, summarizing history, injecting structured data, or providing tools – whatever it takes so the model isn’t guessing in the dark. The result is we no longer think of prompts as one-off instructions we hope the AI can interpret. We think in terms of context: all the pieces of information and interaction that set the AI up for success.

To illustrate, consider the difference in perspective. Prompt engineering was often an exercise in clever wording (“Maybe if I phrase it this way, the LLM will do what I want”). Context engineering, by contrast, feels more like traditional engineering: What inputs (data, examples, state) does this system need? How do I get those and feed them in? In what format? At what time? We’ve essentially gone from squeezing performance out of a single prompt to designing LLM-powered systems.

A useful mental model (suggested by Andrej Karpathy and others) is to think of an LLM like a CPU, and its context window (the text input it sees at once) as the RAM or working memory. As an engineer, your job is akin to an operating system: load that working memory with just the right code and data for the task. In practice, this context can come from many sources: the user’s query, system instructions, retrieved knowledge from databases or documentation, outputs from other tools, and summaries of prior interactions. Context engineering is about orchestrating all these pieces into the prompt that the model ultimately sees. It’s not a static prompt but a dynamic assembly of information at runtime.

Illustration: multiple sources of information are composed into an LLM’s context window (its “working memory”). The context engineer’s goal is to fill that window with the right information, in the right format, so the model can accomplish the task effectively.
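To make the “dynamic assembly” idea concrete, here is a minimal Python sketch of composing a context from several sources under a size budget. Everything in it is an assumption for illustration: the `ContextPart` type, the `priority` field, the section labels, and the word-count token estimate are all hypothetical, and a real system would use the model’s own tokenizer.

```python
from dataclasses import dataclass


@dataclass
class ContextPart:
    label: str      # hypothetical section name, e.g. "system" or "retrieved_docs"
    text: str
    priority: int   # lower number = more important, considered first


def estimate_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: roughly one token per word.
    return len(text.split())


def assemble_context(parts: list[ContextPart], budget: int) -> str:
    """Keep the most important parts that fit the budget, in their original order."""
    kept: set[int] = set()
    used = 0
    # Decide what fits, most important first.
    for index in sorted(range(len(parts)), key=lambda i: parts[i].priority):
        cost = estimate_tokens(parts[index].text)
        if used + cost <= budget:
            kept.add(index)
            used += cost
    # Emit the kept parts in the order the caller listed them.
    return "\n\n".join(
        f"## {parts[i].label}\n{parts[i].text}"
        for i in range(len(parts)) if i in kept
    )


parts = [
    ContextPart("system", "You are a careful coding assistant.", 0),
    ContextPart("history_summary", "User is migrating a Flask app to FastAPI.", 2),
    ContextPart("retrieved_docs", "FastAPI routes are declared with decorators such as @app.get.", 1),
    ContextPart("user_query", "How do I convert this route?", 0),
]
context = assemble_context(parts, budget=25)
print(context)
```

With this deliberately tight budget the lowest-priority part (the history summary) is left out, while the instructions, the retrieved document, and the query survive. The design choice worth noticing is that selection happens by importance but rendering happens in a stable order, so the model sees a predictable layout.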

Let’s break down what this involves:

Above all, context engineering is about setting the AI up for success.

Remember, an LLM is powerful but not psychic – it can only base its answers on what’s in its input plus what it learned during training. If it fails or hallucinates, often the root cause is that we didn’t give it the right context, or we gave it poorly structured context. When an LLM “agent” misbehaves, usually “the appropriate context, instructions and tools have not been communicated to the model.” Garbage in, garbage out. Conversely, if you do supply all the relevant info and clear guidance, the model’s performance improves dramatically.

Now, concretely, how do we ensure we’re giving the AI everything it needs? Here are some pragmatic tips that I’ve found useful when building AI coding assistants and other LLM apps:

Remember the golden rule: The quality of output is directly proportional to the quality and relevance of the context you provide. Too little context (or missing pieces) and the AI will fill gaps with guesses (often incorrect). Irrelevant or noisy context can be just as bad, leading the model down the wrong path. So our job as context engineers is to feed the model exactly what it needs and nothing it doesn’t.

Let's be direct about the criticisms. Many experienced developers see "context engineering" as either rebranded prompt engineering or, worse, pseudoscientific buzzword creation. These concerns aren't unfounded.

Traditional prompt engineering focuses on the instructions you give an LLM. Context engineering encompasses the entire information ecosystem: dynamic data retrieval, memory management, tool orchestration, and state maintenance across multi-turn interactions.

Much of current AI work lacks the rigor we expect from engineering disciplines. There's too much trial-and-error, not enough measurement, and insufficient systematic methodology.

Let's be honest: even with perfect context engineering, LLMs still hallucinate, make logical errors, and fail at complex reasoning. Context engineering isn't a silver bullet - it's damage control and optimization within current constraints.

As Karpathy described, context engineering is a delicate mix of science and art.

The “science” part involves following certain principles and techniques to systematically improve performance. For example: if you’re doing code generation, it’s almost scientific that you should include relevant code and error messages; if you’re doing question-answering, it’s logical to retrieve supporting documents and provide them to the model. There are established methods like few-shot prompting, retrieval-augmented generation (RAG), and chain-of-thought prompting that we know (from research and trial) can boost results. There’s also a science to respecting the model’s constraints – every model has a context length limit, and overstuffing that window can not only increase latency/cost but potentially degrade the quality if the important pieces get lost in the noise.
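As a small illustration of one of these methods, here is a sketch of a few-shot prompt builder in Python. The function name `build_few_shot_prompt` and the `Input:`/`Output:` layout are my own assumptions, not a standard; different models may respond better to different formats.

```python
def build_few_shot_prompt(instruction: str,
                          examples: list[tuple[str, str]],
                          query: str) -> str:
    """Render an instruction, worked examples, and the new input as one prompt."""
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines += [f"Input: {example_input}", f"Output: {example_output}", ""]
    # End on an open "Output:" so the model continues the established pattern.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("Loved it, would buy again.", "positive"),
     ("Broke after two days.", "negative")],
    "Arrived late but works perfectly.",
)
print(prompt)
```

Keeping the examples as data rather than hard-coding them into a string makes it easier to swap, reorder, or trim them when the context budget gets tight.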

Karpathy summed it up well: “Too little or of the wrong form and the LLM doesn’t have the right context for optimal performance. Too much or too irrelevant and the LLM costs might go up and performance might come down.”

So the science is in techniques for selecting, pruning, and formatting context optimally. For instance, you can use embeddings to find the most relevant docs to include (so you’re not inserting unrelated text), or compress long histories into summaries. Researchers have even catalogued failure modes of long contexts – things like context poisoning (where an earlier hallucination in the context leads to further errors) or context distraction (where too much extraneous detail causes the model to lose focus). Knowing these pitfalls, a good engineer will curate the context carefully.
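Here is a rough sketch of the “select only the most relevant docs” step. To stay self-contained it uses word-count vectors and cosine similarity as a toy stand-in for real embeddings; the function names, the `min_score` cutoff, and the sample documents are all assumptions for illustration. In practice you would swap `bag_of_words` for calls to an embedding model.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    # Toy stand-in for an embedding model: lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: str, docs: list[str], k: int = 2, min_score: float = 0.1) -> list[str]:
    """Return up to k docs most similar to the query, dropping weak matches."""
    query_vec = bag_of_words(query)
    scored = [(cosine(query_vec, bag_of_words(doc)), doc) for doc in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score >= min_score]


docs = [
    "To reset your password, open Settings and choose Reset Password.",
    "Our office is closed on public holidays.",
    "Password rules: at least 12 characters and one symbol.",
]
selected = top_k("How do I reset my password?", docs)
print(selected)
```

The similarity floor matters as much as the ranking: without it, a top-k search will happily return unrelated text whenever nothing relevant exists, which is exactly the kind of noise that distracts the model.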

Then there’s the “art” side – the intuition and creativity born of experience.

This is about understanding LLM quirks and subtle behaviors. Think of it like a seasoned programmer who “just knows” how to structure code for readability: an experienced context engineer develops a feel for how to structure a prompt for a given model. For example, you might sense that one model tends to do better if you first outline a solution approach before diving into specifics, so you include an initial step like “Let’s think step by step…” in the prompt. Or you notice that the model often misunderstands a particular term in your domain, so you preemptively clarify it in the context. These aren’t in a manual – you learn them by observing model outputs and iterating. Careful prompt wording still matters here, but now it’s in service of the larger context. It’s similar to software design patterns: there’s science in understanding common solutions, but art in knowing when and how to apply them.

Let’s explore a few common strategies and patterns context engineers use to craft effective contexts:

The impact of these techniques is huge. When you see an impressive LLM demo solving a complex task (say, debugging code or planning a multi-step process), you can bet it wasn’t just a single clever prompt behind the scenes. There was a pipeline of context assembly enabling it.

For instance, an AI pair programmer might implement a workflow like:

Each step has carefully engineered context: the search results, the test outputs, etc., are each fed into the model in a controlled way. It’s a far cry from “just prompt an LLM to fix my bug” and hoping for the best.

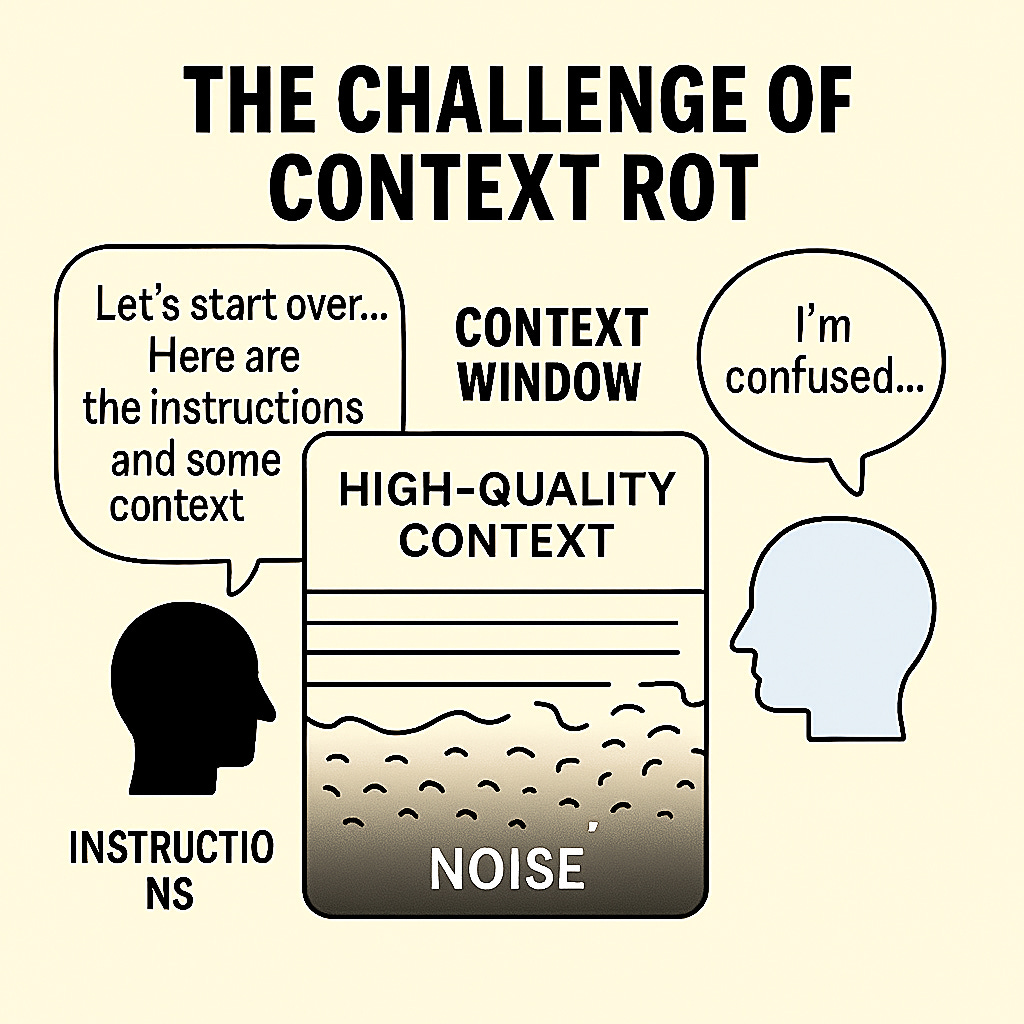

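As we get better at assembling rich context, we run into a new problem: context can actually poison itself over time. This phenomenon, aptly termed "context rot" by developer Workaccount2 on Hacker News, describes how the quality of a context degrades as a conversation grows longer and accumulates distractions, dead ends, and low-quality information.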

The pattern is frustratingly common: you start a session with a well-crafted context and clear instructions. The AI performs beautifully at first. But as the conversation continues - especially if there are false starts, debugging attempts, or exploratory rabbit holes - the context window fills with increasingly noisy information. The model's responses gradually become less accurate, more confused, or start hallucinating.

Why does this happen? Context windows aren't just storage - they're the model's working memory. When that memory gets cluttered with failed attempts, contradictory information, or tangential discussions, it's like trying to work at a desk covered in old drafts and unrelated papers. The model struggles to identify what's currently relevant versus what's historical noise. Earlier mistakes in the conversation can compound, creating a feedback loop where the model references its own poor outputs and spirals further off track.

This is especially problematic in iterative workflows - exactly the kind of complex tasks where context engineering shines. Debugging sessions, code refactoring, document editing, or research projects naturally involve false starts and course corrections. But each failed attempt leaves traces in the context that can interfere with subsequent reasoning.

Practical strategies for managing context rot include:

This challenge also highlights why "just dump everything into the context" isn't a viable long-term strategy. Like good software architecture, good context engineering requires deliberate information management - deciding not just what to include, but when to exclude, summarize, or refresh.
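One way to act on "exclude, summarize, or refresh" is to compact the conversation history before each model call. The sketch below is only one possible approach under my own assumptions: the `Turn` type, the `dead_end` flag, and the placeholder `naive_summary` function are hypothetical, and a real system would more likely ask a model to write the summary.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Turn:
    role: str
    text: str
    dead_end: bool = False  # hypothetical flag, set when an attempt was abandoned


def compact_history(turns: list[Turn], keep_recent: int,
                    summarize: Callable[[list[Turn]], str]) -> list[Turn]:
    """Drop abandoned attempts, summarize older turns, keep recent ones verbatim."""
    useful = [turn for turn in turns if not turn.dead_end]
    if len(useful) <= keep_recent:
        return useful
    split = len(useful) - keep_recent
    older, recent = useful[:split], useful[split:]
    summary = Turn("system", "Summary of earlier conversation: " + summarize(older))
    return [summary] + recent


def naive_summary(turns: list[Turn]) -> str:
    # Placeholder: truncates each turn instead of truly summarizing it.
    return " | ".join(turn.text[:40] for turn in turns)


turns = [
    Turn("user", "The login test fails with a 500 error."),
    Turn("assistant", "Try clearing the cache.", dead_end=True),
    Turn("user", "That did not help.", dead_end=True),
    Turn("assistant", "The stack trace points at a missing env var."),
    Turn("user", "Setting SECRET_KEY fixed it. Now add a regression test."),
]
compacted = compact_history(turns, keep_recent=2, summarize=naive_summary)
for turn in compacted:
    print(f"{turn.role}: {turn.text}")
```

Here the failed cache-clearing detour disappears entirely, the opening problem statement is folded into a summary line, and the two most recent useful turns are kept word for word. Deciding what counts as a dead end is the hard part, and it is likely to need human or model judgment rather than a simple flag.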

In Karpathy’s words, context engineering is “one small piece of an emerging thick layer of non-trivial software” that powers real LLM apps. So while we’ve focused on how to craft good context, it’s important to see where that fits in the overall architecture.

A production-grade LLM system typically has to handle many concerns beyond just prompting, for example:

We’re really talking about a new kind of application architecture. It’s one where the core logic involves managing information (context) and adapting it through a series of AI interactions, rather than just running deterministic functions. Karpathy listed elements like control flows, model dispatch, memory management, tool use, verification steps, etc., on top of context filling. All together, they form what he jokingly calls “an emerging thick layer” for AI apps – thick because it’s doing a lot! When we build these systems, we’re essentially writing meta-programs: programs that choreograph another “program” (the AI’s output) to solve a task.

For us software engineers, this is both exciting and challenging. It’s exciting because it opens capabilities we didn’t have – e.g., building an assistant that can handle natural language, code, and external actions seamlessly. It’s challenging because many of the techniques are new and still in flux. We have to think about things like prompt versioning, AI reliability, and ethical output filtering, which weren’t standard parts of app development before. In this setting, context engineering lies at the core of the system: if you can’t get the right information into the model at the right time, nothing else will save your app. But as we see, even perfect context alone isn’t enough; you need all the supporting structure around it.

The takeaway is that we’re moving from prompt design to system design. Context engineering is a core part of that system design, but it lives alongside many other components.

By mastering the assembly of complete context (and coupling it with solid testing), we can increase the chances of getting the best output from AI models.

For experienced engineers, much of this paradigm is familiar at its core – it’s about good software practices – but applied in a new domain. Think about it:

In embracing context engineering, you’re essentially saying: I, the developer, am responsible for what the AI does. It’s not a mysterious oracle; it’s a component I need to configure and drive with the right data and rules.

This mindset shift is empowering. It means we don’t have to treat the AI as unpredictable magic – we can tame it with solid engineering techniques (plus a bit of creative prompt artistry).

Practically, how can you adopt this context-centric approach in your work?

Context engineering isn’t just about machine-machine info; it’s ultimately about solving a user’s problem. Often, the user can provide context if asked the right way. Think about UX designs where the AI asks clarifying questions or where the user can provide extra details to refine the context (like attaching a file, or selecting which codebase section is relevant). The term “AI-assisted” goes both ways – AI assists user, but user can assist AI by supplying context. A well-designed system facilitates that. For example, if an AI answer is wrong, let the user correct it and feed that correction back into context for next time.
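As a sketch of that last idea, here is one way user corrections might be stored and rendered back into the context for later requests. The `CorrectionLog` class, its fields, and the output format are hypothetical names of my own, and a real system would probably also filter corrections by relevance to the current question.

```python
from dataclasses import dataclass


@dataclass
class Correction:
    question: str
    wrong_answer: str
    corrected_answer: str


class CorrectionLog:
    """Keeps the most recent user corrections so they can be re-injected as context."""

    def __init__(self, max_items: int = 5) -> None:
        if max_items < 1:
            raise ValueError("max_items must be at least 1")
        self.max_items = max_items
        self._items: list[Correction] = []

    def record(self, question: str, wrong_answer: str, corrected_answer: str) -> None:
        self._items.append(Correction(question, wrong_answer, corrected_answer))
        # Drop the oldest entries once the log exceeds its cap.
        self._items = self._items[-self.max_items:]

    def as_context(self) -> str:
        if not self._items:
            return ""
        lines = ["Corrections the user has made to earlier answers:"]
        for item in self._items:
            lines.append(
                f"- Q: {item.question} | Wrong: {item.wrong_answer} "
                f"| Correct: {item.corrected_answer}"
            )
        return "\n".join(lines)


log = CorrectionLog(max_items=2)
log.record("Which branch do we deploy from?", "main", "release")
log.record("Which Python version do we target?", "3.8", "3.11")
log.record("Which test runner do we use?", "unittest", "pytest")
print(log.as_context())
```

The cap is there on purpose: an unbounded correction log would itself become noise in the context over time.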

Make context engineering a shared discipline. In code reviews, start reviewing prompts and context logic too (“Is this retrieval grabbing the right docs? Is this prompt section clear and unambiguous?”). If you’re a tech lead, encourage team members to surface issues with AI outputs and brainstorm how tweaking context might fix it. Knowledge sharing is key because the field is new – a clever prompt trick or formatting insight one person discovers can likely benefit others. I’ve personally learned a ton just reading others’ prompt examples and post-mortems of AI failures.

As we move forward, I expect context engineering to become second nature – much like writing an API call or a SQL query is today. It will be part of the standard repertoire of software development. Already, many of us don’t think twice about doing a quick vector similarity search to grab context for a question; it’s just part of the flow. In a few years, “Have you set up the context properly?” will be as common a code review question as “Have you handled that API response properly?”

In embracing this new paradigm, we don’t abandon the old engineering principles – we reapply them in new ways. If you’ve spent years honing your software craft, that experience is incredibly valuable now: it’s what allows you to design sensible flows, to spot edge cases, to ensure correctness. AI hasn’t made those skills obsolete; it’s amplified their importance in guiding AI. The role of the software engineer is not diminishing – it’s evolving. We’re becoming directors and editors of AI, not just writers of code. And context engineering is the technique by which we direct the AI effectively.

Start thinking in terms of what information you provide to the model, not just what question you ask. Experiment with it, iterate on it, and share your findings. By doing so, you’ll not only get better results from today’s AI, but you’ll also be preparing yourself for the even more powerful AI systems on the horizon. Those who understand how to feed the AI will always have the advantage.

Happy context-coding!
