Will AI Cross the Reasoning Gap?

On the other side of the reasoning gap is what many people call artificial general intelligence or AGI.

I’ll call it general reasoning: the ability to achieve human-level reasoning in a variety of domains.

For me, general intelligence is simply the ability to achieve human-level reasoning and creativity in a wide variety of domains. But artificial general intelligence has been used to describe a wide range of abilities, so I’ll use general reasoning to avoid confusion.

Are AIs a few short years away from general reasoning?

Or is there some fundamental challenge to general reasoning that AIs are not remotely close to cracking?

Today’s AIs are deep neural networks: models that learn from data using multiple parameters and layers.

Deep neural networks are the paradigm of modern AI, from large language models (GPT-4 and Gemini) to image generators (DALL-E) to navigation systems (Tesla Autopilot). Going forward, I’ll use “deep neural networks”, “deep learning models”, and “today’s AIs” interchangeably.

If today’s AIs are impacting our lives in notable ways, we’d expect the societal impact of general reasoning to be far greater.

Artificial general reasoning (AGR) could greatly accelerate intellectual progress.

If the proponents of these technologies are to be believed, then, without the human propensity for sleep and toilet breaks, AGR could research cures, build crucial technologies and provide personalised help at scale.

AGR could also do away with much of the tedium in our lives, like the aspects of knowledge work we don’t enjoy.

AGR could do away with the valuable parts of our lives as well.

What’s stopping an AGR from replacing me at my job and writing that convoluted screenplay I’m planning to get to eventually?

Of course, the societal impacts would be more severe: AGR could significantly amplify the harm bad actors and ignorant decision-makers could get up to.

Imagine steady intellectual horsepower in the hands of neighbourhood scammers, negligent bureaucrats, crypto shills, global terrorists and the guy who creates good morning messages on Facebook.

That last joke was probably misplaced. The potential consequences of AGR are very serious.

This obviously motivates the question of this essay: how soon could AI reach general reasoning?

But it also motivates deeper reckoning: should AGR even be our aim? How should we act now to limit future harm?

We’ll bracket out the “should questions” until the end of this essay. But let us keep their high stakes in mind.

How far is AI from crossing the reasoning gap?

It turns out that opinion among AI technicians and theorists is deeply divided.

There are two opposing camps, which I’ll name deep learning optimists and deep learning skeptics respectively.

Both camps have compelling arguments in their favour. But their advocates don’t seem to be hearing each other.

We’ll take a bird’s-eye view of the philosophical terrain, pitting the best arguments of each camp against the other.

This isn’t supposed to make sense yet, but here are the landmarks for our journey ahead:

At the end, you get to decide which camp you find compelling. Or, what evidence you’ll be looking for before you make up your mind.

Regardless of your view, I think you’ll be interested to hear what each side has to say.

Preparing this essay surprised me a few times, and I’m beginning to appreciate how open the debate is.

The future of AI is not a foregone conclusion.

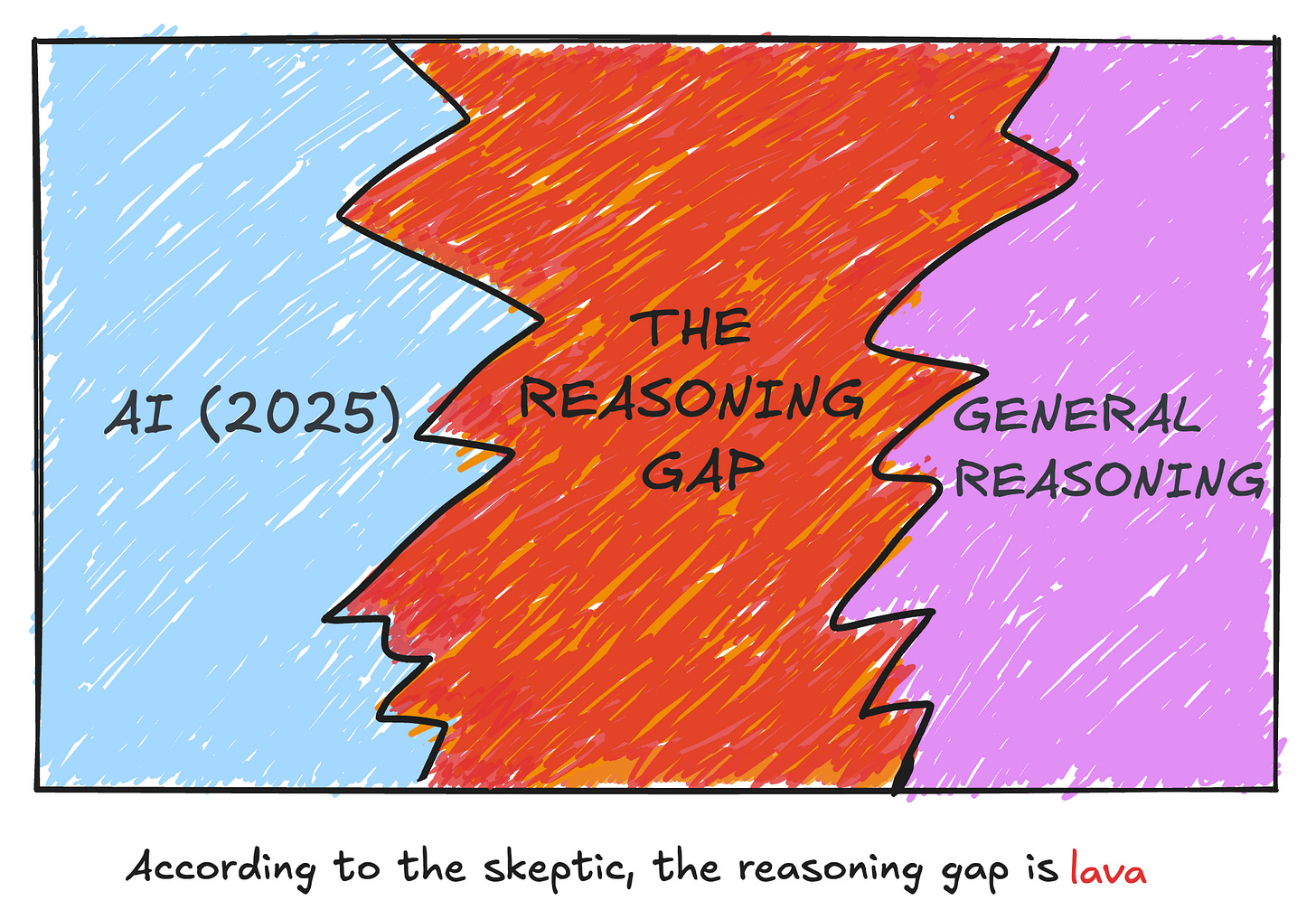

Deep learning skeptics believe that the reasoning gap is a fundamental problem for deep learning models.

Improving deep learning will not resolve AI’s reasoning gap in the foreseeable future.

Deep learning optimists believe that the same methods that have allowed deep learning models to come this far will also result in general reasoning.

Deep learning AIs will cross the reasoning gap in the foreseeable future.

Here, the foreseeable future means both likely and soon. Optimists usually predict general reasoning within a few months or years: often under 5 years, and certainly under 10.

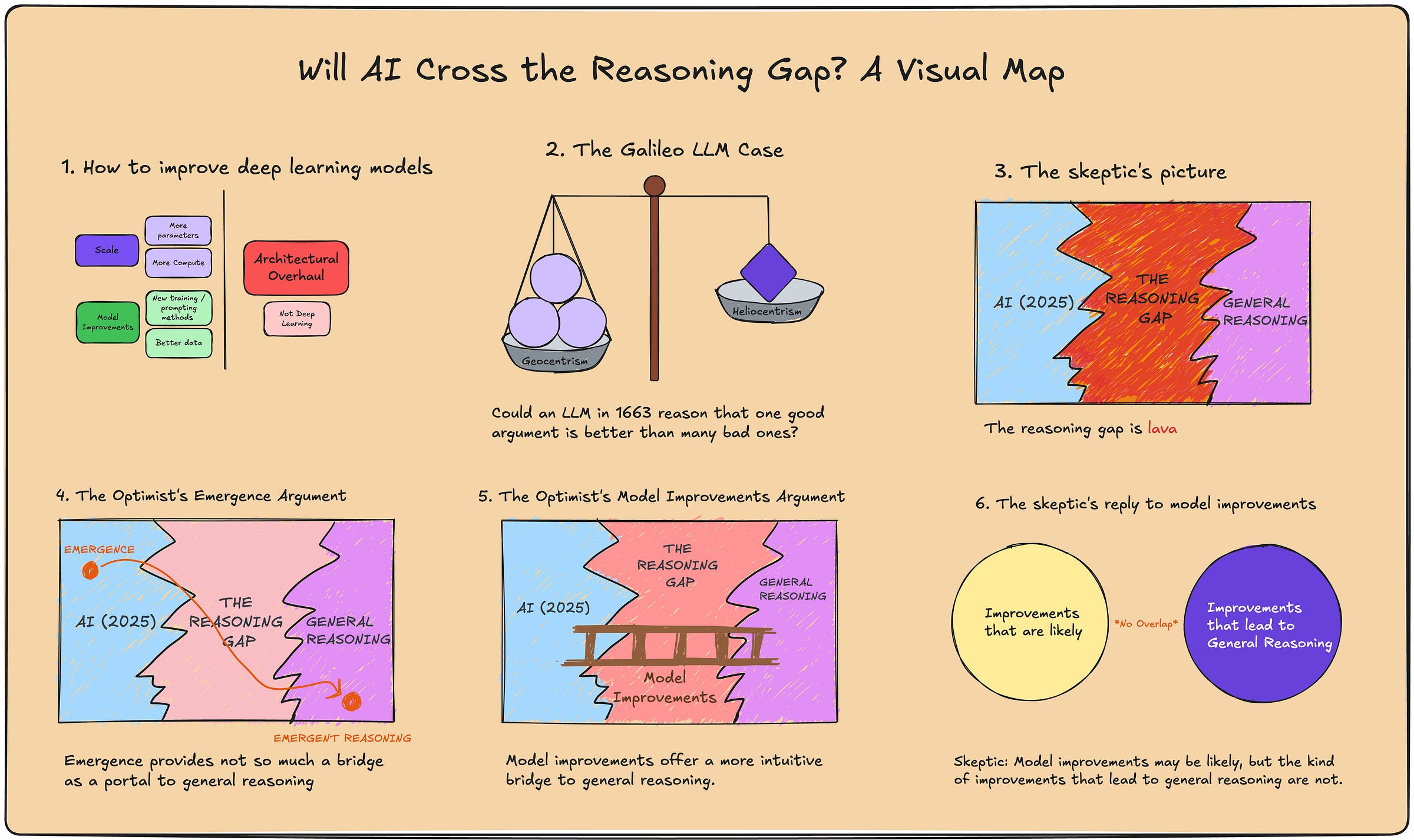

To understand these views, we also need a conception of what it means to “improve deep learning”.

For me, improvements build on deep learning, without creating an entirely different kind of AI architecture. That would be everything on the left side of this diagram:

The deep learning optimist believes that changes on the left - scale and model improvements - are sufficient to lead to general reasoning within a few years. The skeptic disagrees.

Let’s start with what seems to be the less popular view currently.

For the skeptic, the reasoning gap is not the kind of problem AI can just power through with an aspirin and a cold shower.

It is a fundamental limitation of the way deep neural networks are built. Let’s see why.

First, the skeptic argues that AIs don't reason at all.

Notice what allows a deep neural network to do intelligent things in the first place.

Deep neural networks are fine-grained prediction models optimised over large volumes of data. They behave intelligently by predicting the next token.

Undoubtedly, deep neural networks have achieved incredible things through this technique. Large language models (LLMs), for instance, produce impressively intelligent responses to a variety of queries.

But just because an LLM can help you craft a tactful response to your passive-aggressive housemate doesn't mean it understands the concept of friendship or is reasoning through the elements of negotiation [1].

As notable skeptic Shannon Vallor might say, the LLM "simply activates a complex statistical pattern learned from its vast language corpus of human sentences... and predicts a new string of words as a likely extension of the same pattern" [2].

These words just happen to be very useful for your purposes, which is a result of your starting prompt and the AI's fluency with word prediction. Put another way:

LLMs don't understand words, they are simply terrific at completing word puzzles.

But because words encode meanings, with the right prompt an LLM can end up saying some very meaningful things.

Why think LLMs lack understanding? Because even the best LLMs sometimes make rudimentary mistakes and hallucinate, revealing a lack of genuine understanding.

When an LLM confidently answers a math question wrong, or fumbles a previously solved logic puzzle that's slightly rephrased, it reveals that it never really understood these concepts in the first place [3].

What the skeptic has argued amounts to this premise:

Deep learning does not have a mechanism for genuine understanding or human-like reasoning. It produces "intelligent" behaviour through statistical prediction.

While this undermines AI models, the skeptic needs a stronger claim to argue that deep learning can’t cross the reasoning gap.

Because for our purposes, for AI to cross the reasoning gap simply requires that it reliably achieve the results of general reasoning.

Whether AI hacks its way to this result or gets there through real understanding does not affect the end outcome [4].

What the skeptic needs to show is not only that AI does not reason in a human-like way, but also that the statistical method AI uses cannot reliably achieve the outcomes of general reasoning.

To argue this, the skeptic can offer a thought experiment.

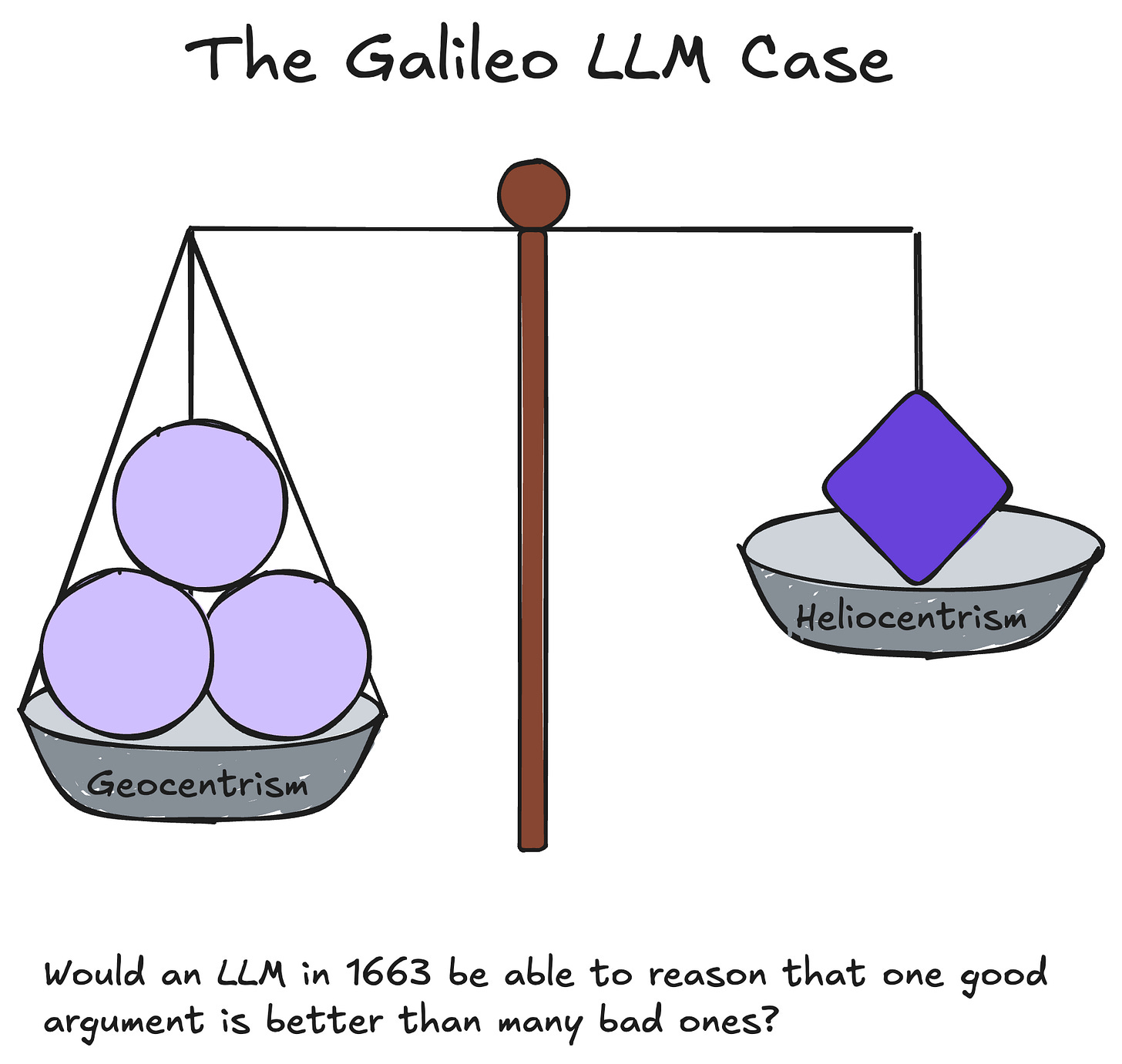

In 1633, there were two competing theories about the universe: geocentrism and heliocentrism, which placed the Earth and the Sun, respectively, at the centre of the universe.

We believe today that heliocentrism, by far the less popular of the two views then, better fit the evidence that was available to us in 1633. Given the evidence, a model capable of general reasoning should be able to recognize this as well.

Given the data we had in 1633, would an LLM be able to reason that heliocentrism was the stronger view?

In this thought experiment from “Theory Is All You Need” [6], Felin and Holweg argue that an LLM would, in most cases, argue for geocentrism over heliocentrism.

After all, the training data of a 1633 LLM would contain far more instances of text defending geocentrism, the popular view.

An LLM would be far more likely to offer sentence completions that mirror the state of the debate then, which would see geocentrism represented in greater volume. The LLM would do so even if the actual evidence for heliocentrism was stronger.

The authors are careful to note that their thought experiment is a comment on the limitations of LLMs today, not those of the future. But the upshot of the Galileo LLM case supports the skeptic’s argument in general:

Statistical prediction, unlike human reasoning, is not a mechanism for accessing truth.

Here, accessing truth means an ability to evaluate claims based on how true they are.

Now, we might object that most human beings in 1633 also supported geocentrism. Recognising heliocentrism amidst the cacophony of geocentric voices is an unfair standard to hold an LLM to.

But the skeptic can reply that it is the principle that counts. While human reasoning is prone to biases of all sorts, we also have an ability to assess the truth of claims.

And when we’re able to look beyond our biases, or when social dynamics are less constraining, we are able to reason our way to unpopular views. After all, some human beings like Galileo did figure out that heliocentrism was the better theory even in 1633.

An LLM just doesn’t seem to have a similar mechanism for independently assessing the truth value of a claim.

No matter what evidence it comes across, it doesn't seem that the LLM has a mechanism for "thinking through" problems.
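To make the skeptic's worry concrete, here is a minimal sketch of a purely frequency-based next-word predictor. It is a toy, not a description of how real LLMs work: the corpus, the `PROMPT` string and the function names are all hypothetical, chosen only to mirror the thought experiment above. The point it illustrates is that the prediction depends on how often a claim appears, and nothing in the code ever looks at evidence.

```python
from collections import Counter

# Hypothetical prompt; every sentence in the toy corpus starts with it.
PROMPT = "at the centre of the universe is the"


def build_corpus(n_geocentric: int, n_heliocentric: int) -> list[str]:
    """Toy historical corpus: sentences differ only in their final word."""
    return ([f"{PROMPT} earth"] * n_geocentric
            + [f"{PROMPT} sun"] * n_heliocentric)


def next_word_distribution(corpus: list[str], prompt: str) -> dict[str, float]:
    """Relative frequency of each word that follows `prompt` in the corpus."""
    counts = Counter(
        sentence[len(prompt):].split()[0]
        for sentence in corpus
        if sentence.startswith(prompt + " ")
    )
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}


def predict(corpus: list[str], prompt: str) -> str:
    """Return the most frequent continuation; evidence plays no role."""
    dist = next_word_distribution(corpus, prompt)
    return max(dist, key=dist.get)


# A hundred geocentric sentences against a single heliocentric one.
corpus = build_corpus(n_geocentric=100, n_heliocentric=1)
print(next_word_distribution(corpus, PROMPT))
print(predict(corpus, PROMPT))
```

With this corpus, `predict` returns "earth" simply because that word is better represented. Flipping the counts flips the answer, which is the skeptic's point: the output tracks volume, not truth. Whether real deep learning models are limited in the same way is exactly what the two camps dispute.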

With this thought experiment the skeptic has defended the following premise:

Premise 2: Deep learning has no mechanism for accessing truth.

To complete the argument, the skeptic can offer the other premise:

Premise 1: If deep learning has no mechanism for accessing truth, then scaling deep learning cannot resolve AI's reasoning gap.

Without a mechanism for accessing truth, an LLM's ability to reason correctly seems conditional on the truth coinciding with whatever is well-represented in its dataset.

While that can allow for great specific problem solving, it does not allow for general reasoning.

After all, general reasoning requires the ability to figure out which reasons are better than others.

Given a hundred bad arguments for why X is true and one good argument for why X is false, a reasonable intelligence should have some way of discerning that the latter view is better supported.

It doesn't seem that an LLM could do that.

Having seen why the two premises are plausible, we can now see the skeptic’s argument in its entirety [7]:

Premise 1: If deep learning has no mechanism for accessing truth, then scaling deep learning cannot resolve AI's reasoning gap.

Premise 2: Deep learning has no mechanism for accessing truth.

Conclusion: Therefore, scaling deep learning cannot resolve AI's reasoning gap.

The conclusion is severe: the reasoning gap isn’t something deep learning AIs can get across.

The skeptic's challenge is based on the fact that deep learning lacks an intuitive mechanism for achieving general reasoning.

How can the optimist respond to this?

The optimist can concede that there is no intuitive mechanism by which deep learning could achieve general reasoning.

But neither is there an intuitive mechanism for how deep learning could have fluid conversations or create lush illustrations out of text prompts.

Yet, AI now regularly achieves these results. So why can it not go further?

The crux of the optimist’s reply is that our expectations of deep learning should not be based on whether we can intuit a mechanism for it.

Instead, our expectation of its future possibilities should be based on how it has scaled so far.

And when we observe the effects of scaling deep learning so far, we see the emergence of some reasoning skills. There is no reason to expect that further scaling deep learning will not lead to general reasoning and beyond.

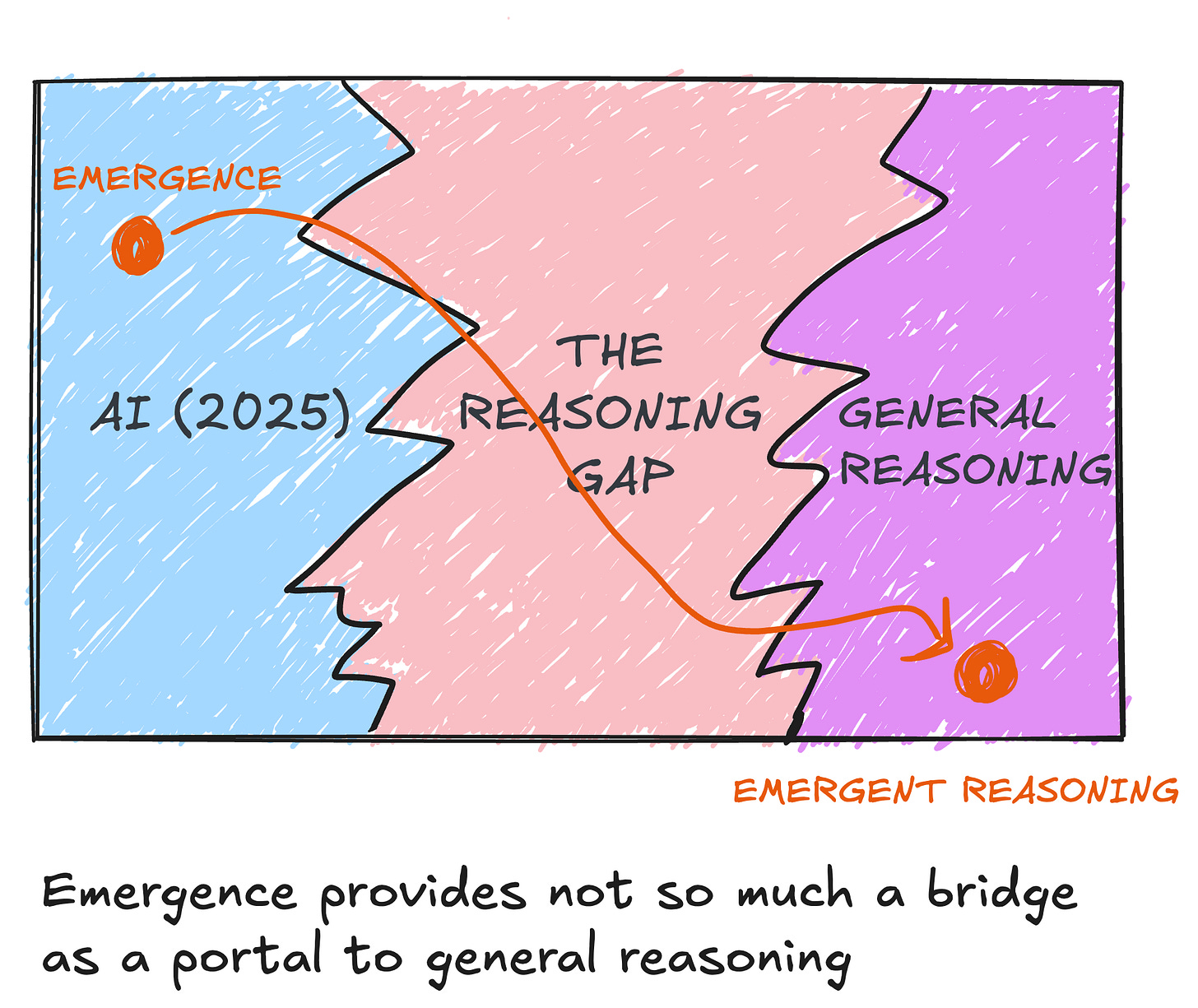

Here the optimist is invoking the phenomenon of emergence.

A property is emergent within a system if it is only observed after the system has achieved a certain scale [8].

Emergent properties are not present when the system is small, but are present when the system is large.

Crucially, emergence implies that there are properties a system gains on scaling which could not be inferred from its state at a smaller scale.

If reasoning truly is an emergent behaviour of deep learning models, then our lack of an intuitive explanation for how it arises is only to be expected.

You can't infer an emergent property from your theoretical armchair! Scale the system and it will appear.

The optimist’s argument can be captured in the following way:

Premise 1: If previously scaling deep learning has led to the emergence of some reasoning skills, then scaling deep learning can resolve AI's reasoning gap.

Premise 2: Previously scaling deep learning has led to the emergence of some reasoning skills.

Conclusion: Therefore, scaling deep learning can resolve AI's reasoning gap.

And we can already see the force behind each premise.

Premise 1 seems plausible enough. If previously scaling deep learning has led to the emergence of reasoning skills, then reasoning is an emergent behaviour within deep learning.

We should then expect further scaling deep learning models to allow for the emergence of more complex forms of reasoning, and eventually general reasoning.

Premise 2 states that reasoning skills have emerged through scaling deep learning previously.

For the optimist, this is evidenced in the behaviour of AI today. We can all reflect on our own experiences with LLMs for example, and how they seem to be genuinely reasonable conversationalists for the most part.

More systematic reviews of GPTs seem to echo these anecdotal experiences:

"GPT-4 can solve novel and difficult tasks that span mathematics, coding, vision, medicine, law, psychology and more, without needing any special prompting." [9]

Regardless of whether AIs are reasoning in the way humans do, they do seem to be reliably achieving the results of reasoning in a wider domain of tasks. To the optimist, this is definitive evidence of the emergence of reasoning skills within AIs.

And put together, the premises make a strong case for believing that general reasoning AIs are coming.

Scaling has led deep learning models to achieve notable improvements in reasoning. We should expect that further scale will lead to greater improvements and, eventually, general reasoning.

What the optimist has provided is not exactly a bridge to general reasoning as much as a portal.

We may not understand the mechanism by which AI scales to achieve general reasoning. It might even seem like magic to us. But that doesn't mean it's not going to get there.

The skeptic has a couple of potential replies to the optimist's emergence argument.

The first is to deny that reasoning has emerged at all. This would attack the optimist's second premise:

Premise 2: Previously scaling deep learning has led to the emergence of some reasoning skills.

To deny that scaling deep learning has led to the emergence of reasoning skills, the skeptic can offer alternative explanations for what appears to be emergent reasoning in deep learning models.

For example, the skeptic may claim that the apparent reasoning abilities of larger deep learning models are in fact a consequence of problem familiarity.

It is quite likely, for example, that many of the reasoning tasks which confounded previous LLMs have now become part of the training data of larger LLMs.

Therefore, the improved performance of larger LLMs on certain reasoning tasks may not indicate emergent reasoning abilities. This is just an instance of the LLM doing what it does well: solving problems specific to its training data. It is just that the training data now incorporates a wider set of problem types [10].

Now, an optimist may respond that expanding the AI's dataset sufficiently could itself lead to general reasoning. Why could the training data of deep learning models not eventually be exhaustive enough to cover general reasoning in all cases?

This response is worth consideration.

Generalisability seems to imply the ability to reason beyond one’s training data - how could our training data ever be comprehensive enough to account for the majority of problems in reality?

But the optimist might argue that a training dataset can represent reality well enough for an AI model to achieve general reasoning while being concise enough to feasibly be processed by the model [11].

An alternative reply of the skeptic is to concede that scaling deep learning may have led to the emergence of some level of reasoning results, but to argue that this will not scale all the way to general reasoning.

This way, the skeptic is objecting to premise 1:

Premise 1: If previously scaling deep learning has led to the emergence of some reasoning skills, then scaling deep learning can resolve AI's reasoning gap.

Such a skeptic might say:

You're right, we underestimated what scaling deep learning models could achieve. But that doesn't mean scaling is going to keep working.

One genie has already appeared from this lamp. It doesn't mean the lamp will keep producing genies.

- a deep learning skeptic probably

This reply exposes a key weakness within the emergence argument.

Since emergence doesn't identify the mechanism by which scale yields results, nothing in the emergence argument certifies that scaling will continue to improve the reasoning of deep learning models.

Under emergence, the potential of deep learning models becomes a purely empirical question: scale the models and watch what happens.

For the optimist, scale-and-watch is fun when deep learning yields results.

But it quickly becomes excruciating when results slow down. Because scaling, like going to college or buying water at the airport, is expensive.

This more or less describes the state of things right now. Sentiments about the future of deep learning swing from wildly positive to pessimistic depending on how impressive (e.g. DeepSeek) or disappointing (e.g. GPT-4.5) the newest AI release is.

So, while the emergence argument has its strengths, the optimist may wish to try a second approach.

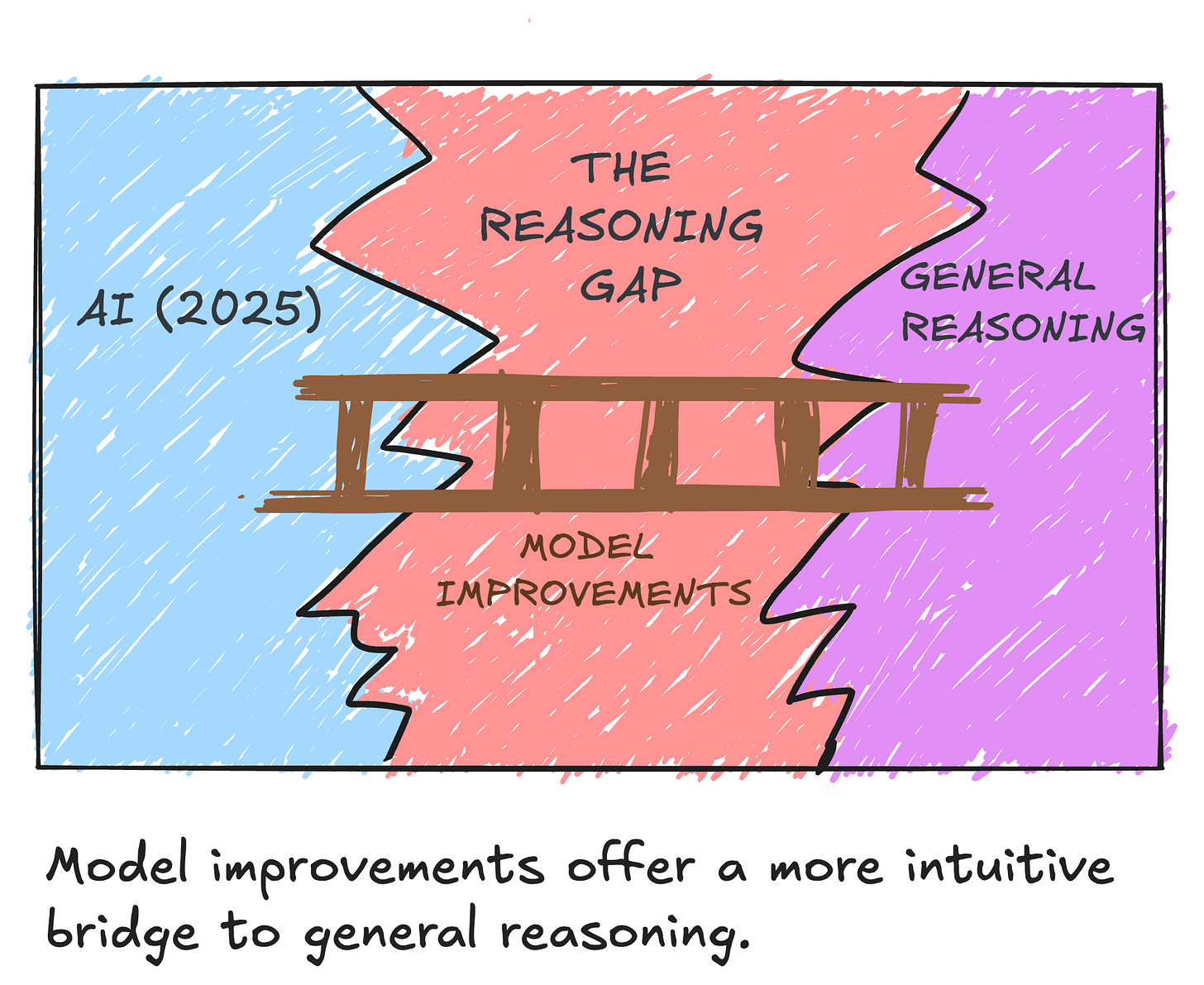

The second path for the optimist is to argue that AI will achieve general reasoning not through emergence, but through concrete model improvements.

This argument may even capture the essence of the optimist’s view better: it is not just scale but the continuous development in AI that fuels the optimist’s belief that general reasoning is within reach.

The optimist might then present the following argument:

Premise 1: If model improvements have previously led to leaps in reasoning for deep learning, then model improvements can resolve AI's reasoning gap in the foreseeable future.

Premise 2: Model improvements have previously led to leaps in reasoning for deep learning.

Conclusion: Model improvements can resolve AI's reasoning gap in the foreseeable future.

First consider premise 2.

Arguably, model improvements have led to leaps in reasoning for deep learning previously.

Just consider the transformer architecture, which came out in 2017 [12]. Transformers, which led to ChatGPT and successive LLMs, brought about a radical jump in the reasoning abilities of deep learning models.

Recent improvements such as chain-of-thought prompting have continued to build on the reasoning gains of previous improvements, resulting in AI models that get steadily better [13].

That’s the force behind premise 2. What about premise 1?

Does the success of past model improvements make it plausible that future improvements will achieve general reasoning?

This seems plausible too.

Future improvements will advance the powerful models we already have, allowing them to build on the reasoning gains we’ve already seen.

If model improvements have been steadily improving the reasoning results of AI models, then we should expect further improvements in the near future to lead to even better reasoning results and eventually general reasoning.

Together, these premises lead to the conclusion that model improvements could bring us general reasoning AIs.

This argument is quite compelling, driven by the feeling that the AI field is rapidly improving, and that model improvements stack to make AI continuously better.

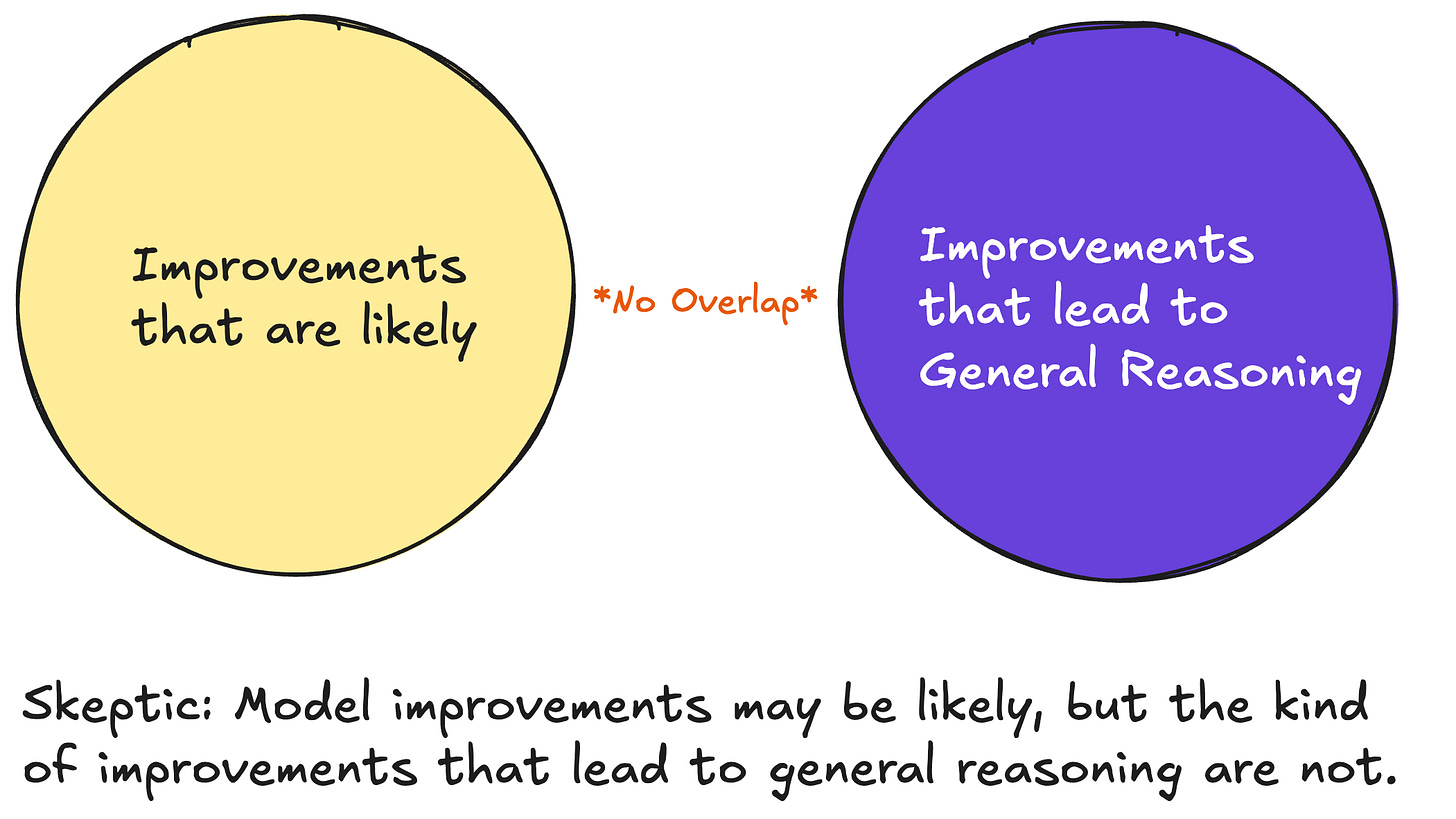

How might the skeptic respond to this?

The skeptic may first defuse the hype around model improvements in general. Model improvements can make AI better in various ways without making it any better at general reasoning.

Many model improvements make AI computationally cheaper or improve its ability to solve for specific situations. This may not help AI in general reasoning at all.

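The skeptic can then argue that the improvements which would actually advance AI towards general reasoning are rare, and not something we can count on.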

The skeptic is thus dividing model improvements into two categories:

There are model improvements, akin to chain-of-thought prompting in their impact, which are likely to continue steadily emerging in the future. But these model improvements do not advance us towards general reasoning: they do not actually make AIs better at it.

There may exist model improvements that help us advance towards general reasoning, perhaps similar to transformers in their impact. However, such breakthroughs are incredibly rare, if they even exist for general reasoning.

There is no evidence to suggest we are close to such a breakthrough.

The skeptic argues that we cannot expect the second kind of model improvements to arrive for AI in the foreseeable future. So, we cannot expect model improvements to help AI achieve general reasoning [14].

The skeptic is returning to a core intuition: deep learning doesn’t optimise for reasoning.

Most model improvements make deep learning better at what it does - solving specific problems through statistical prediction. These improvements don't make AI better at general reasoning.

The kinds of improvements that would make AI better at general reasoning are of a radically different sort and not likely to come by (if they even exist).

At this point, the optimist might try to end this argument by specifying the kinds of model improvements that will lead to general reasoning (the model improvements argument itself doesn’t require this).

There are several such proposals on the table [15]. And while I am not in a position to evaluate them, none so far appear to have put the question conclusively to rest.

We’ve reached the top of the mountain. Let's look down at the terrain as a whole.

Our question was whether deep learning models can achieve general reasoning in the near future.

First, the skeptic argued:

Deep learning models are fancy statistical predictors. They have no mechanism for reasoning. So, scaling deep learning isn't going to get us to general reasoning.

The optimistic camp replied with the emergence argument:

Deep learning does not need an intuitive mechanism for reasoning.

We have already seen that reasoning is an emergent phenomenon in deep learning models. All we need for general reasoning AI is to scale deep learning further.

The skeptic could reply by denying that reasoning is an emergent phenomenon in deep learning models at all, though this is not easy to do.

The stronger problem with emergence is that:

While emergence allows for the possibility of achieving general reasoning, it doesn't do much to argue for its probability.

When the returns of scaling seem to diminish, so does our confidence in the potential of general reasoning.

Given this cost with emergence, the optimist can try a different route, the model improvements argument:

Model improvements, like the transformer, are the reason for AI's explosive growth.

As new improvements build on old ones, AI is going to get steadily better at reasoning and, in the foreseeable future, achieve general reasoning.

To the model improvements argument, the skeptic offers the following response:

While we often see new model improvements, most of them don't make AI better at reasoning.

The kind of improvement we are looking for is a rare and radical breakthrough. We shouldn't expect this to occur in the foreseeable future.

Whether you tilt towards the optimist’s or the skeptic’s camp will depend on which arguments you find compelling and what evidence strikes you as most telling.

The skeptical camp draws heavily on the intuition that what deep learning models do - which seems to be a form of statistical prediction - does not lead to general reasoning.

The evidence that the skeptic weighs most heavily is the mistakes AIs make today. To the skeptic, these are an undeniable marker of AI's inability to learn reasoning.

The optimistic camp draws heavily on the intuition that scale and model improvements have helped deep learning achieve astonishing results. To the optimist, it seems evident that this improvement will continue and lead to general reasoning soon.

The evidence that the optimist weighs most heavily is the positive results of AI models, and AI improvement over time.

To the optimist, the fact that GPT-4 is so much better than GPT-2, and GPT-2 is so, so much better than deep learning models in 2017, is reason to believe that there will be a general reasoning AI in the near future.

Regardless of which camp you find compelling, here are 3 upshots I see:

Since 2022, there has been both serious hype and genuine evidence in favour of the deep learning optimist.

With the plethora of "reasoning" models being released every month, it's easy to feel that AIs with general reasoning are around the corner.

But on closer inspection, it seems that the best arguments in favour of the deep learning optimist still leave a lot to be done.

Much is expected from future developments that haven't yet happened and aren't necessarily entailed by what AI has already achieved.

Whether scale or model improvements to deep learning will lead to general reasoning is, to me, still an open question.

Our uncertainty around the future of deep learning reveals our fundamental lack of understanding of the mechanisms that make deep learning “intelligent”.

We need AI interpretability and a philosophy of AI focused on understanding the cutting-edge developments in the space.

Before I tell you what I plan to do about this, here's why our lack of understanding is a problem:

It is tempting to enjoy the fruits of deep learning without understanding how it works.

But it comes at a great cost.

The economic case for understanding deep learning is that scaling is very expensive and has high opportunity cost. Why scale-and-pray when we can understand-and-build?

The universal case for understanding deep learning is far more significant.

We cannot safely give agency to systems we don't understand.

If we don't understand how AI works, we don't understand how it breaks.

And while mistakes don't cost much when AI generates our LinkedIn posts, they could cost us everything if AI is given the agency to run our research labs and fly our planes.

First, it’s your turn to weigh in on the reasoning gap.

Is one camp obviously right? Do you have evidence that changes everything? Is the entire question misplaced?

Next, here’s what I’ll be doing.

This essay bracketed out two questions:

a) What kind of intelligence does deep learning have?

b) How should we approach the future of deep learning?

My next AI essay will dive into the first.

What kind of intelligence does AI have?

We'll develop a theory of intelligence and see how far it takes us in exploring the following:

How does AI compare to human intelligence? Can we anticipate when AI will do something catastrophically stupid? What, if anything, will be humanity's edge in the future?

To get that essay in your email, subscribe to internet philosophy.

And until then, here’s a final question for both of us.

We’ve spent a lot of time asking whether AI could reason like humans do.

But how do humans reason in the first place? What are you and I doing when we say we are reasoning?

What does it mean to reason at all?

Thanks to Atharva Brahmecha for his comments on a draft of this essay.