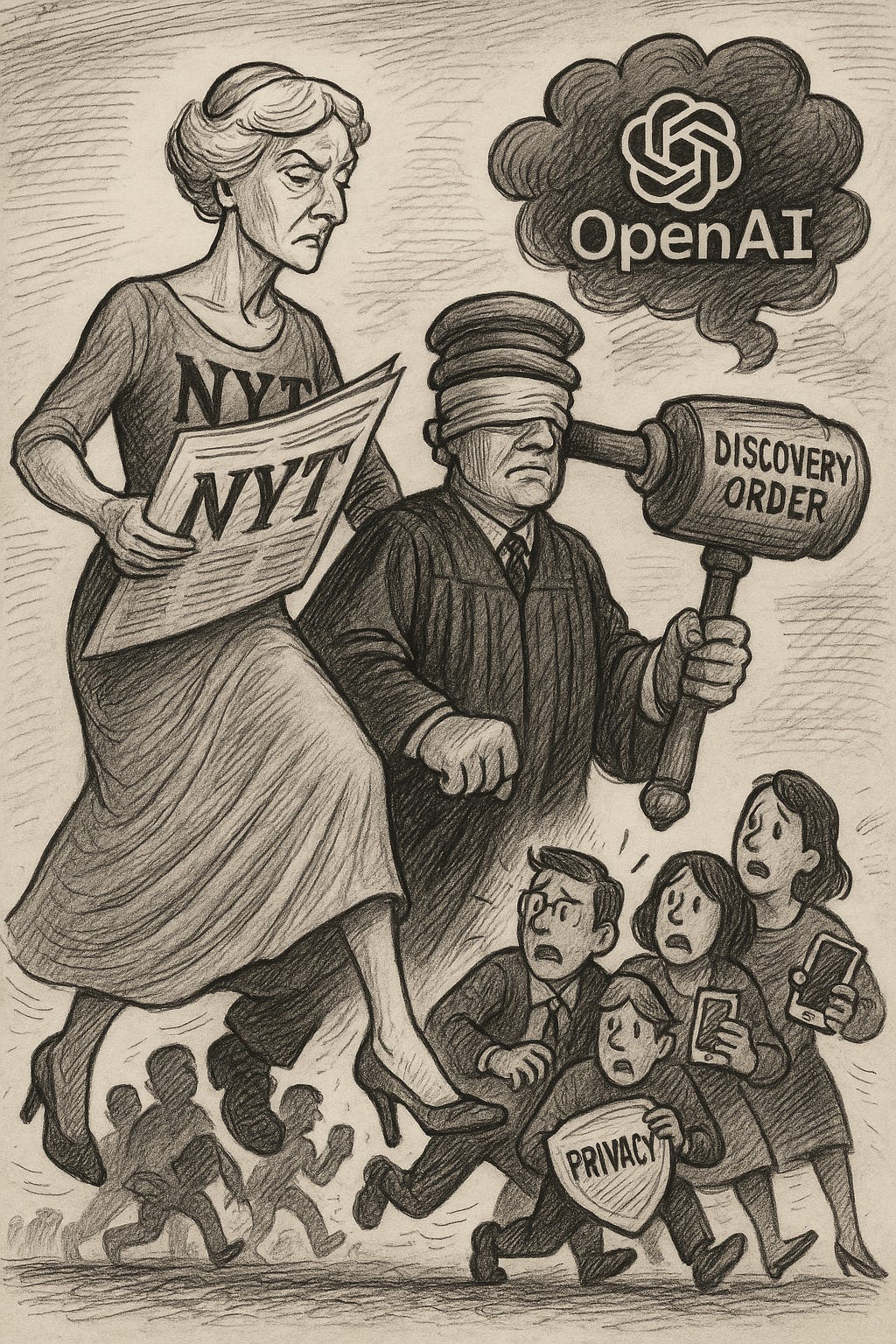

The Gray Lady's Data Dragnet: How One Court Order Just Nuked ChatGPT Privacy

I think we all need to know this, so I’m pulling it from behind the paywall and I’m sending it right now instead of waiting until Monday.

It’s absolutely horrifying. And no, I’m not going to comment on the merits of the case itself. My focus here is exclusively on the choice that the NYT made to ask for Judge Wang to all in order to make that very personal data eligible for data discovery .

Judge Wang’s discovery order in the NYT case compels OpenAI to stockpile every consumer chat and many business chats, forcing OpenAI to break its own privacy commitments to users.

The NYT-backed overreach disregards user privacy, sets up massive legal conflicts with GDPR in the EU, and likely does nothing substantive to prove copyright infringement. It’s overreach that reflects a fundamental misunderstanding of how LLMs work.

I try to be even-handed a lot, and I think this is pretty even-handed tbh

I’m not making this call to protect OpenAI in any way. They aren’t perfect. They make mistakes. I will continue to call those out. The last time I called out a poor product implementation on their side was this week.

But no matter what the merits of the case, this ruling is an absolute travesty for AI users. Not just ChatGPT users! It casts a chilling effect across the entire industry. And it’s horrendous that a so-called safeguard of the consumer (the NYT) has demanded it. We need to demand better LLM fluency from the media.

I’m particularly furious because the court has just forced OpenAI to do the very thing critics always complain about—hoard our personal conversations—yet the long arm of government rooting through millions of private conversations solves exactly of the copyright questions in The New York Times v. OpenAI.

Yep. NONE.

Here’s why the log-hoarding demand is a dead end for the copyright claims: the lawsuit hinges on what went GPT-4’s weights during training and whether that ingestion was transformative fair use or straight-up infringement.

Those training runs were frozen . Capturing billions of chats tells you nothing about that historical data set—it’s like subpoenaing every email sent from an iPhone to prove Apple stole a song to train Siri.

At best, user logs might reveal occasional snippets of Times prose the model regurgitates, but the Times already demonstrated that in its complaint with a handful of cherry-picked prompts.

If they need more examples, a targeted test suite would surface them in an afternoon; forcing OpenAI to warehouse the entire global chat stream is massive overkill. Most critically, the presence (or absence) of a verbatim quote in an output log : whether ingesting those articles in the first place was lawful. That turns on statutory factors like transformation and market harm—not on what random users typed to the model last night.

Bottom line: neither Judge Wang nor the NYT understands how LLMs actually work. It shows. And it’s hurting all of us.

And this isn’t a one-time thing! As I noted above in the facts—in May Judge Wang ordered the company to “preserve and segregate all ChatGPT output logs ,” a directive prompted by the Times’ discovery demands. Indefinitely. While the case runs on. Possibly for years. It’s nothing less than a torpedo at the heart of AI trust.

The sheer scope of the order is breathtaking. It sweeps in every prompt and response from anyone on ChatGPT Free, Plus, Pro, Team, or the standard API—even data users already deleted. Only Enterprise, Edu, and Zero-Data-Retention API customers are exempt. OpenAI is absolutely right to warn that this contradicts its long-standing 30-day deletion policy and “abandons privacy norms” that protect everyday users.

Why is the Times asking for this trove? As I noted, the newspaper claims it needs the logs to show that OpenAI encourages infringement. But that betrays a fundamental misunderstanding of how large-language models work. The question in a copyright case is whether training data contained protected text— Preserving billions of post-training conversations won’t reveal what went into GPT-4’s weights two years ago; it merely warehouses private data that is irrelevant to the alleged wrongdoing.

Judge Stein’s decision therefore creates a perverse situation: to “protect” an 18-month-old newspaper archive, the court undermines live, private conversations between millions of citizens and AI chat instances they regard as personal companions. That is the definition of judicial overreach. It also hands ammunition to every critic who warns that AI companies can—and now must—keep everything we say forever. Public trust in AI was fragile before; this is a sledgehammer blow.

There is also a blatant ethical hypocrisy here. The Times routinely positions itself as a principled watchdog on technology, lecturing Silicon Valley on surveillance and privacy abuses. Yet it has asked a court to impose one of the most sweeping data-retention orders in tech-policy history. That request does not “elevate the ethics of AI” in any recognizable sense; it weaponizes discovery to score legal points while disregarding the collateral damage to user privacy.

Nor does the order make sense under global privacy regimes. OpenAI now risks conflict with the GDPR’s data-minimization and right-to-erasure doctrines for European users, and similar statutes elsewhere, because a U.S. judge insists the company must hold on to data those very laws tell it to delete. OpenAI says it will store the logs in a sealed, audited enclave accessible only to a small legal team, but that doesn’t cure the underlying legal paradox.

The chilling effect is immediate. There are going to be enterprises who pause internal ChatGPT pilots until the appeal is resolved. If courts can compel indefinite retention on a whim, every compliance team in the Fortune 500 will rethink AI adoption. Ironically, a lawsuit meant to “protect journalism” now risks slowing the responsible deployment of the very technology that could help newsrooms thrive.

And I can’t help but wonder how unwelcome such a chilling effect would be in the NYT newsroom—if the Grey Lady really does see this as an existential war with OpenAI, then attacking your enemy’s ability to get revenue makes sense.

Part of what is frustrating is that there were narrower, saner paths. The Times could have requested a capped sample of logs linked to specific alleged infringements or accepted hashed-match evidence rather than a raw data dump. Instead, we got a blanket order that tramples user rights, teaches the public to distrust AI platforms, and still won’t answer whether GPT-4’s training set unlawfully copied Times articles. That is bad law and worse technology policy, and I hope the district court vacates it before lasting damage is done.

Guys, I believe you mean well. I do. I don’t assume all of you have a particular stance on this lawsuit! I’m certainly not blaming you for what a judge decided or even for what one media company chose.

I can distinguish between journalists and boardrooms.

But there’s something you can do in all this that we desperately need: take the time to understand AI so you can report on it accurately. My note on how AI actually works in pre-training and how that inherently impacts claims made in this case isn’t brilliant or new. It should be table stakes journalism to understand how LLMs work well enough to report on these kinds of claims correctly.

If anyone in journalism has serious claims they’re investigating and want a perspective on how AI actually works, my DMs are open. So is my email. I do not care if you cite me or if I am on background. I just want you to not misinform the public. Please.

And last but not least if you’re not in journalism, share this with someone who doesn’t understand this case or needs to hear about the privacy news! No one should be misinformed.