You may also like...

Falconets on the Brink: U-20 World Cup Dream Hinges on Senegal Showdown Amidst Injury Scare

Nigeria's U-20 women's team, the Falconets, are preparing for a critical FIFA U-20 Women’s World Cup qualifier return le...

Nwabali's Shock Chippa Exit & Global Plea: Star Goalkeeper Eyes New Horizon

Super Eagles goalkeeper Stanley Nwabali is now a free agent after mutually terminating his contract with Chippa United. ...

Stars Collide, New Films Revealed: European Film Market Heats Up

The European Film Market in Berlin is buzzing with new film announcements, prominently featuring the global sales launch...

Motionless in White Rocketing to No. 1 on Hard Rock Charts!

Motionless in White's "Afraid of the Dark" has claimed the No. 1 spot on Billboard's Hot Hard Rock Songs chart, marking ...

Country Sensation Ella Langley Dominates Billboard Hot 100!

Ella Langley has achieved a historic milestone with her song “Choosin’ Texas,” becoming the first woman to top the Billb...

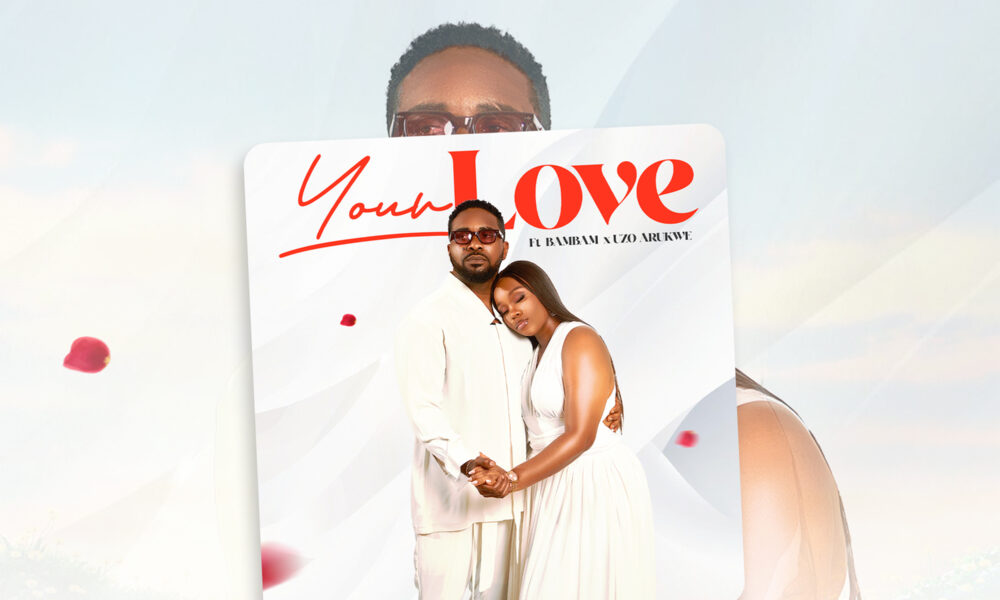

Music Alert: Uzor & BamBam Drop 'YOUR LOVE' as Official Soundtrack for 'WITHOUT YOU'!

Uzor Arukwe and BamBam return with "Without You," an emotional love story exploring love, loss, and healing after heartb...

Fashion Frenzy: Veekee James Stuns in Crystal-Adorned Maternity Gown and Opera Gloves!

Fashion designer Veekee James hosted an unforgettable baby gender reveal party in Dubai, stunning guests with her elegan...

Historic Breakthrough: Ghana & Zambia Forge Visa-Free Travel Link!

Ghana and Zambia have inaugurated a historic visa-free travel agreement, promising to boost regional mobility, trade, to...