America’s AI leadership wasn’t accidental. It came from businesses, small and large, solving problems using the tools available to them in a policy environment that fostered innovation. Now, with global competition heating up, Congress has a chance to reinforce that success.

On May 8, 2025, the Senate Commerce Committee held a hearing titled “Winning the AI Race: Strengthening U.S. Capabilities in Computing and Innovation.” The focus was on how America can lock in its AI leadership by removing barriers and avoiding the regulatory overreach seen elsewhere. With AI adoption surging, the discussion could not have been more timely. The numbers are already in.

Over the past decade, AI left the lab and entered everyday life. It’s reshaping how we work, connect, and solve problems. That shift didn’t happen on its own. It was powered by U.S.-based innovation and made possible by regulatory and tax frameworks that created incentives for investment.

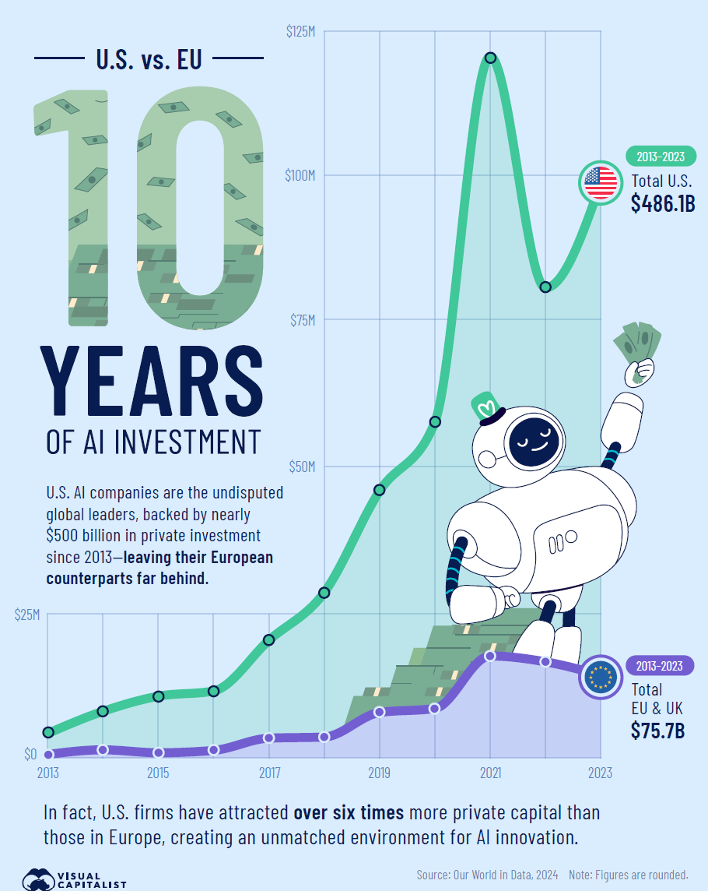

Over that same 10-year period, data shows that the United States attracted more than $486 billion in private AI investment, while the EU, even when combined with the UK, attracted just $75 billion. In most years, the United States outpaced Europe six- to 12-fold. Why? In large part because our laws encourage, rather than inhibit, investment in innovation.

Europe has pursued a highly regulatory approach to AI development. Laws such as the General Data Protection Regulation (GDPR), the Digital Markets Act (DMA), and the EU AI Act have imposed layer upon layer of compliance requirements on developers. Although intended to build consumer trust, they have stalled innovation and driven away investment.

The EU AI Act categorizes AI systems by potential risk and subjects them to escalating and burdensome pre-market requirements. When these requirements are combined with restrictions on data use and platform design under the GDPR and DMA, not to mention bankruptcy laws that severely punish the business failures that are routine stepping stones for entrepreneurs in the United States, the result is a compliance-first, risk-intolerant environment that makes experimentation costly and prohibitively uncertain.

Startups in this EU regulatory environment face higher legal costs, longer development timelines, and diminished potential returns, all before they even find product-market fit. And investors have noticed. Overlapping and contradictory regulations, such as the AI Act and the GDPR, impose significant compliance burdens that cost businesses hundreds of billions annually and hinder their ability to scale, discouraging both investment and innovation.

This isn’t a talent issue. Europe has world-class engineers and researchers. The problem is structural. As the 2024 Draghi report on EU competitiveness highlighted, restrictive European regulations create barriers at every stage for innovative companies that want to scale.

Now Congress has a choice. It can learn from the EU’s missteps or repeat them.

Following the Senate Commerce hearing, Sen. Ted Cruz (R-TX) floated the idea of a regulatory sandbox that could pause AI regulations, similar to the approach that helped the internet grow in the 1990s. In exchange for complying with guardrails, innovators could gain space to test new models without the burden of forthcoming, overlapping state regulations.

It’s an interesting concept, but its design will matter. A sandbox can succeed only if it serves everyone, not just the largest players. For small businesses and startups, regulatory uncertainty can be existential. A well-crafted sandbox could give them breathing room to learn and adapt within clear guardrails.

We must avoid the mistakes of the EU. That means no DMA-style detours. And it means building a regulatory model that works for both innovators and the consumers who benefit from the new and evolving products and services they bring to market.