DeepSeek’s AI Style Matches ChatGPT’s 74 Percent Of The Time: New Study

A new Copyleaks study finds 74.2% of DeepSeek’s reviewed written text has a stylistic fingerprint similar to OpenAI's models. This overwhelming similarity was not seen with any other models tested, implying DeepSeek may have been trained on OpenAI outputs.

Getty Images

A new study finds that a stunning 74.2% of DeepSeek’s written text reviewed in the research bears a striking stylistic resemblance to OpenAI’s ChatGPT outputs. The findings suggest that DeepSeek may have been trained on ChatGPT outputs.

AI detection firm Copyleaks provided the study exclusively for this article ahead of its planned publication on Cornell’s arXiv.org repository. According to the company, this prospective research could have significant implications for intellectual property rights, AI regulations and AI development going forward.

The Copyleaks study used screening technology and algorithmic classifiers to detect the stylistic fingerprints of written text produced by various language models, including those from OpenAI as well as Claude, Gemini, Llama and DeepSeek. The classifiers used a unanimous voting approach to reduce false positives and ensure high precision.
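To make the "unanimous voting" idea concrete, here is a minimal Python sketch. It is not Copyleaks' implementation, which the study summary does not describe in detail. It assumes that each classifier returns a single model label for a text and that the ensemble abstains unless every classifier agrees. The function `unanimous_vote`, the labels `model_a` and `model_b`, and the three toy classifiers are all hypothetical stand-ins invented for illustration.

```python
from typing import Callable, Optional, Sequence

# Assumption: a classifier is any function that maps a text to a model label.
Classifier = Callable[[str], str]


def unanimous_vote(text: str, classifiers: Sequence[Classifier]) -> Optional[str]:
    """Return a label only if every classifier agrees; otherwise abstain (None)."""
    if not classifiers:
        raise ValueError("at least one classifier is required")
    labels = {clf(text) for clf in classifiers}
    # A single distinct label means the vote was unanimous.
    return labels.pop() if len(labels) == 1 else None


# Toy classifiers with made-up rules. Real stylistic classifiers would be
# trained models; these exist only to exercise the voting logic.
def by_keyword(text: str) -> str:
    return "model_a" if "delve" in text.lower() else "model_b"


def by_length(text: str) -> str:
    return "model_a" if len(text.split()) > 5 else "model_b"


def by_punctuation(text: str) -> str:
    return "model_a" if ";" in text else "model_b"


TOY_ENSEMBLE = [by_keyword, by_length, by_punctuation]

if __name__ == "__main__":
    for sample in (
        "Let us delve into this topic; it has many sides.",
        "Short text",
        "Let us delve in",
    ):
        print(repr(sample), "->", unanimous_vote(sample, TOY_ENSEMBLE))
```

Requiring full agreement trades coverage for precision: texts on which the classifiers disagree get no label at all, which is one plausible way such a scheme would reduce false positives.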

Interestingly, while written text generated by most models was easily distinguished as unique to each of them, a substantial majority of DeepSeek’s outputs were classified as having been generated by OpenAI’s models.

Shai Nisan, head of data science at Copyleaks, wrote in an email exchange that the study was similar to a handwriting expert trying to identify the author of a manuscript by comparing the handwritten text with other samples from various writers. In this instance the results were surprising and significant.

“Our research utilized a ‘unanimous jury’ approach and identified a strong stylistic similarity between DeepSeek and OpenAI’s models, which wasn’t found with other inspected models,” he explained.

Nisan added that this raises crucial questions about how DeepSeek was trained and whether it leveraged OpenAI’s outputs—potentially without authorization.

“While this similarity doesn’t definitively prove or declare DeepSeek as a derivative, it does raise questions about its development. Our research specifically focuses on writing style; within that domain, the similarity to OpenAI is significant. Considering OpenAI’s market lead, our findings suggest that further investigation into DeepSeek’s architecture, training data and development process is necessary,” wrote Nisan.

If DeepSeek’s training data used OpenAI-generated texts without proper authorization, the implications for IP rights would be profound. Such a scenario could represent a violation of OpenAI’s terms of service and potentially its intellectual property. The general lack of transparency in AI training data amplifies the issues, highlighting the need for regulatory frameworks that enforce clear disclosure of training datasets.

Nisan noted the potential impact on the AI industry could be far-reaching.

“The research strongly suggests that transparency and strong IP protections are paramount in the future of AI development and regulation. Regulators are likely to consider requiring companies to disclose detailed information about the datasets and model outputs used in training their models,” he added.

This issue becomes even more concerning in light of the market impact of DeepSeek’s perceived innovation and other questions surrounding its technology. For example, Nvidia reportedly lost significant market value shortly after DeepSeek’s January announcement that its “novel” training and speedy inference methods required only a fraction of the pricey Nvidia AI processors that other generative AI models need.

If evidence were to suggest that the DeepSeek innovation was based on unauthorized use of OpenAI outputs, the financial and legal ramifications could be significant.

While OpenAI itself has faced criticism for training on vast amounts of web content without explicit permission, the potential for DeepSeek to have mirrored OpenAI’s style introduces new complexities. It suggests a potential loophole in current IP frameworks—whereby AI models can effectively “learn” from each other without legal recourse.

From a legal standpoint, the absence of established precedents makes enforcement difficult. While stylistic fingerprinting of AI models can serve as a powerful tool for identifying unauthorized model usage, it is not a "smoking gun" for legal action.

However, these findings could catalyze efforts to define clearer IP rights and regulatory standards for AI training and development.

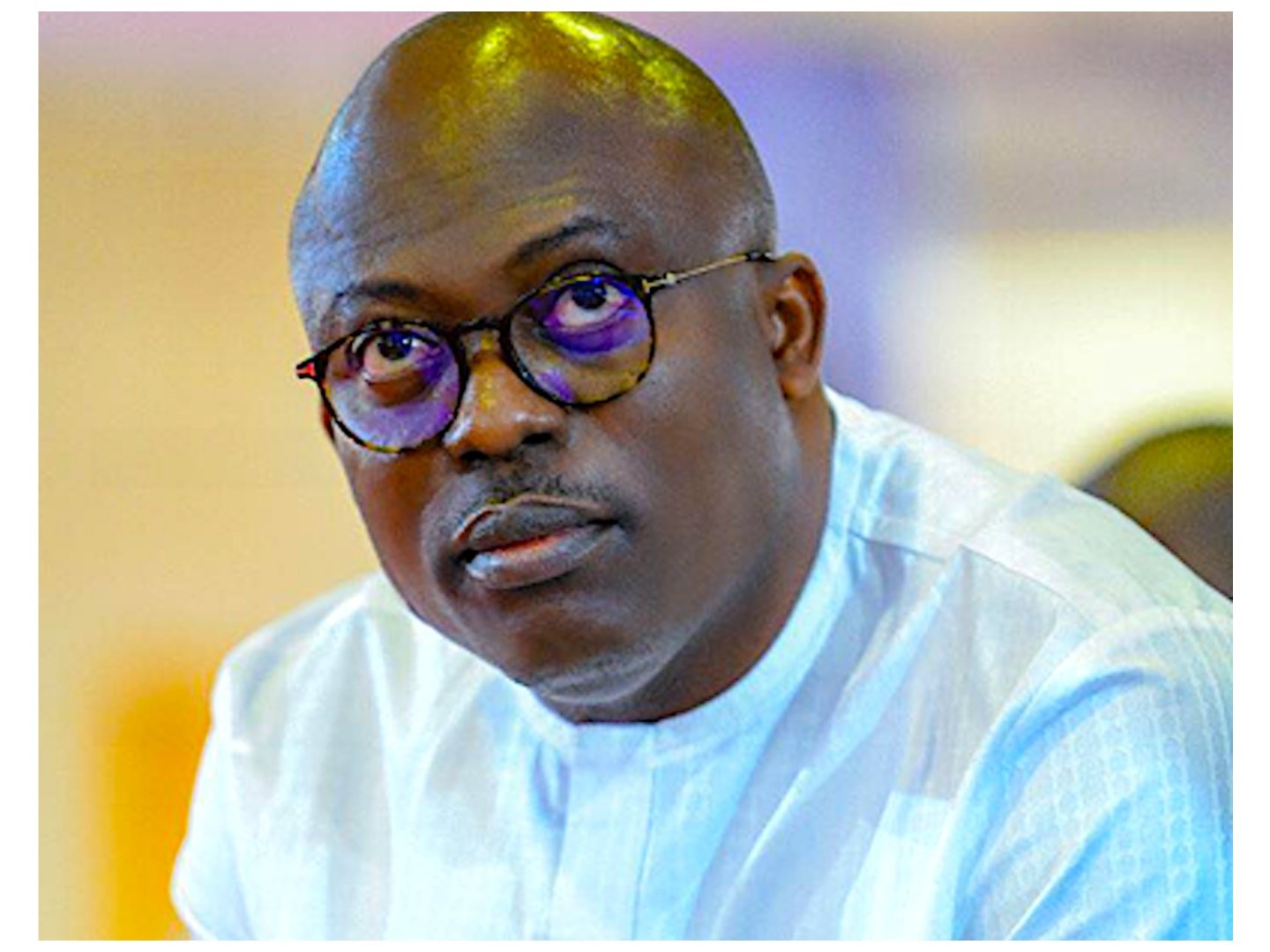

The Copyleaks bar chart shows 74.2% of DeepSeek’s written text was identified as having a stylistic fingerprint similar to OpenAI's models. This similarity, not observed with any other models tested, implies DeepSeek may have used OpenAI’s outputs during its training. But more research is necessary.

Used With Permission: Copyleaks

A counter-argument to the Copyleaks findings is the possibility that AI models could converge stylistically over time, especially if they are trained on overlapping datasets. However, the study’s unanimous ensemble method was specifically designed to detect nuanced stylistic differences between models.

This suggests that the similarity between DeepSeek and OpenAI is not merely a byproduct of dataset overlap but potentially indicative of deeper structural or training similarities.

“Even if large language models draw from overlapping datasets, AI fingerprinting remains crucial. The sheer variety of elements—such as architecture, fine-tuning methods and generation techniques—ensures that each LLM develops a distinct writing style,” Nisan concluded.

As AI continues to permeate nearly every aspect of modern life, the need for clear IP regulations and ethical standards becomes more pressing. Whether DeepSeek is ultimately proven to have leveraged OpenAI’s outputs without authorization remains to be seen.

However, the questions raised by this type of research are likely to endure and could shape the future of AI development and regulation, affecting DeepSeek, ChatGPT and every other player in the space. DeepSeek did not respond to a request for comment by the time of publication.