BMC Medical Education volume 25, Article number: 824 (2025)

Artificial intelligence (AI) is becoming increasingly relevant in healthcare, necessitating healthcare professionals’ proficiency in its use. Medical students and practitioners require fundamental understanding and skills development to manage data, oversee AI tools and make informed decisions based on AI applications. Integrating AI into medical education is essential to meet this demand.

This cross-sectional study aimed to evaluate the level of undergraduate medical students’ readiness for AI as they enter their clinical years at Sultan Qaboos University’s College of Medicine and Health Sciences. The students’ readiness was assessed after being exposed to various AI related topics in several courses in the preclinical phases of the medical curriculum. The Medical Artificial Intelligence Readiness Scale For Medical Students (MAIRS-MS) questionnaire was used as the study instrument.

A total of 84 out of 115 students completed the questionnaire (73.04% response rate). Of these, 45 (53.57%) were female while 39 (46.43%) were male. The cognition section, which evaluated the participants’ cognitive preparedness in terms of knowledge of medical AI terminology, the logic behind AI applications, and data science, received the lowest score (Mean = 3.52). Conversely, the vision section of the questionnaire, which assessed the participants’ capacity to comprehend the limitations and potential of medical AI and to anticipate opportunities and risks, had the highest score (Mean = 3.90). Notably, there were no statistically significant differences in AI competency scores by gender or academic year.

This study’s findings suggest that, while medical students demonstrate a moderate level of AI-readiness as they enter their clinical years, significant gaps remain, particularly in cognitive areas such as understanding AI terminology, logic, and data science. The majority of students use ChatGPT as their AI tool, with a notable difference in attitudes between tech-savvy and non-tech-savvy individuals. Further efforts are needed to improve students’ competency in evaluating AI tools. Medical schools should consider integrating AI into their curricula to enhance students’ preparedness for future medical practice. Assessing students’ readiness for AI in healthcare is crucial for identifying knowledge and skills gaps and guiding future training efforts.

Advancements in artificial intelligence (AI) have resulted in its increased utilization in the healthcare sector, creating a demand for healthcare professionals who possess expertise in AI [1]. As the healthcare industry shifts towards value-based care, experts are exploring how technology and AI solutions can contribute to delivering high-quality care at reduced costs [2]. This means that future medical practitioners will need to adapt to significant changes in healthcare due to the widespread implementation of innovative technologies and AI [3]. Therefore, they should have a fundamental understanding of AI to identify and evaluate its growing potential. They will need to develop skills to manage data, oversee AI tools, and make informed decisions based on the output of AI applications [4, 5]. To meet this need, it is anticipated that AI will become an integral part of medical education in the future [6].

Current medical students, residents and physicians lack proficiency in emerging technologies such as artificial intelligence (AI) [7], which is partly attributed to traditional medical education methods that have not kept up with changes in the field. There is also reported resistance among physicians to using AI in healthcare, possibly due to a lack of formal training in this area [8].

Medical education typically focuses on patient care, communication skills, and systems-based practice after a core phase of preclinical lectures [9]. However, recent advances in AI, the public accessibility and performance of AI tools, and particularly ChatGPT’s strong performance on USMLE medical exams without prior medical training, are expected to hasten the integration of AI and technology-oriented topics into the medical curriculum [10]. In fact, physicians and medical students themselves have emphasised the need for a structured training program on AI applications during medical education [11]. Overall, there is a growing recognition of the need to better prepare medical students and residents for the use of AI in healthcare [10].

There is limited research on the effectiveness of AI educational interventions, as most existing literature only describes programs, proposes content modifications, or makes recommendations [12]. While experts agree that understanding the uses, applications, disadvantages and limitations of AI is important, there is no consensus on how much AI should be introduced into the medical curriculum [13]. Instead of focusing solely on introducing sophisticated technical tools, there is a strong argument that the goal should be to enhance readiness to work with AI technologies and evaluate future innovations [14]. Assessing students’ readiness for AI in healthcare is crucial for identifying knowledge and skills gaps and guiding future training efforts [13].

At Sultan Qaboos University (SQU), medical students progress from the preclinical phases (during which basic sciences, such as Human Anatomy and Physiology, are taught in a system-based course format) into the clinical phase, during which they have more direct contact with patients in teaching hospitals and connected clinics. This study aims to evaluate the level of medical students’ readiness for AI prior to their moving into the clinical phase, after being exposed to AI-related topics in several courses in the preclinical phase of the curriculum.

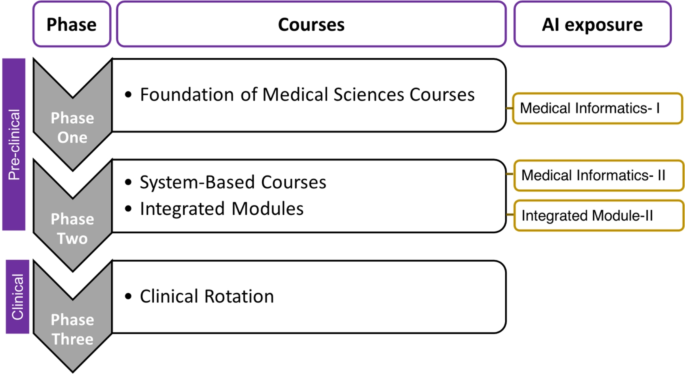

This study is a cross-sectional analysis of undergraduate medical students enrolled at Sultan Qaboos University’s College of Medicine and Health Sciences. The curriculum at SQU is structured around a student-centered, integrated approach that spans six years, consisting of three phases. Phase one (two semesters) covers foundational medical science, phase two (four semesters) is organised around integrated organ-system courses, and phase three (three years) involves clinical clerkships leading up to the MD exam. See Fig. 1.

Our students receive some early exposure to concepts of AI. In phases one and two, they study Medical Informatics I and II, which introduce them to the basic terminology of AI, and also to the use of AI in Clinical Decision Support Systems (CDSSs). Some of the students investigate CDSSs in more detail and give class presentations on them (Fig. 1).

During phase two, students take an Integrated Module (IM-II) course that combines basic science with clinical cases, covering pathophysiology, examination strategies, interpretation of laboratory and radiological investigations, management plans, and ethical and socio-cultural issues. For each clinical scenario discussed in this course, students are exposed to some AI applications and are asked to evaluate the tools, their limitations and related ethical considerations (Fig. 1; Table 1).

Although we cannot ascribe a direct cause-and-effect relationship between this exposure and any readiness measurement, it would be reasonable to expect our students to show a greater level of readiness than students whose courses did not include such early exposure.

During each session, we incorporated a variety of learning methods to enhance student participation and promote their self-directed learning abilities. In each session, we encouraged students to engage in discussions, share their perspectives, work in teams, and conduct literature searches to answer questions. Table 2 provides examples of the topics covered and the corresponding learning activities used in one of the courses, the Integrated Module-II.

The students’ readiness for AI in healthcare was evaluated through the use of the Medical Artificial Intelligence Readiness Scale For Medical Students (MAIRS-MS) questionnaire designed to evaluate medical students’ perception of their AI readiness [13]. The MAIRS-MS questionnaire has been validated, psychometric parameters have been evaluated, and written permission was obtained from the designers to use the questionnaire [13]. It is composed of five sections: demographics, cognition (eight items), ability (eight items), vision (three items), ethics (three items).

For our study, the questionnaire was administered via Google Forms, with the original wording of the entire questionnaire and the addition of two questions related to previous exposure to AI in other courses. The link to the questionnaire was distributed to the students through their institutional email addresses at the end of semester three in phase two, after they had completed the Integrated Module-II course. The questionnaire remained open from November 27th, 2022 to December 4th, 2022. Informed consent was obtained from all students before their participation in the study.

The raw data were entered into an Excel (Version 365) spreadsheet, and descriptive statistics were obtained. Mann-Whitney tests were conducted to test for statistical differences in the results by gender and by prior exposure to AI. Differences were considered statistically significant at P < 0.05. Cronbach’s alpha was calculated to test the results for internal consistency, for each category and overall.
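For readers who wish to reproduce this kind of group comparison outside a spreadsheet, the following is a minimal sketch of a two-sided Mann-Whitney U test in standard-library Python. It is not the authors' procedure (the analysis above was done in Excel). It assumes the normal approximation with a tie correction and no continuity correction, which is a common choice for Likert data with many ties. The function names and the sample responses are hypothetical and purely illustrative.

```python
import math
from statistics import NormalDist


def rank_with_ties(values):
    """Return 1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected).

    Returns (U statistic for x, two-sided p-value).
    """
    n1, n2 = len(x), len(y)
    combined = list(x) + list(y)
    ranks = rank_with_ties(combined)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    # Tie correction: sum of (t^3 - t) over each group of tied values.
    counts = {}
    for value in combined:
        counts[value] = counts.get(value, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    variance_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance_u == 0:
        return u1, 1.0  # all observations identical: no evidence of difference
    z = (u1 - n1 * n2 / 2) / math.sqrt(variance_u)
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return u1, p_value


# Hypothetical 5-point Likert responses for one item, split by group.
group_a = [4, 4, 3, 5, 4, 3, 4, 5]
group_b = [3, 3, 4, 2, 3, 4, 3, 3]
u_stat, p = mann_whitney_u(group_a, group_b)
print(f"U = {u_stat}, p = {p:.4f}, significant at 0.05: {p < 0.05}")
```

With small samples an exact test may be preferable to the normal approximation; the sketch is intended only to make the decision rule (P < 0.05) concrete.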

A total of 84 out of 115 students completed the questionnaire, giving a response rate of 73.04%. Of the 84 students, 45 (53.57%) identified as female and 39 (46.43%) identified as male. Table 3 shows the results of the responses to the questions in the MAIRS-MS questionnaire.
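The reported percentages follow directly from the counts given above. As a quick check, the short sketch below recomputes them; the helper name `percentage` is our own and not part of the study.

```python
def percentage(part, whole):
    """Return part/whole as a percentage rounded to two decimal places."""
    return round(100 * part / whole, 2)


invited = 115    # students who received the questionnaire link
completed = 84   # students who completed the questionnaire
female = 45
male = 39

print(percentage(completed, invited))  # 73.04 (response rate)
print(percentage(female, completed))   # 53.57
print(percentage(male, completed))     # 46.43
```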

The students’ readiness in the different sections was comparable, with mean scores of 3.5–3.9. The highest was for the vision section (3.90), followed by the ethics section (3.80).

Table 4 shows the results of the Cronbach’s alpha tests, indicating acceptable internal consistency.
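As an illustration of how such a coefficient is obtained, the sketch below computes Cronbach's alpha from a respondents-by-items score matrix using the standard formula, alpha = k/(k-1) × (1 − sum of item variances / variance of total scores). This is a minimal sketch, not the authors' spreadsheet calculation; it assumes sample variances throughout, and the function name and example data are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows (one row per respondent).

    Each row holds that respondent's scores on the same k items.
    Sample variance (n - 1 denominator) is used for items and totals.
    """
    k = len(responses[0])
    item_columns = list(zip(*responses))
    item_variance_sum = sum(variance(column) for column in item_columns)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical responses from five students to a three-item section.
vision_section = [
    [4, 4, 5],
    [3, 4, 4],
    [5, 5, 5],
    [2, 3, 3],
    [4, 3, 4],
]
print(f"alpha = {cronbach_alpha(vision_section):.2f}")
```

Alpha rises as items co-vary more strongly; it describes the consistency of responses within a section and does not, on its own, establish that the section measures the intended construct.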

These results indicate that the questionnaire was internally reliable in this sample, which supports its use for measuring AI readiness among medical students.

Across all items, there were no statistically significant differences based on prior exposure to AI, and only one item (Cognition: “I can define the basic concepts and terminology of AI.”) showed a statistically significant difference by gender (female = 3.75; male = 3.33; P = 0.0062).

The aim of this study was to assess the preparedness of medical students to adopt Artificial Intelligence (AI) in healthcare as they enter their clinical years. The results indicate that the students’ readiness scores were greater than 3 in all four sections, indicating a moderate to high level of preparedness. The cognition section, which evaluated the participants’ cognitive preparedness in terms of knowledge of AI terminology, the logic behind AI applications, and data science, received the lowest scores. On the other hand, the vision section, which mainly tested the participants’ ability to comprehend the limitations and potential of medical AI, anticipate opportunities and risks, and generate ideas, exhibited the highest level of preparedness. Potential reasons for the lower cognition scores could include the lack of sufficient exposure to technical aspects of AI during preclinical training, limited emphasis on data science and algorithmic logic in medical curricula, and the abstract nature of AI concepts, which may be challenging for medical students.

An unexpected finding was that only 80% of the students reported having prior exposure to AI, despite the fact that all students had taken Medical Informatics I and II, which cover AI topics. This is very possibly because the survey coincidentally ran over the period in which ChatGPT 3.5 was publicly released. Because of its dramatic impact, many people began associating AI solely with Large Language Models (LLMs). It is likely that some students responded to the survey based on their knowledge of ChatGPT, rather than their formal AI coursework. Since the survey was already running, adjustments could not be made. Further research on this topic would be required.

Overall, the readiness scores above the mid-point of 3 indicate that SQU medical students demonstrate moderate AI preparedness. As the MAIRS-MS questionnaire is relatively new, we could find only two other studies (apart from the original work) that utilised it and reported on the individual Likert scale scores [15]. One study [15] measured the AI-readiness of medical and dental professionals (not students) in Saudi Arabia in June 2022 (barely 5 months before ours). Their scores were almost all below 3, and below the scores in our study. The other [16] was a small pre-post study among German medical students. In their pre-test, apart from one item (“I find it valuable to use AI for education, service and research purposes.”), which scored identically to ours (3.92), all of their scores were below ours. This is not entirely surprising, however, because in both cases the participants had not received prior AI training, and the literature cited in our introduction indicates that current health professionals are generally lacking in AI knowledge and skills. Because we did not perform a pre- and post-test, we do not know the exact impact of our training; our figures do, however, give us some confidence in the readiness of our students.

Despite the growing literature advocating for AI integration into medical education, we have yet to find a comprehensive and widely applicable framework [5, 12, 17]. Furthermore, current curriculum recommendations lack specific learning outcomes and are not based on any particular education theory [17]. Therefore, there is a need to establish standardised core competencies to enable medical educators to design AI curricula with specific learning outcomes that are based on a particular education theory and aligned with the needs of healthcare professionals and the healthcare system [14, 17].

Furthermore, continued evaluation of the students’ needs, perceptions and readiness is crucial for adjusting the curriculum to their needs, reaching desirable learning outcomes, and taking appropriate educational theory into account. For example, medical students expressed a desire to gain specific knowledge and skills on many more topics related to AI, such as applications for assisting clinical decision-making and reducing medical errors, AI-assisted emergency response, and AI-assisted risk analysis for diseases [11, 12].

In light of the already dense medical curriculum, experts have proposed the integration of AI into various levels of medical education through a longitudinal model, aimed at enhancing the acquisition of knowledge and skills [12, 18]. Medical educators advocate for diverse teaching methods to educate healthcare professionals on AI skills, including covering the theory and terminology of AI, and providing experiential learning opportunities for students to engage with AI tools and receive feedback [18].

In this study, students were introduced to AI theory and terminology and were also exposed to practical exercises in various clinical scenarios throughout their preclinical years. Different teaching activities were employed, such as discussions and debates on ethical aspects of AI use, including autonomy and confidentiality, and evaluations of the potential of AI and machine learning in addressing healthcare challenges. The students were also observed engaging in evaluations of AI tools discussed in the media or literature, where they could make informed decisions by assessing critical factors such as the accuracy, the nature of the data used for training and testing, and the sources of funding, among other relevant factors.

However, it should be noted that the development of a comprehensive AI curriculum is still a work in progress. Experts are calling for evidence-informed curricula with planned evaluations to allow for iterative refinements based on student and faculty feedback. They also emphasize the need for evaluating the interventions, such as assessing attitudes and readiness at different curriculum levels. This is because there is a lack of published literature on the evaluation of implemented programs in medical education, which could provide valuable guidance for program improvements. Therefore, continuous evaluation and refinement of AI curricula in medical education are needed to ensure their effectiveness and impact.

As more AI-driven healthcare services are integrated into healthcare systems, there is a concern that a conflict might arise between technology-enabled care models and patient-centered care models in healthcare. This conflict is mainly driven by a lack of formal training and low familiarity with AI and emerging technology among healthcare professionals. It is believed that with such skills and proficiencies, physicians will be able to identify the AI tools that benefit patient care. Furthermore, medical education should enhance competencies such as effective communication, leadership, and emotional intelligence, which will be increasingly important given that AI-based systems cannot fully account for all facets of a patient’s physical and emotional state [19, 20].

In summary, introducing AI into medical education requires careful consideration of what to teach and how to teach it [21]. To overcome challenges, it is crucial to establish a standardized framework of essential competencies for AI training and incorporate AI training into lessons on clinical reasoning and other fundamental activities. Additionally, a range of educational approaches and hands-on learning opportunities should be implemented to teach various AI skills, ensuring that healthcare professionals are proficient in utilizing AI in their practice.

While this study provides insights into medical students’ AI-readiness as they enter clinical years, there are some limitations to consider. Firstly, the study was conducted in a single institution, which limits the generalizability of the findings to other undergraduate medical programs with different curricula and resources. Secondly, there was no pre-assessment of students’ AI readiness before their exposure to AI topics in the preclinical phases, but that is not a major concern, because the aim of this study is not course evaluation, but rather to determine our students’ perceptions of their AI-readiness as they enter their clinical years. The results indicate that while students demonstrate some readiness for integrating AI into clinical practice, significant gaps remain, particularly in understanding AI terminology and logic, suggesting the need for further training and objective assessments to better prepare them for real-world applications. Thirdly, while using a new scale (the MAIRS-MS questionnaire) increases the value of this paper, its novelty means that we do not have sufficient literature for comparison. As more institutions use the scale, and this paper becomes a point of comparison, the significance of our results will become clearer. Additionally, the study relied on self-reported data from students, which may be subject to social desirability and selection bias; however, other studies using the scale would be subject to the same limitation, so comparisons with them remain meaningful.

Incorporating AI topics into medical education can enhance medical students’ readiness to adopt AI in healthcare. However, the development of a standardized and evidence-informed AI curriculum with specific learning outcomes and diverse teaching methods is essential to ensure effectiveness and impact. Continuous evaluation and refinement of AI curricula are also needed to meet the evolving needs of healthcare professionals and the healthcare system. Furthermore, the development of AI skills and proficiencies should be balanced with the enhancement of essential competencies such as effective communication, leadership, and emotional intelligence, to ensure patient-centred care in the era of AI-driven healthcare.

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

No funding was received for this manuscript.

This study was performed in accordance with the Declaration of Helsinki. It was approved by the Ethics Committee of the College of Medicine and Health Sciences at Sultan Qaboos University (SQU-EC/ 287/2022). Informed consent was obtained from all students, with a detailed explanation of the study, before their participation.

The authors declare no competing interests.

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

AlZaabi, A., Masters, K. Assessing medical students’ readiness for artificial intelligence after pre-clinical training. BMC Med Educ 25, 824 (2025). https://doi.org/10.1186/s12909-025-07008-x