The study used controlled puzzle environments, such as the popular Tower of Hanoi puzzle, to systematically test the reasoning abilities of large reasoning models, including OpenAI's o3 Mini, DeepSeek's R1, Anthropic's Claude 3.7 Sonnet and Google's Gemini Flash, across varying levels of complexity. The findings show that while large reasoning and language models may handle simple or moderately complex tasks, they fail completely on high-complexity problems, even when they have sufficient computational resources.

Gary Marcus, a cognitive scientist and a known sceptic of the claims surrounding large language models, views Apple's work as providing compelling empirical evidence that today's models primarily repeat patterns learned during training from vast datasets without genuine understanding or true reasoning capabilities. "If you can't use a billion-dollar AI system to solve a problem that Herb Simon (one of the actual godfathers of AI, current hype aside) solved with AI in 1957, and that first semester AI students solve routinely, the chances that models like Claude or o3 are going to reach AGI seem truly remote," Marcus wrote in his blog.

Marcus' arguments echo earlier comments by Meta's chief AI scientist Yann LeCun, who has argued that current AI systems are mainly sophisticated pattern recognition tools rather than true thinkers.

The release of Apple's paper ignited a polarised debate across the broader AI community, with many panning the design of the study rather than its findings.

A published critique of the paper by researchers from Anthropic and San Francisco-based Open Philanthropy said the study has flaws in its experimental design, chiefly that it overlooks the models' output limits. In an alternative demonstration, the researchers tested the models on the same problems but allowed them to use code, resulting in high accuracy across all the tested models. The study's failure to account for output limits, and its decision not to let the models write code, have also been highlighted by other AI researchers and commentators, including Matthew Berman, a popular AI commentator.

"SOTA models failed The Tower of Hanoi puzzle at a complexity threshold of >8 discs when using natural language alone to solve it. However, ask it to write code to solve it, and it flawlessly does up to seemingly unlimited complexity," Berman wrote in a post on X (formerly Twitter).
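The output-limit argument is easier to see with the puzzle's arithmetic in hand. The shortest solution for n discs takes 2^n - 1 moves, so 8 discs need 255 moves, 10 need 1,023 and 20 need 1,048,575, and a model asked to write out every move in plain text soon has to produce a very long answer. A program that generates those moves, by contrast, stays a few lines long at any size. The Python sketch below is only an illustration of that contrast; it is not the code used in Apple's study, in the published critique or by Berman, and the names `hanoi` and `is_valid_solution` are hypothetical.

```python
from typing import Iterable, Iterator, Tuple

Move = Tuple[str, str]  # (peg the disc leaves, peg the disc lands on)


def hanoi(n: int, source: str = "A", target: str = "C", spare: str = "B") -> Iterator[Move]:
    """Yield the moves that transfer n discs from source to target."""
    if n == 0:
        return
    # Move the top n-1 discs out of the way, onto the spare peg.
    yield from hanoi(n - 1, source, spare, target)
    # Move the largest remaining disc to its destination.
    yield (source, target)
    # Move the n-1 discs from the spare peg onto the largest disc.
    yield from hanoi(n - 1, spare, target, source)


def is_valid_solution(n: int, moves: Iterable[Move]) -> bool:
    """Replay the moves and check that every step is legal and the puzzle ends solved."""
    pegs = {"A": list(range(n, 0, -1)), "B": [], "C": []}
    for src, dst in moves:
        if not pegs[src]:
            return False  # nothing to pick up
        disc = pegs[src].pop()
        if pegs[dst] and pegs[dst][-1] < disc:
            return False  # a larger disc cannot sit on a smaller one
        pegs[dst].append(disc)
    return pegs["C"] == list(range(n, 0, -1))


if __name__ == "__main__":
    for discs in (3, 8, 10, 20):
        move_count = sum(1 for _ in hanoi(discs))
        print(f"{discs} discs: {move_count} moves")
```

The solver itself does not change as the puzzle grows; only the length of the move list it produces does, which is the distinction Berman's post draws.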

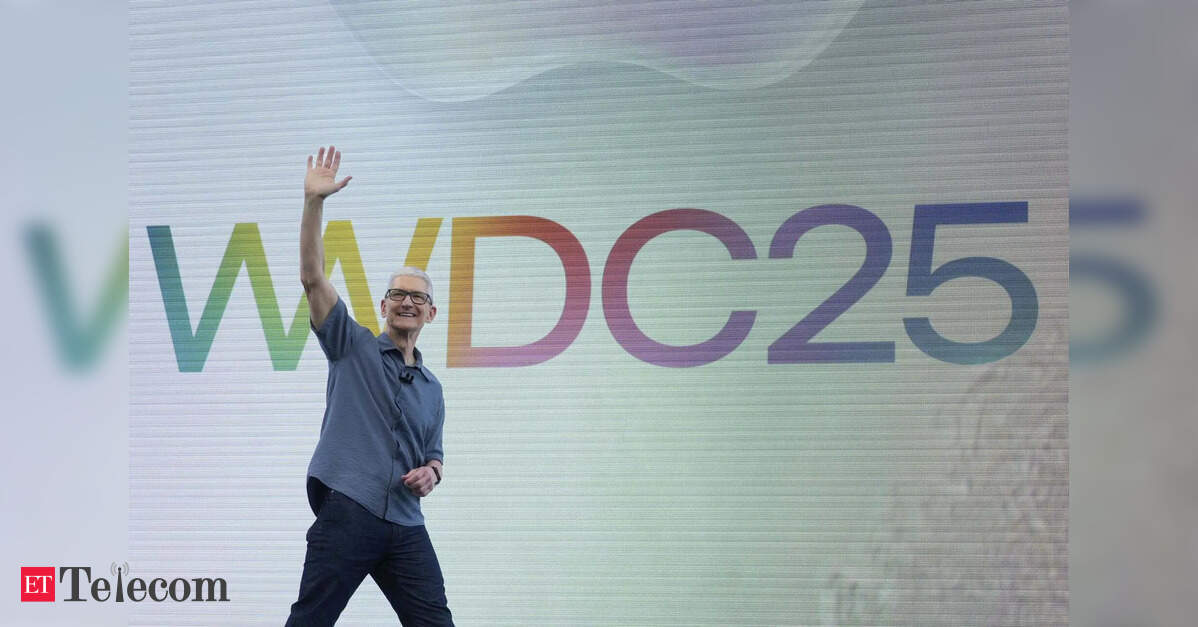

The study highlights Apple's more cautious approach to AI compared to rivals like Google and Samsung, which have aggressively integrated AI into their products. Apple's research explains its hesitancy to fully commit to AI, contrasting with the industry's prevailing narrative of rapid progress. Many questioned the timing of the study's release, which coincided with Apple's annual WWDC event, where it announces its next software updates.

Chatter across online forums said the study was more about managing expectations in light of Apple's own struggles with AI.

That said, practitioners and business users argue that the findings do not change the immediate utility of AI tools for everyday applications.