Physical And Agentic AI Is Coming

Some interesting questions are coming up in the world of artificial intelligence that have to do with the combination of physical environments and agentic AI.

First of all, that term, agentic AI, is only a couple of years old. But it’s taking hold in a big way – in enterprise, and government, and elsewhere.

The key is this, though – if the AI agents can do things, how do they have the access to do those things?

If it’s digital tasks, the LLM has to be supported by APIs and connective tissue, like the Model Context Protocol (MCP) or something similar.

But what if it’s physical?

In a recent panel at Imagination in Action in April, my colleague Daniela Rus, director of MIT CSAIL, talked to a number of experts about how this would work in both the public and private sectors.

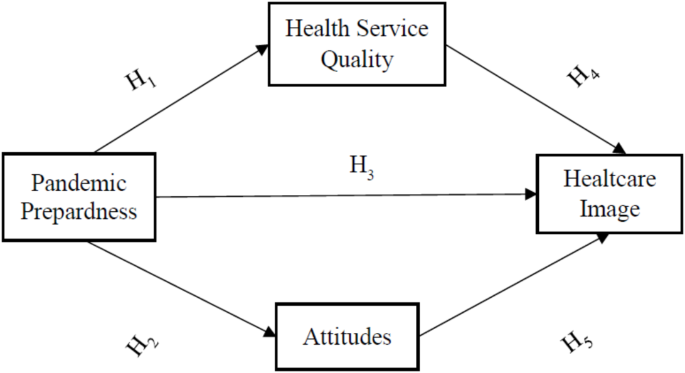

“The bridge is when we can take AI's ability to understand text, images and other online data about the physical world to make real-world machines intelligent,” Rus said. “And now, if you can get a machine to understand a high-level goal, break it down into sub-components, and execute some of the sub-goals by itself, you end up with agentic AI.”

So what did panelists center on? Here are a few major ideas that came out of the discussion on how AI can work more humanly in the physical world where humans live.

In exploring what makes humans different from machines, panelists raised the idea that people act on a personal basis, which sets them apart from the herd. So AI will have to learn not to follow a consensus-based model all of the time. That is a key difference: what you might call a “foible” is part of what makes humans special, and in the enterprise world it may not be a foible at all, but a value add.

“What you do not want is consensus regression to the mean information, like generally accepted ways of doing things,” said panelist Emrecan Dogan. “This is not how, as humans, we create value. We create value by taking a subjective approach, taking the path that is very personal, very subjective, very idiosyncratic. We are not always right, but when we are right, this is how we create value.”

As for government, panelist Col. Tucker Hamilton talked about electronic warfare, and stressed the importance of a human in the loop.

“I think we want to embed (HITL) so that a human is still in control,” he said. “I think we need… explainability, traceability, and that goes along with governability as well. And I think we want to be able to favor adaptability to perfection.”

“You have to reason, you have to think and understand,” added panelist Jonas Diezun.

Another way to think about this is that the programs need just the right amount of deterministic guidance.

“They don't always repeat,” Dogan said of these tools. “They don't behave exactly the same way the second, third, fourth time you run it. So I think the big (idea) is the right blend of determinism that you can embed along with the stochasticity. So I think the truly powerful agents will convey some expression of deterministic behavior, but then the stochastic upside of AI models.”
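To make that blend concrete, here is a minimal sketch of one way it could look in practice. It is not something the panel presented: the function names, the speed limit and the random "model" are all hypothetical stand-ins. The idea is simply that a stochastic component proposes an action, and a fixed rule decides what is actually allowed through.

```python
import random

# Hypothetical limit; a real system would take this from its own safety spec.
MAX_SPEED = 1.0  # metres per second


def propose_speed(rng: random.Random) -> float:
    """Stand-in for a stochastic model: a different suggestion on each call."""
    return rng.uniform(-0.5, 2.0)


def guarded_speed(rng: random.Random) -> float:
    """Deterministic wrapper: the result always lies in [0, MAX_SPEED]."""
    proposal = propose_speed(rng)
    return min(max(proposal, 0.0), MAX_SPEED)


rng = random.Random(0)  # seeded only so the run is repeatable
speeds = [guarded_speed(rng) for _ in range(5)]
print(speeds)
```

The proposals vary from run to run, but the clamp behaves the same way every time, which is roughly the split Dogan describes.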

Some other components of this have to do, simply, with infrastructure.

“Sensors, we're gathering information off of a sensor multi-modal (program), like sensor gathering,” Hamilton said. “How do we summarize that information? How do we make sure that one sensor is fused with another sensor? How do we have pipelines that we can get that information to, in order to have someone just assess that, like sensor information, let alone how do we adopt flight autonomy?”

In other words, all of those real-world pieces have to be connected the right way for the system to work in physical space, and not just digitally.
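As a rough illustration of what fusing one sensor with another can mean, the sketch below combines two noisy readings of the same quantity with an inverse-variance weighted average, a standard textbook technique. It is an assumption chosen for illustration, not a method Hamilton described, and the readings and variances are made up.

```python
def fuse(readings):
    """Inverse-variance weighted mean of (value, variance) pairs.

    Less noisy sensors (smaller variance) get more weight.
    Returns the fused value and the variance of the fused estimate.
    """
    weights = [1.0 / variance for _, variance in readings]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, readings)) / total
    return value, 1.0 / total


# Hypothetical readings: (measured value, variance of that sensor)
fused_value, fused_variance = fuse([(10.0, 4.0), (12.0, 1.0)])
print(fused_value, fused_variance)
```

The fused estimate lands closer to the more reliable sensor, and its variance is lower than either input's, which is the basic payoff of combining sensors rather than reading them one at a time.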

Finally, Rus asked each panelist for a timeline for AI taking over most human tasks. Each gave two numbers: the first for when they think AI can take on simple tasks, and the second a projection of when it would take over more complex ones. The verdict?

“Quarters, not years.”

I thought all of this was very instructive in showing some of what we have to contend with as we anticipate the rest of the AI revolution.

It’s been a long time coming, but the exponential curve of the technology is finally here, and it is likely to be integrated into our worlds quickly, almost suddenly. Job displacement is an enormous concern. So is the potential for runaway systems that could do more harm than good. Let’s continue to be vigilant as 2025 rolls on.