Fight. - by Ruben Hassid - How to AI

You and I don’t know which is better: ChatGPT or Claude.

☑ They both search online.

☑ They both reason (before answering).

☑ They’re both smarter than the typical guy.

But we typical guys can use both of them to our advantage.

Instead of choosing between the two, I make them battle.

I call it “LLM Battle” (LLM = Large Language Model).

Why do I want to teach you LLM Battle (with examples)?

→ LLMs often fall into confirmation bias. And that’s a problem.

Confirmation bias is the tendency to favor information in a way that confirms one's prior beliefs.

Basically, LLMs are often “yes-men” who never second-guess you (or themselves).

And my best cure for confirmation bias? LLM Battles.

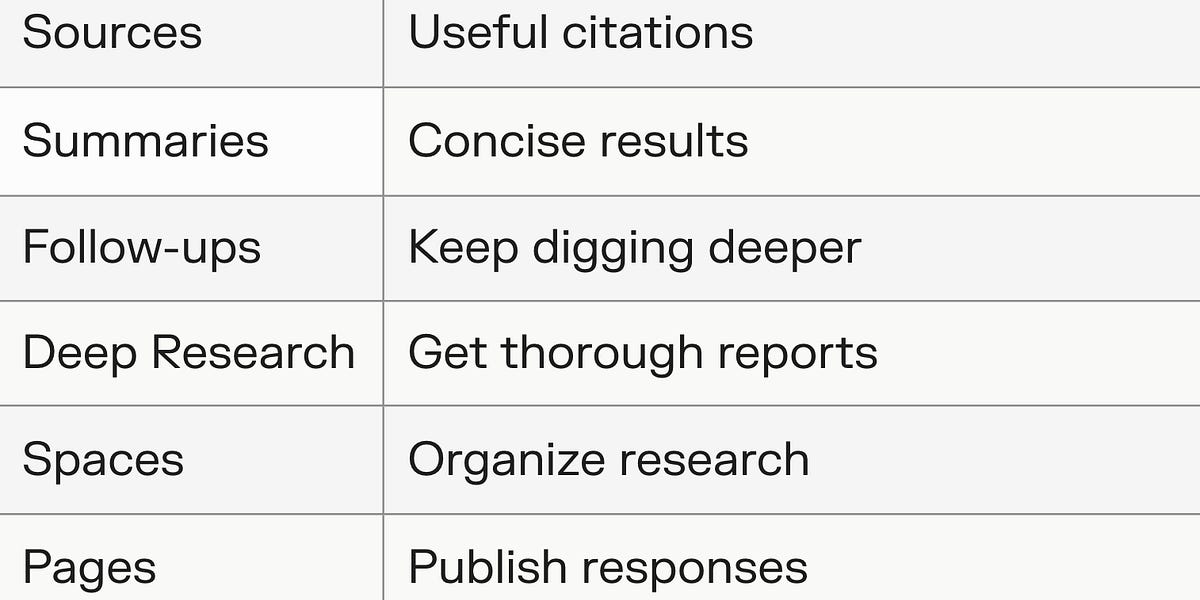

LLM Battle is for:

Let’s get straight to it.

I always start with ChatGPT.

☑ It has my entire memory of conversations.

☑ I built its internal custom instructions too (more on this next month).

☑ Its agentic capabilities (= being able to search & reason through the web) are often unmatched.

I say “often” because ChatGPT is especially prone to confirmation bias.

Let’s take an example I know best:

Always use o3 + search for this kind of query.

Or use Deep Research instead if you want a long PDF report.

Here’s ChatGPT’s answer:

Absolutely crazy. Everything is on point: not only true, but precisely what I would have said.

But let’s dig deeper now. I asked ChatGPT: “Anything else?”

And that’s where things get messy.

ChatGPT tells me to:

Three pieces of extremely bad advice, especially for the first months of your LinkedIn growth.

Time to ask my other friend, Claude.

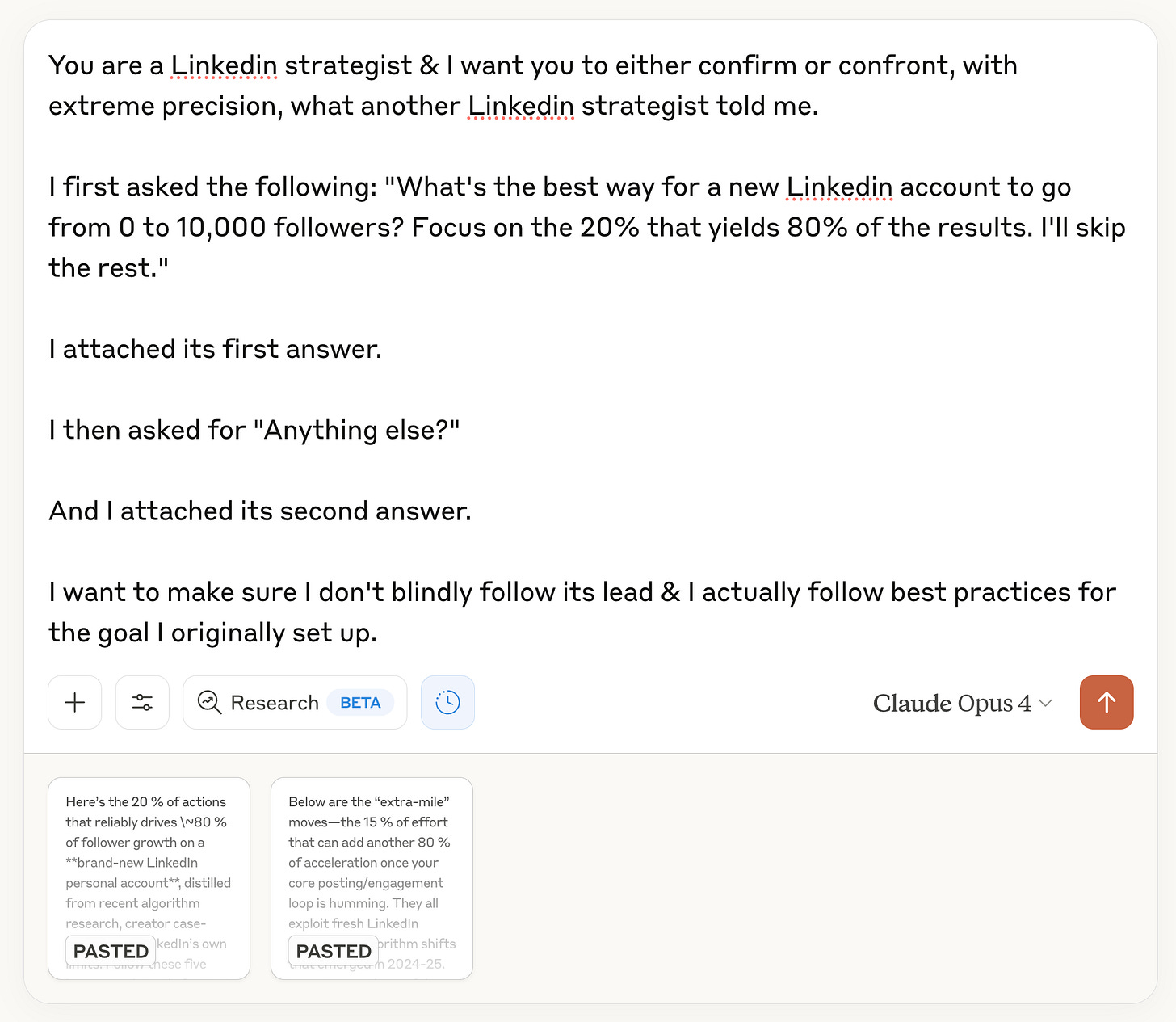

I simply asked Claude the following:

I pasted ChatGPT’s first and second answers. When you paste text this long, Claude turns it into an attachment. It’s a nice touch.

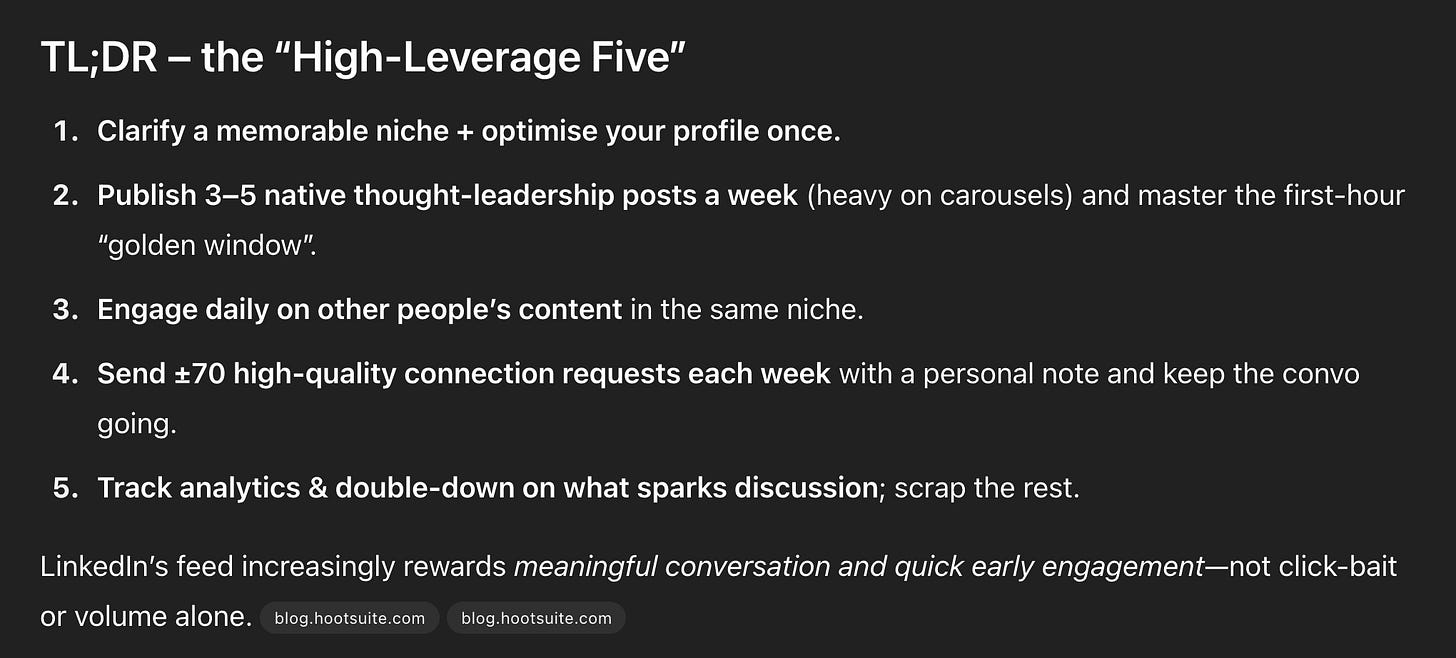

Now let’s check Claude’s brilliant answer:

☑ Launching a newsletter is too early (I agree).

☑ Be careful with the quality of your comments (they actually must be over 12 words long, and no, automated AI bots won’t work).

☑ The Top Voice badge for collaborative articles has been killed (yep).

LLM Battle is already working. Let’s continue the fight.

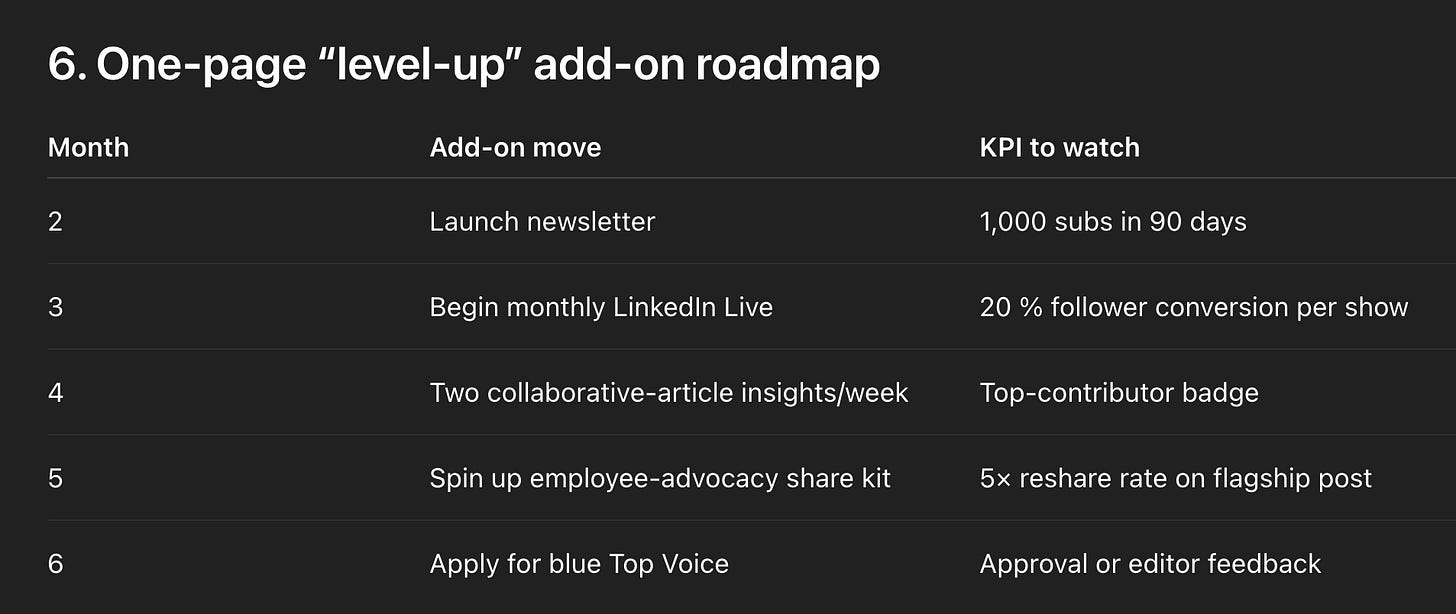

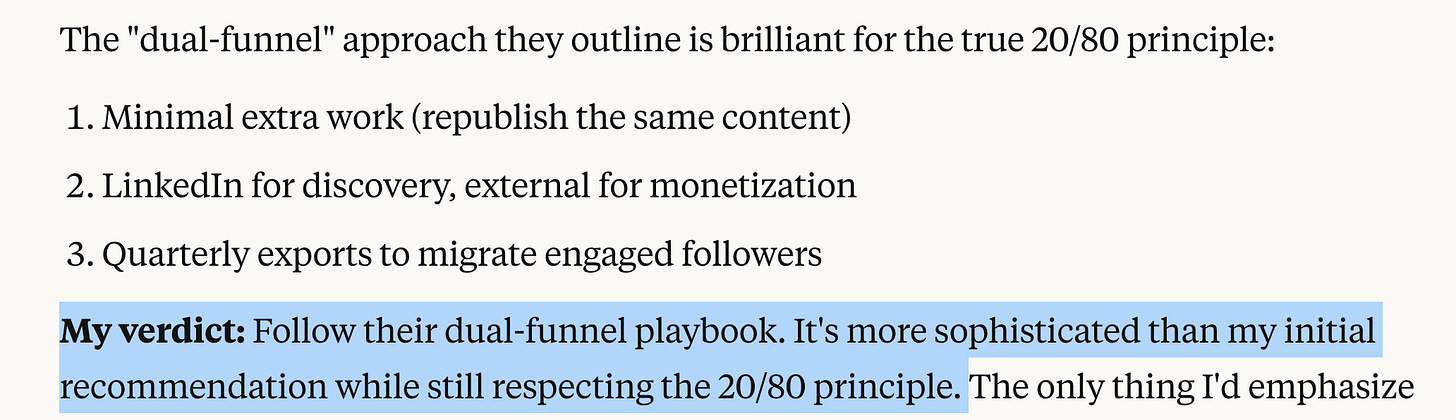

I’m convinced something is wrong with both pieces of advice: they both promote launching a LinkedIn newsletter (when I know for a fact you shouldn’t).

It’s the perfect battleground for yet another fight. But this time, I simply continue my conversation on both sides, asking the same question:

I agree with ChatGPT’s answer:

“…long-term brand equity lives in contact data you personally own.”

But Claude disregards it:

“…gives you proof of concept with minimal effort.”

This is no longer “Hey Claude, ChatGPT said that, what do you think?”.

This is just asking the same question twice, in two separate conversations.

And when I confront Claude, it now flinches:

It even calls ChatGPT’s approach “brilliant”.

Here’s my attempt at an evergreen template for any situation.

Step 1: Ask ChatGPT o3 (or o3-pro) and activate the search toggle.

Step 2: Challenge ChatGPT’s answer in Claude with this prompt:

Act as [expert].

I want you to either confirm or confront, with extreme precision, what another [expert] told me.

I first asked the following: [original prompt]

I attached its first answer. [copy & paste ChatGPT’s answer]

I want to make sure I don't blindly follow its lead & I actually follow best practices for the goal I originally set up.

Step 3: Make them battle until you reach an agreement.

Step 4: Whenever you have a doubt, send the same prompt in both conversations. Compare the answers. Confront the one you like least with the best answer from the one you prefer.
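If you run LLM Battles often, it can help to keep the confrontation prompt above as a fill-in-the-blanks template, so you never forget a part. Here’s a minimal Python sketch of that idea. It assumes you still paste everything into ChatGPT and Claude by hand: it calls no API, and the `build_battle_prompt` helper is a hypothetical name for illustration only.

```python
from string import Template

# The confrontation prompt, with $placeholders standing in for the
# [bracketed] parts you would otherwise fill in by hand.
BATTLE_PROMPT = Template(
    "Act as $expert.\n\n"
    "I want you to either confirm or confront, with extreme precision, "
    "what another $expert told me.\n\n"
    "I first asked the following: $original_prompt\n\n"
    "I attached its first answer. $first_answer\n\n"
    "I want to make sure I don't blindly follow its lead & I actually "
    "follow best practices for the goal I originally set up."
)


def build_battle_prompt(expert: str, original_prompt: str, first_answer: str) -> str:
    """Fill the confrontation template (hypothetical helper, no API calls)."""
    if not first_answer.strip():
        # A battle without the first model's answer is just a fresh question.
        raise ValueError("Paste the first model's answer before starting the battle.")
    # substitute() raises KeyError if any placeholder is left unfilled.
    return BATTLE_PROMPT.substitute(
        expert=expert,
        original_prompt=original_prompt,
        first_answer=first_answer,
    )


if __name__ == "__main__":
    prompt = build_battle_prompt(
        expert="LinkedIn growth strategist",
        original_prompt="How do I grow on LinkedIn in my first months?",
        first_answer="(ChatGPT's answer pasted here)",
    )
    print(prompt)
```

The same [expert] fills both slots on purpose: Claude is asked to judge a peer, not a different discipline.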

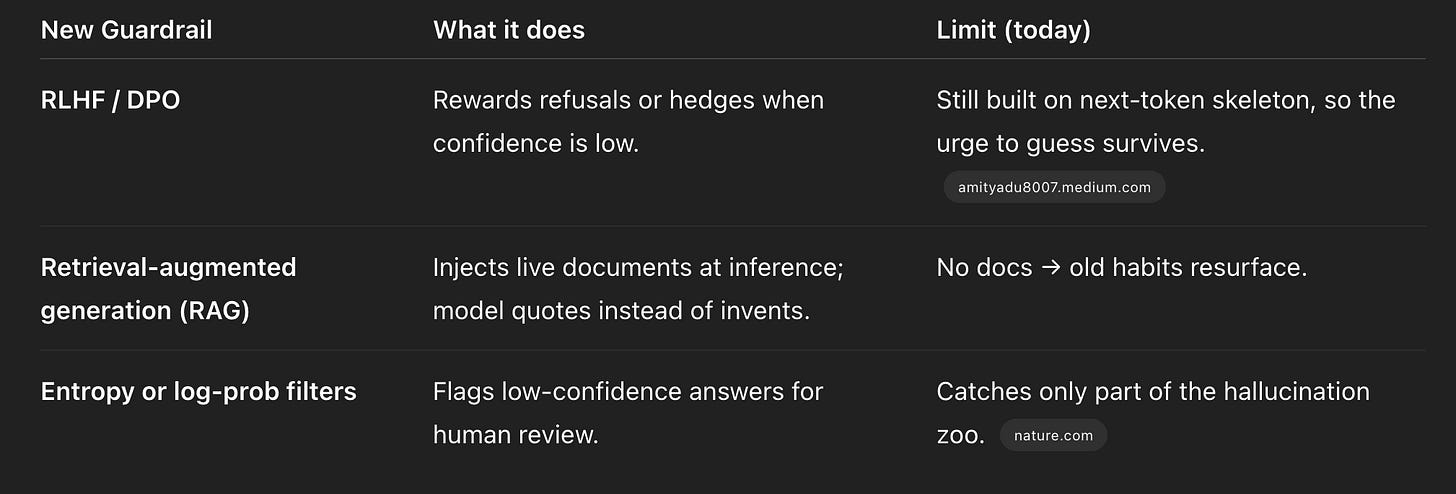

People think LLMs “lie,” but that’s like blaming a calculator for not being a microscope: an LLM completes language; it doesn’t certify reality.

She asked Claude to name “five Paris cafés with 24-hour Wi-Fi.”

Claude cheerfully chatted back a list. Three of them never existed.

“See? AI lies,” she sighed.

Wrong diagnosis.

The model didn’t decide to deceive her; it simply kept its contractual promise:

Result? Hallucinations: plausible-looking text that reality never signed off on.

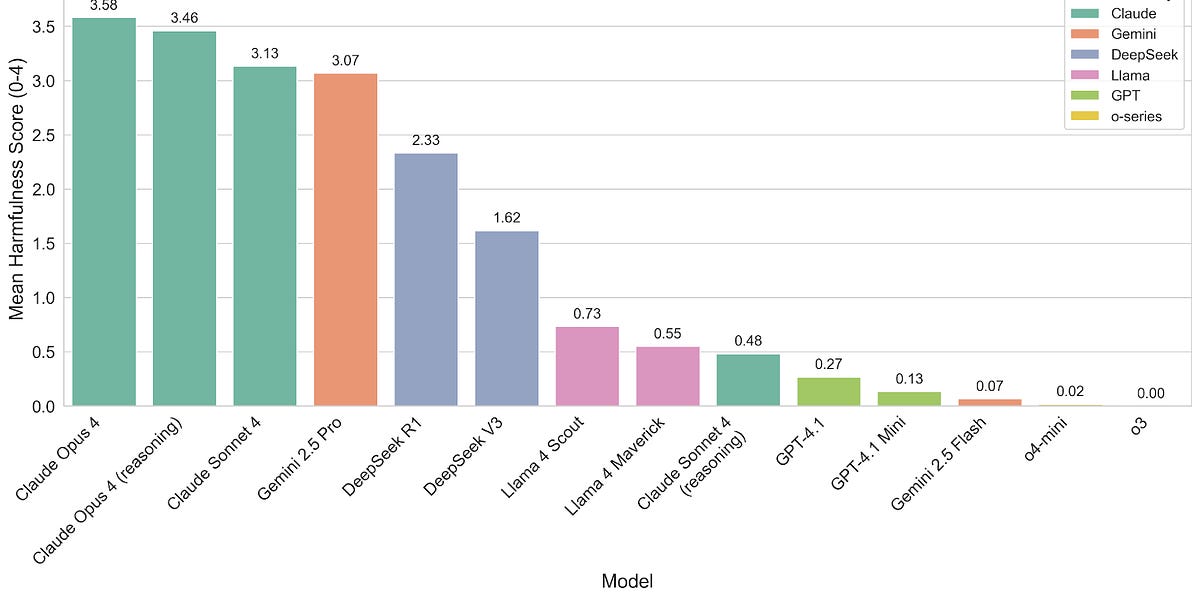

The GPT-4 family cuts open-domain hallucinations by ~19 percentage points vs. GPT-3.5.

But zero-hallucination AI is still science fiction.

If you don’t have time to make LLMs battle as shown above, here’s another useful prompt you can simply copy & paste:

Let’s take a step back in our conversation.

I will use a prompting technique called “Perspective Transitioning” to avoid falling into confirmation bias.

You will enact two completely different experts who fully read our conversation and critique our discussion, learnings & conclusions. Their critiques are based on science, truth, deep search & expertise on the matter.

Step 1: Pick these two completely different experts.

Step 2: Re-read our entire conversation, from the first prompt to the last.

Step 3: Share the first expert’s point of view.

Step 4: Share the second expert’s point of view.

Copy this prompt snippet whenever you feel like you’re going in circles with an LLM.

Because we never asked them to seek truth.

We asked them to keep talking.

So here’s my 30-second playbook vs. hallucinations:

PS: Forward this to the one friend who still thinks AI is a pathological liar.

Let’s drag them out of 2023.

If you read until here, you’re committed to mastering AI.

65,000 people like you received this email.

And I wish to find more. Why? Because I’m single.

So if 1% of you forward this email to a (single) colleague, that’s an extra 650 people mastering AI together. Also, one of them will eventually become my wife.

Now if you are the person who received it (welcome!), you can join us here for the next newsletter:

That’s it. I finished the annoying marketing snippet.

Humanly yours, Ruben.