BioMedical Engineering OnLine volume 24, Article number: 64 (2025)

Neurological disorders, ranging from common conditions such as Alzheimer’s disease, a progressive neurodegenerative disorder and the most common cause of dementia worldwide, to rare disorders such as Angelman syndrome, impose a significant global health burden. Altered facial expression is a common symptom across these disorders and may serve as a diagnostic indicator. Deep learning algorithms, especially convolutional neural networks (CNNs), have shown promise in detecting these changes in facial expression, aiding the diagnosis and monitoring of neurological conditions.

This systematic review and meta-analysis aimed to evaluate the performance of deep learning algorithms in detecting facial expression changes for diagnosing neurological disorders.

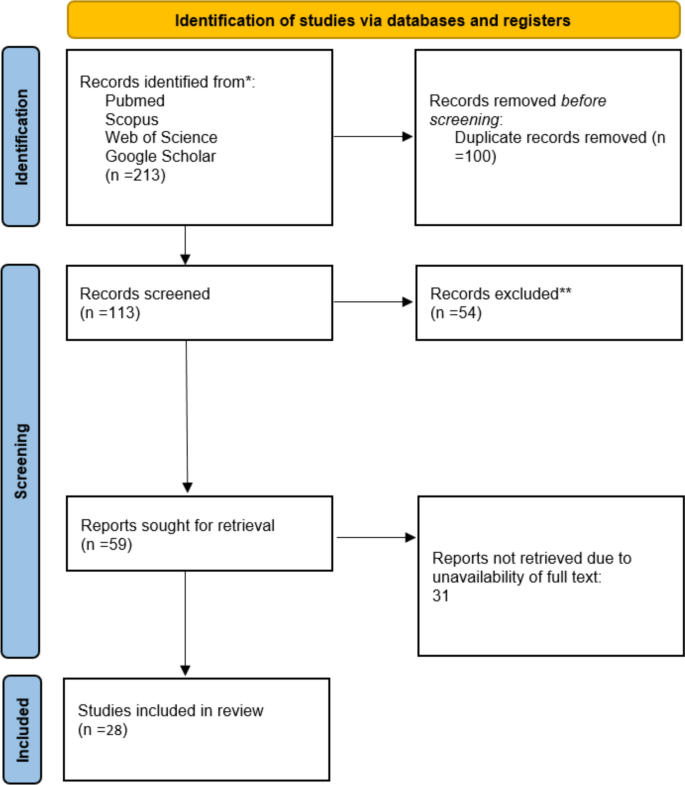

Following the PRISMA 2020 guidelines, we systematically searched PubMed, Scopus, and Web of Science for studies published up to August 2024. Data from 28 studies were extracted, and study quality was assessed using the JBI checklist. A meta-analysis was performed to calculate pooled accuracy estimates. Subgroup analyses were conducted by neurological disorder, and heterogeneity was evaluated using the I² statistic.

The meta-analysis included 24 studies published from 2019 to 2024, covering neurological conditions such as dementia, Bell’s palsy, ALS, and Parkinson’s disease. The overall pooled accuracy was 89.25% (95% CI 88.75–89.73%). Accuracy was high for dementia (99%) and Bell’s palsy (93.7%) but lower for conditions such as ALS and stroke (73.2%).

Deep learning models, particularly CNNs, show strong potential in detecting facial expression changes for neurological disorders. However, further work is needed to standardize data sets and improve model robustness for motor-related conditions.

Neurological diseases are a spectrum of conditions that can involve any part of the nervous system, including the brain, spinal cord, and peripheral nervous system (PNS) [1]. Structural, chemical, and other abnormalities of nervous tissue can underlie these disorders. They fall into various categories, ranging from common disorders such as Alzheimer’s disease to rare ones such as Angelman syndrome [2, 3].

From an epidemiological perspective, neurological disorders impose a substantial burden on global health. According to the Global Burden of Disease (GBD) 2021 study, neurological disorders and other conditions affecting the nervous system were the leading cause of disability-adjusted life years (DALYs), affecting 3.40 billion individuals [4]. Another study, by Yi Huang et al., reported that 805.17 million individuals had neurological disorders in 2019 [5]. Some disorders contribute disproportionately to this burden: six neurological conditions, namely Alzheimer’s disease (AD) and other dementias, Parkinson’s disease (PD), epilepsy, multiple sclerosis (MS), motor neuron disease (MND), and headache disorders, ranked 9th among the leading causes of DALYs [6]. Previous studies show a marked increase in the global and regional prevalence of neurological disease, which may reflect several factors, including increased life expectancy, improved socioeconomic status, and advances in screening and diagnostic devices [5, 6].

Symptoms of neurological disease vary with the underlying cause, but some signs are shared across several disorders. Altered facial expression is one such sign, and it can arise through several mechanisms, such as impaired neuromuscular function [7]. In Parkinson's disease, for example, muscle rigidity produces a characteristic sign known as masked face (hypomimia) [8]. In stroke, ischemia in specific brain regions, including the motor cortex, disrupts control of the facial muscles and alters facial expression [9]. Similarly, in autism spectrum disorders, atypical neural processing impairs the production of facial expressions [10]. Likewise, recognizing emotion from facial expressions becomes more difficult in the presence of neurodegenerative disease [11]. Taken together, these observations suggest that changes in facial expression may serve as an important indicator for a range of neurological diseases, and that analyzing facial expressions and improving facial emotion recognition tools may help diagnose and monitor these disorders [12,13,14].

As with other diseases, effective management is a central goal of health care for neurological disorders, but the complexity of these disorders makes them difficult to manage [15]. Contributing factors include the nature of the disorder itself, diagnostic delays [16], comorbidities [17], and the need for multidisciplinary care [18]. These challenges call for methods that support screening, prediction, and early diagnosis of neurological diseases, ideally before symptoms become apparent, since earlier detection can improve prognosis and treatment outcomes. Deep learning, which has been widely adopted in recent years, is one promising way to meet this need [19,20,21,22,23]. It may play a significant role in the study of neurological disorders, especially in neuroimaging. One of the most common models for processing medical images such as MRI scans is the convolutional neural network (CNN) [20, 24, 25].

As noted above, altered facial expression is a common symptom of various neurological disorders, and many studies have shown the effectiveness of deep learning and machine learning methods for identifying neurological disorders and assessing their prognosis. Afsaneh Davodabadi et al. indicated that artificial intelligence may be useful for diagnosing Alzheimer's disease [11]. In another study, Yang Wang et al. demonstrated that a new DL-based CT method, Cranial Automatic Planbox Imaging Towards AmeLiorating neuroscience (CAPITAL–CT), can simplify the work of radiologists and assist in neuroscience research and stroke management [26]. Gozde Yolcu et al. proposed a system of two CNN stages for image analysis and classification [20].

We therefore conducted a systematic review and meta-analysis of deep learning applications for detecting facial expression changes, with the aim of diagnosing and monitoring neurological diseases.

This systematic review follows the principles of the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA 2020) statement [1]. The study protocol has been registered on the Open Science Framework (OSF) (registration doi:).

We collected original articles in this field by searching the PubMed, Google Scholar, Web of Science, and Scopus databases for English-language literature published up to 31 August 2024. The search used the following keywords (Table 1): "deep learning", "convolutional neural network", "CNN", "DBN", "facial expression", "FER", "Facial recognition", "neurological disorder", "neurological disease", "Alzheimer's Disease", "Acute Spinal Cord Injury", "Amyotrophic Lateral Sclerosis", "ALS", "Ataxia", "brain tumor", "Bell's Palsy", "Cerebral Aneurysm", "Epilepsy", "seizure", "Headache", "Head Injury", "Hydrocephalus", "Meningitis", "Multiple Sclerosis", "Muscular Dystrophy", "neurocutaneous syndrome", "Parkinson's Disease", "stroke", "brain attack", "cluster headache", "tension headache", "migraine headache", "migraine", "Encephalitis", "Myasthenia Gravis", "Lumbar Disk Disease", "Herniated Disk", and "Guillain–Barre Syndrome". The reference lists of included articles and related studies were also reviewed manually for further relevant studies.
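The keyword list does not spell out the Boolean logic; the actual search strings are in Table 1. As a hedged illustration only, assuming the method, facial-expression, and disorder terms act as three concept groups joined by AND, with synonyms joined by OR, a query could be assembled as below. The term groups are abbreviated and the helper names are hypothetical.

```python
def or_group(terms):
    """Join synonyms with OR inside parentheses, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(*groups):
    """Require at least one term from every concept group (AND across groups)."""
    return " AND ".join(or_group(g) for g in groups)


# Abbreviated, illustrative term groups (assumed grouping; full lists in Table 1).
method_terms = ["deep learning", "convolutional neural network", "CNN", "DBN"]
face_terms = ["facial expression", "FER", "facial recognition"]
disorder_terms = ["neurological disorder", "Parkinson's Disease", "stroke", "Bell's Palsy"]

query = build_query(method_terms, face_terms, disorder_terms)
print(query)
```

Each database has its own field tags and phrase syntax, so a string like this would still need adapting per database.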

Web of Science, Scopus, and PubMed were searched up to August 2024. Studies were screened by title and abstract using the Rayyan intelligent tool for systematic reviews. Data were extracted based on predefined variables and are presented in Table 2. Duplicate records were removed using EndNote version 21.

After excluding animal-based studies, non-English articles, conference abstracts, reviews, posters, case reports, case series, and studies not related to biomarker imaging, the remaining studies were included in the review if they met the following criteria: they were human-based studies, original peer-reviewed articles, and utilized or were relevant to imaging biomarkers.

Using the Rayyan intelligent tool for systematic reviews, two reviewers (NW, SHY) independently screened titles and abstracts in a blinded manner to identify eligible papers. Full texts of records labeled “Yes” or “Maybe” were then retrieved to assess eligibility. Conflicts were resolved through discussion with a third reviewer (MAA) until agreement was reached. Two authors independently assessed the quality and risk of bias of each included study using JBI’s critical appraisal checklist.
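The dual-reviewer screening step can be sketched as a small reconciliation routine. This is an illustrative assumption rather than Rayyan's behavior: every disagreement between the two blinded reviewers is treated as a conflict and passed to a third reviewer, and records ending as "yes" or "maybe" advance to full-text review. The function names and labels are hypothetical.

```python
def reconcile(decisions_a, decisions_b, third_reviewer):
    """Merge two blinded reviewers' title/abstract labels ("yes", "maybe", "no").

    Any disagreement is treated as a conflict and settled by third_reviewer,
    a callable (record_id, label_a, label_b) -> final label.
    """
    final, conflicts = {}, []
    for record_id, a in decisions_a.items():
        b = decisions_b[record_id]
        if a == b:
            final[record_id] = a
        else:
            conflicts.append(record_id)
            final[record_id] = third_reviewer(record_id, a, b)
    # Records ending as "yes" or "maybe" advance to full-text assessment.
    full_text = sorted(r for r, label in final.items() if label in ("yes", "maybe"))
    return final, conflicts, full_text


def arbiter(record_id, label_a, label_b):
    """Hypothetical third reviewer who keeps disputed records for full-text review."""
    return "maybe"


reviewer_1 = {"r1": "yes", "r2": "no", "r3": "maybe"}
reviewer_2 = {"r1": "yes", "r2": "yes", "r3": "no"}
final, conflicts, full_text = reconcile(reviewer_1, reviewer_2, arbiter)
print(conflicts, full_text)  # ['r2', 'r3'] ['r1', 'r2', 'r3']
```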

A meta-analysis was performed to calculate pooled accuracy estimates for deep learning algorithms that use facial expression recognition to detect neurological disorders. Heterogeneity among studies was assessed using the chi-square (Cochran’s Q) test and quantified by the I² statistic, which indicates the proportion of variability due to between-study heterogeneity rather than chance. I² was computed as 100% × (Q − df)/Q. Study weights were determined using the inverse-variance method, and a random-effects model was used to combine the studies, allowing for between-study heterogeneity. Statistical significance was set at P < 0.05. Data available only in figures were digitized using WebPlotDigitizer (Automeris LLC, Frisco, TX, USA).
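The pooling steps named above (inverse-variance weights, Cochran's Q, I², random effects) can be made concrete with a short sketch. The text does not state the between-study variance estimator or the scale on which accuracies were pooled, so this assumes the DerSimonian-Laird estimator and a logit transformation, both common choices for proportions; it is not necessarily the authors' exact procedure. Accuracies must lie strictly between 0 and 1 (a continuity correction would otherwise be needed), and the input values are hypothetical.

```python
import math


def expit(t):
    """Inverse of the logit transform."""
    return 1 / (1 + math.exp(-t))


def random_effects_pool(accuracies, sample_sizes, z=1.96):
    """DerSimonian-Laird random-effects pooling of accuracies on the logit scale."""
    # Logit-transformed accuracies and their approximate variances 1 / (n p (1 - p)).
    y = [math.log(p / (1 - p)) for p in accuracies]
    v = [1 / (n * p * (1 - p)) for p, n in zip(accuracies, sample_sizes)]

    # Inverse-variance (fixed-effect) estimate, used for Cochran's Q.
    w = [1 / vi for vi in v]
    y_fixed = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    q = sum(wi * (yi - y_fixed) ** 2 for wi, yi in zip(w, y))
    df = len(y) - 1

    # Between-study variance tau^2, truncated at zero.
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)

    # Random-effects weights add tau^2 to each within-study variance.
    w_re = [1 / (vi + tau2) for vi in v]
    y_re = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))

    # I^2 = 100% x (Q - df) / Q, truncated at zero.
    i2 = max(0.0, 100 * (q - df) / q) if q > 0 else 0.0
    return {
        "pooled": expit(y_re),
        "ci": (expit(y_re - z * se), expit(y_re + z * se)),
        "Q": q,
        "df": df,
        "tau2": tau2,
        "I2": i2,
    }


# Hypothetical accuracies and test-set sizes from four studies.
result = random_effects_pool([0.95, 0.88, 0.73, 0.90], [120, 80, 60, 200])
print(round(result["pooled"], 3), result["ci"], round(result["I2"], 1))
```

Pooling on the logit scale keeps the back-transformed confidence interval inside 0 to 1, which a raw-proportion analysis does not guarantee for accuracies near 100%.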

Regression models and imputation techniques were used to handle missing accuracy values systematically. Regression models predicted missing values from relationships observed in the available data, while imputation methods (e.g., multiple imputation) replaced missing values with plausible estimates that account for the uncertainty inherent in imputation. These approaches were chosen to reduce bias and improve the accuracy of the pooled estimates. They also preserve statistical power by incorporating all available data rather than excluding studies with missing values, which could introduce bias or limit the generalizability of the findings. Future analyses could also explore maximum likelihood estimation and Bayesian techniques for handling missing data.
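A minimal sketch of regression-based multiple imputation, assuming a fully observed, hypothetical study-level covariate `x` that predicts accuracy. Each missing value is filled with the regression prediction plus random residual noise, this is repeated `m` times, and the per-dataset estimates are combined with Rubin's rules. For brevity the sketch does not redraw the regression coefficients between imputations, so it somewhat understates uncertainty compared with proper multiple imputation; the "mean accuracy" analysis is likewise a toy.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x; returns (a, b, residual SD)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    residuals = [y - (a + b * x) for x, y in zip(xs, ys)]
    sd = (sum(r * r for r in residuals) / (len(xs) - 2)) ** 0.5
    return a, b, sd


def multiply_impute(x, y, m=20, seed=0):
    """Return m completed copies of y, filling None with prediction + residual noise."""
    rng = random.Random(seed)
    observed = [(xi, yi) for xi, yi in zip(x, y) if yi is not None]
    a, b, sd = fit_line([o[0] for o in observed], [o[1] for o in observed])
    return [
        [yi if yi is not None else a + b * xi + rng.gauss(0, sd) for xi, yi in zip(x, y)]
        for _ in range(m)
    ]


def rubin_combine(estimates, variances):
    """Rubin's rules: average the estimates; total variance = within + (1 + 1/m) * between."""
    m = len(estimates)
    within = statistics.fmean(variances)
    between = statistics.variance(estimates)
    return statistics.fmean(estimates), within + (1 + 1 / m) * between


# Hypothetical data: a fully observed covariate and accuracies with two gaps.
x = [0.85, 0.90, 0.70, 0.95, 0.80]
y = [0.86, 0.91, None, 0.94, None]
completed = multiply_impute(x, y, m=20)
means = [statistics.fmean(d) for d in completed]                  # toy analysis per dataset
variances = [statistics.variance(d) / len(d) for d in completed]  # squared SE of each mean
pooled, total_var = rubin_combine(means, variances)
print(round(pooled, 3), round(total_var, 5))
```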

This systematic review and meta-analysis included 28 studies published between 2019 and 2024, investigating the application of deep learning-based facial expression analysis for detecting neurological disorders (Fig. 1). The studies were conducted across multiple countries, including Australia, China, the United Kingdom, Saudi Arabia, the United States, and Canada, with test sample sizes ranging from 26 to 437 images or video recordings (Table 2).

The neurological conditions assessed in the included studies encompassed dementia, Bell’s palsy, stroke, amyotrophic lateral sclerosis (ALS), functional neurological disorders (FND), and motor impairments. Demographic data were inconsistently reported; in studies that reported age, mean participant ages ranged from 63.2 to 82.5 years. Some studies stratified participants by neurological condition; for example, Andrea Bandini et al. reported mean ages of 63.2 years for healthy controls, 61.5 years for ALS, and 64.7 years for post-stroke participants. However, several studies did not explicitly report age, sex, or training sample characteristics, which may limit the generalizability of the findings.

The studies employed a range of deep learning methodologies, including landmark-based models, convolutional neural networks (CNNs), facial action unit (AU) analysis, and hybrid approaches. Facial components analyzed included eyebrow and mouth regions, full-face expressions, near-eye regions, and action unit-based facial dynamics.

The classification performance of the deep learning models varied widely across studies. Reported accuracies ranged from 1.8% to 94.8%, and some studies reported alternative performance metrics, such as Pearson correlation coefficients, instead of conventional accuracy.

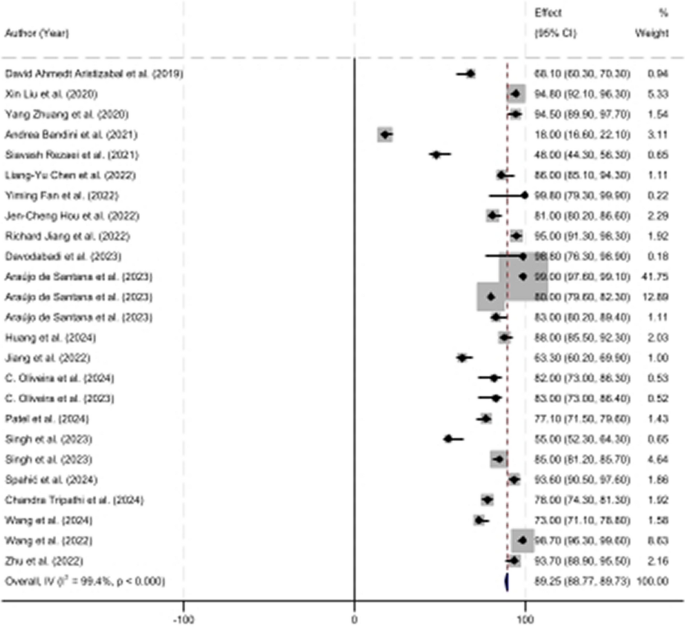

A random-effects meta-analysis was conducted to assess the pooled accuracy of deep learning models across all included studies. The overall estimated accuracy was 89.25% (95% CI 88.75–89.73%), indicating a high predictive capability of deep learning-based facial expression analysis for neurological disorder detection (Fig. 2).

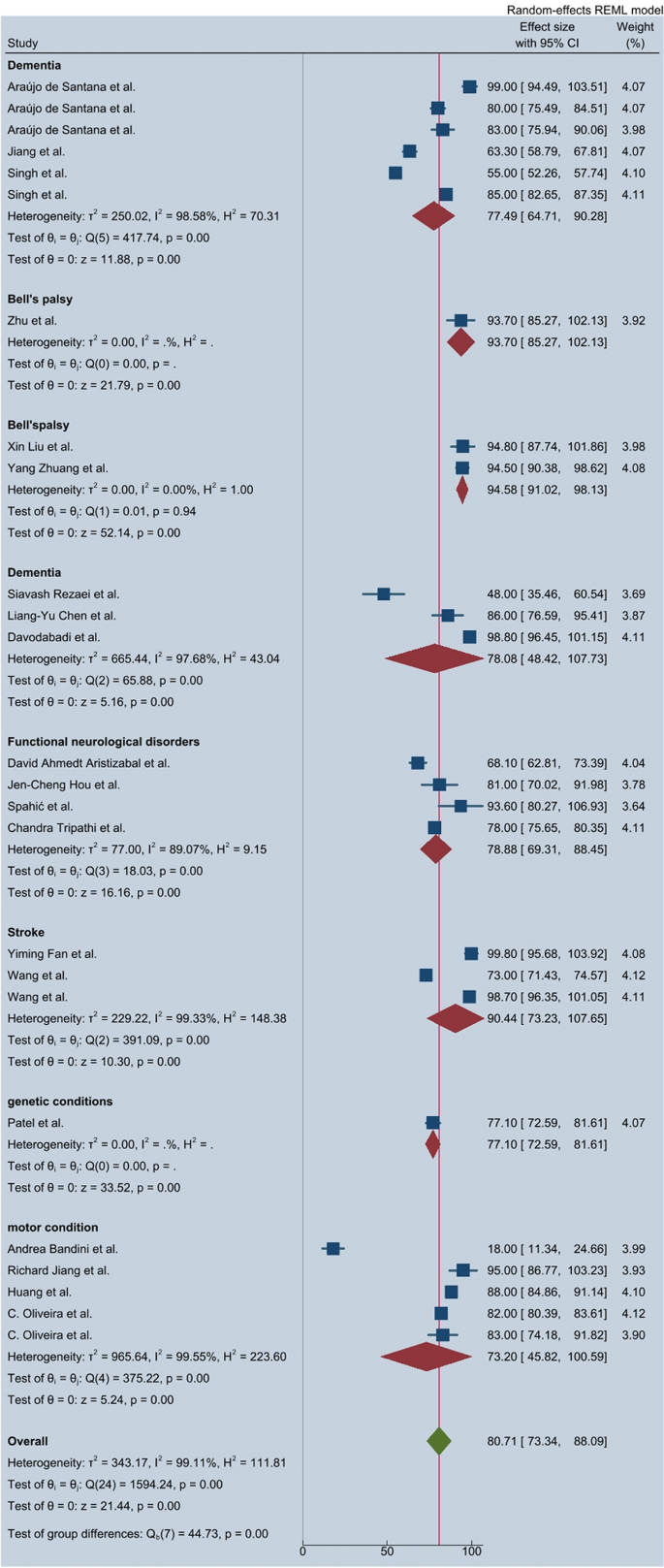

A subgroup analysis by neurological condition revealed significant variation in classification performance (Fig. 3), with high pooled accuracy for dementia (99%) and Bell’s palsy (93.7%) and lower accuracy for ALS and stroke (73.2%).

These results indicate that deep learning algorithms exhibit high classification performance for dementia and Bell’s palsy, while performance for motor-related conditions remains comparatively lower.
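Whether subgroup estimates genuinely differ can be checked with a between-subgroup Q statistic, compared against a chi-square distribution with (number of subgroups − 1) degrees of freedom. The sketch assumes each subgroup has already been pooled (for example on the logit scale) with a known standard error; the subgroup values are hypothetical, not the review's estimates. For three subgroups, Q above 5.991 (the chi-square 95th percentile with 2 degrees of freedom) indicates a difference at P < 0.05.

```python
import math


def logit(p):
    return math.log(p / (1 - p))


def subgroup_q_between(subgroups):
    """Between-subgroup heterogeneity test.

    subgroups maps a label to (pooled_estimate, standard_error), e.g. logit
    accuracies pooled within each neurological-condition subgroup.
    Returns (Q_between, degrees of freedom = number of subgroups - 1).
    """
    weights = {g: 1 / se ** 2 for g, (_, se) in subgroups.items()}
    overall = sum(weights[g] * est for g, (est, _) in subgroups.items()) / sum(weights.values())
    q = sum(weights[g] * (est - overall) ** 2 for g, (est, _) in subgroups.items())
    return q, len(subgroups) - 1


# Hypothetical subgroup estimates (logit scale) and standard errors.
groups = {
    "group A": (logit(0.95), 0.20),
    "group B": (logit(0.90), 0.25),
    "group C": (logit(0.75), 0.30),
}
q, df = subgroup_q_between(groups)
print(round(q, 2), df, q > 5.991)  # 5.991: chi-square 95th percentile, df = 2
```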

The heterogeneity analysis indicated substantial variability in the reported effect sizes across studies, with an I² statistic of 99.11%, confirming high statistical heterogeneity. The Q test (Q = 1594.24, p < 0.0001) further supported the presence of significant variation among included studies, suggesting that differences in algorithm types, data set sizes, and neurological disorder classifications contributed to the observed heterogeneity.

A funnel plot analysis (Fig. 4) demonstrated that most studies fell within the pseudo-95% confidence interval, indicating a low likelihood of publication bias. However, a minor asymmetry was observed, suggesting the potential influence of selective reporting.
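Funnel plot asymmetry is often quantified with Egger's regression test, which regresses each study's standardized effect (effect/SE) on its precision (1/SE); an intercept far from zero suggests small-study effects. The text does not state that this test was run, so the sketch below is offered only as a possible complement to visual inspection, with hypothetical inputs. Under heterogeneity as high as that reported here, its result should be interpreted cautiously.

```python
import math


def egger_test(effects, std_errors):
    """Egger's regression test for funnel-plot asymmetry.

    Regresses the standardized effect (effect / SE) on precision (1 / SE).
    Returns (intercept, t statistic for the intercept, degrees of freedom);
    compare t with a t distribution on k - 2 degrees of freedom.
    """
    z = [e / s for e, s in zip(effects, std_errors)]
    prec = [1 / s for s in std_errors]
    k = len(z)
    mx, my = sum(prec) / k, sum(z) / k
    sxx = sum((x - mx) ** 2 for x in prec)
    slope = sum((x - mx) * (y - my) for x, y in zip(prec, z)) / sxx
    intercept = my - slope * mx
    residuals = [y - (intercept + slope * x) for x, y in zip(prec, z)]
    s2 = sum(r * r for r in residuals) / (k - 2)  # residual variance
    se_intercept = math.sqrt(s2 * (1 / k + mx ** 2 / sxx))
    return intercept, intercept / se_intercept, k - 2


# Hypothetical study effects (e.g., logit accuracies) and standard errors.
b0, t, df = egger_test([0.61, 0.68, 0.815, 0.90, 0.975], [0.1, 0.2, 0.3, 0.4, 0.5])
print(round(b0, 3), round(t, 2), df)
```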

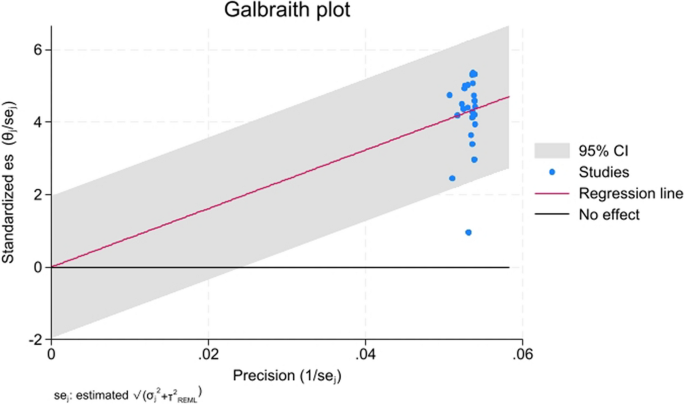

The Galbraith plot (Fig. 5) showed that, although most studies aligned along the regression line, a subset behaved as outliers, suggesting inconsistencies in reported classification accuracies.
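A Galbraith (radial) plot places each study at x = 1/SE and y = effect/SE; under a common effect θ the points scatter around the line y = θx, and studies whose standardized residual (y − θx) falls outside ±2 are conventionally flagged as outliers. A minimal sketch, assuming the inverse-variance (fixed-effect) θ for the reference line and hypothetical inputs:

```python
def galbraith_points(effects, std_errors, band=2.0):
    """Galbraith (radial) plot coordinates with outlier flags.

    Each study maps to x = 1/SE and y = effect/SE. The reference line y = theta * x
    uses the inverse-variance (fixed-effect) estimate theta; a study is flagged
    when its standardized residual y - theta * x lies outside +/- band.
    """
    weights = [1 / s ** 2 for s in std_errors]
    theta = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    points = []
    for e, s in zip(effects, std_errors):
        x, y = 1 / s, e / s
        points.append((x, y, abs(y - theta * x) > band))
    return theta, points


# Hypothetical data: five consistent studies and one discrepant study.
theta, points = galbraith_points([1.0] * 5 + [3.0], [0.5] * 6)
print([flag for _, _, flag in points])  # [False, False, False, False, False, True]
```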

The Cochrane Risk of Bias Tool was used to assess the methodological quality of the included studies. While most studies showed low risk of bias in participant selection and outcome assessment, some showed unclear or high risk in domains such as allocation concealment and blinding. In addition, studies with small test samples or incomplete demographic reporting were classified as having a higher risk of bias, which may affect the generalizability of the findings.

This systematic review and meta-analysis evaluated the application of deep learning (DL) models, particularly convolutional neural networks (CNNs), in recognizing facial expression changes associated with various neurological disorders. The pooled accuracy of 89.25% across 28 studies underscores the potential of facial expression analysis as a diagnostic aid. However, significant variability in performance was observed depending on the type of neurological condition, the complexity of facial alterations, and the deep learning architecture used.

Studies investigating Parkinson’s disease (PD) consistently highlighted the utility of deep learning (DL) for detecting hypomimia and evaluating treatment response. Jiang et al. achieved over 95% accuracy with a model that distinguished expressions before and after deep brain stimulation (DBS), outperforming EEG-based methods [14]. Huang et al.’s MARNet model achieved 88% accuracy across PD stages by analyzing six core emotions, demonstrating robustness for staging [27]. Models combining facial data with voice biomarkers, such as the smartphone-based approach of Lim et al., achieved an AUROC of 0.90, suggesting that multimodal inputs enhance diagnostic precision [28]. Furthermore, Oliveira et al. raised classification performance from 62% to 83% by using conditional generative adversarial networks (CGANs) to generate synthetic data and test-time augmentation to stabilize the model [9, 29]. Collectively, while all models showed promise, those integrating multimodal data or data augmentation offered greater generalizability and resilience across PD symptom presentations.
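Test-time augmentation, one of the strategies credited with stabilizing Oliveira et al.'s model, averages a classifier's predictions over several transformed copies of the same input. The sketch uses a hypothetical toy classifier and a horizontal flip as the only augmentation; the augmentations actually used in that study are not specified here. Flips suit whole-face signs such as hypomimia but would be inappropriate where laterality matters, as in unilateral facial palsy.

```python
import math


def hflip(image):
    """Mirror an image stored as a list of pixel rows."""
    return [list(reversed(row)) for row in image]


def identity(image):
    return image


def tta_predict(model, image, augmentations):
    """Average class probabilities over augmented copies of a single input."""
    probs = [model(augment(image)) for augment in augmentations]
    return [sum(p[c] for p in probs) / len(probs) for c in range(len(probs[0]))]


def toy_model(image):
    """Hypothetical classifier that is (undesirably) sensitive to left-right orientation."""
    left = sum(row[0] for row in image)
    right = sum(row[-1] for row in image)
    p = 1 / (1 + math.exp(-(left - right)))
    return [p, 1 - p]


image = [[1, 0], [1, 0]]
print(toy_model(image))                                  # biased toward class 0
print(tta_predict(toy_model, image, [identity, hflip]))  # averaged over orientations
```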

Stroke-related studies showed a wide range of performance depending on data set characteristics and model complexity. Fan et al.’s FER–PCVT model achieved near-perfect accuracy (99.81%) on a private data set but dropped to 88.2% and 89.4% on public data sets, suggesting overfitting to task-specific expressions [30]. Bandini et al. emphasized the importance of domain-specific fine-tuning: their model initially underperformed in post-stroke patients, but after calibration its normalized RMSE improved significantly [31]. In addition, Wang et al.’s CAPITAL–CT model provided rapid, low-dose diagnostic imaging with over 98% accuracy, using CNN segmentation for facial asymmetry detection, which highlights the promise of image-based DL for real-time stroke diagnosis [26, 32]. These findings suggest that although CNNs and transformer hybrids can effectively classify stroke-related facial impairments, calibration to the clinical context and diverse data sets are essential for optimal performance.
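Normalized RMSE expresses landmark-localization error relative to face size. The normalizing distance is not given here; the sketch assumes the metric refers to facial landmark error normalized by the inter-ocular distance of the reference shape, a common convention, and uses hypothetical landmark indices. Normalization makes errors comparable across faces of different sizes and image resolutions.

```python
import math


def normalized_rmse(predicted, reference, left_eye, right_eye):
    """Landmark RMSE divided by the reference inter-ocular distance.

    predicted, reference: equal-length lists of (x, y) landmarks in the same order.
    left_eye, right_eye: indices of the reference landmarks used for normalization
    (an assumed convention; other studies normalize by face size instead).
    """
    squared = [(px - rx) ** 2 + (py - ry) ** 2
               for (px, py), (rx, ry) in zip(predicted, reference)]
    rmse = math.sqrt(sum(squared) / len(squared))
    return rmse / math.dist(reference[left_eye], reference[right_eye])


# Hypothetical three-landmark face; the first landmark is off by one pixel.
reference = [(0.0, 0.0), (10.0, 0.0), (5.0, 5.0)]
predicted = [(1.0, 0.0), (10.0, 0.0), (5.0, 5.0)]
print(normalized_rmse(predicted, reference, left_eye=0, right_eye=1))
```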

In disorders such as facial nerve palsy, muscular dystrophy, and general facial asymmetry, performance varied with model architecture and the visibility of facial cues. Zhuang et al. achieved up to 89.7% accuracy in detecting facial weakness using a landmark-based model combined with histogram of oriented gradients (HOG) features and support vector machine (SVM) classifiers [33]. Tripathi et al. introduced a metric that correlated with manual Facial Action Coding System (FACS) scoring (R² = 0.78), validating its use for identifying asymmetry in stroke or ALS [34]. Zhu et al. improved diagnostic performance by combining surface electromyography (sEMG) with multiview CNNs, an approach that also supported treatment evaluation in Bell’s palsy [35]. These results highlight the value of combining facial features with biosignals or task-specific cues to improve DL performance when neurological signs are less overt.
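To make landmark-based asymmetry measurement concrete, here is a sketch of one simple asymmetry index: mirror each right-side landmark across the facial midline and measure its distance to the corresponding left-side landmark. It is a hypothetical illustration, not Tripathi et al.'s metric or Zhuang et al.'s HOG and SVM pipeline, and it assumes an upright face with a known vertical midline (image y increases downward).

```python
import math


def asymmetry_index(landmarks, pairs, midline_x):
    """Mean distance between left landmarks and their mirrored right counterparts.

    landmarks: list of (x, y); pairs: (left_index, right_index) tuples;
    midline_x: x-coordinate of a vertical facial midline (assumes an upright face).
    Returns 0.0 for a perfectly symmetric face; larger values mean more asymmetry.
    """
    total = 0.0
    for left_i, right_i in pairs:
        rx, ry = landmarks[right_i]
        mirrored = (2 * midline_x - rx, ry)  # reflect the right point across the midline
        total += math.dist(landmarks[left_i], mirrored)
    return total / len(pairs)


# Hypothetical landmarks: eye corners (0, 1) and mouth corners (2, 3).
pairs = [(0, 1), (2, 3)]
symmetric = [(4, 0), (6, 0), (3, 5), (7, 5)]
drooping = [(4, 0), (6, 0), (3, 5), (7, 6)]  # right mouth corner sits one pixel lower
print(asymmetry_index(symmetric, pairs, 5), asymmetry_index(drooping, pairs, 5))  # 0.0 0.5
```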

Research on epileptic seizures using facial expression analysis is emerging but promising. Ahmedt-Aristizabal et al. showed that region-based models outperformed landmark-based approaches (79.6% vs. 68.1%) in differentiating epileptic seizures from functional neurological disorder (FND), owing to better recognition of complex motion patterns [11]. Hou et al. supported this with a dual-model system combining adaptive graph convolutional and temporal convolutional networks (AGCN + TCN), achieving ~80% accuracy in detecting dystonia and emotional expressions during hyperkinetic seizures [36]. These findings suggest that video-based temporal models that capture subtle dynamic changes are particularly suited to seizure-related applications, although validation on broader data sets is still needed.
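Temporal convolutional networks are built from dilated causal convolutions, which let a model summarize how per-frame facial features evolve without looking ahead in time. The sketch shows that building block on a hypothetical one-dimensional per-frame signal; the graph-convolution (AGCN) part of Hou et al.'s system and all learned weights are omitted. Stacking layers with dilations 1, 2, 4, ... widens the receptive field exponentially with depth.

```python
def causal_conv1d(signal, kernel, dilation=1):
    """Dilated causal 1-D convolution, the basic building block of a TCN.

    Output at frame t combines inputs at t, t - d, t - 2d, ... (zero-padded on the
    left), so no future frames are used. kernel[0] weights the current frame.
    """
    out = []
    for t in range(len(signal)):
        acc = 0.0
        for j, weight in enumerate(kernel):
            idx = t - j * dilation
            if idx >= 0:
                acc += weight * signal[idx]
        out.append(acc)
    return out


def receptive_field(kernel_size, dilations):
    """Frames visible to the last output of a stack of dilated causal layers."""
    return 1 + (kernel_size - 1) * sum(dilations)


# Hypothetical per-frame mouth-opening signal; kernel [1, -1] gives frame-to-frame change.
mouth_opening = [0.0, 1.0, 3.0, 6.0]
print(causal_conv1d(mouth_opening, [1, -1]))  # [0.0, 1.0, 2.0, 3.0]
print(receptive_field(3, [1, 2, 4]))          # 15
```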

Dementia-related studies reinforced the diagnostic and monitoring potential of emotion recognition. Chen et al. developed the Facial Expression Recognition System (FERS), which predicted behavioral and psychological symptoms of dementia (BPSDs) with a strong correlation (r = 0.834), especially for negative emotional states [37]. Jiang et al. used CNNs to differentiate cognitively impaired (CI) from unimpaired individuals with 90.1% accuracy in structured environments, and classification improved when eye-tracking data were added [14]. Rezaei et al. also addressed pain expression in dementia, showing that deep learning models could identify subtle facial cues of discomfort in non-verbal populations [31]. Overall, these studies demonstrate the effectiveness of integrating facial analytics into emotion-based monitoring systems for dementia care.

Facial expression recognition has shown considerable promise in Alzheimer’s disease (AD) as well as in other neurological and genetic conditions. In the context of AD, emotion classification and cognitive monitoring through facial cues were frequently explored. Davodabadi et al. compared support vector machines (SVM) and deep convolutional neural networks (DCNN), finding superior performance with the DCNN model (testing accuracy: 98.8%), particularly for classifying core emotions relevant to dementia progression [11]. Singh et al. extended this line of work by modeling AD-associated face recognition deficits using Siamese networks, demonstrating the resilience of this architecture to simulated neural degradation and drawing parallels with cognitive decline patterns in AD patients [38].
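Siamese networks pass two faces through the same encoder and compare the resulting embeddings. A common training objective for such networks is the contrastive loss, sketched below on precomputed embeddings; whether Singh et al. used this exact loss is not stated here, and the margin value is an assumption.

```python
import math


def contrastive_loss(emb_a, emb_b, same_identity, margin=1.0):
    """Contrastive loss for a Siamese pair of embeddings.

    Same-identity pairs are penalized by their squared distance; different-identity
    pairs are penalized only if they are closer than `margin`.
    """
    d = math.dist(emb_a, emb_b)
    if same_identity:
        return 0.5 * d ** 2
    return 0.5 * max(0.0, margin - d) ** 2


# Hypothetical embeddings from a shared encoder.
print(contrastive_loss([0.0, 0.0], [3.0, 4.0], same_identity=True))   # 12.5
print(contrastive_loss([0.0, 0.0], [3.0, 4.0], same_identity=False))  # 0.0
print(contrastive_loss([0.0, 0.0], [0.5, 0.0], same_identity=False))  # 0.125
```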

Chen et al.'s ensemble approach predicted behavioral and psychological symptoms of dementia (BPSDs) in patients with Alzheimer's disease by analyzing emotional variability, achieving a strong correlation with Neuropsychiatric Inventory scores (r = 0.834) [37]. Complementing this, Tripathi et al. developed a Consistent Response Measurement (CRM) framework that detects emotional engagement from facial key points during emotionally evocative stimuli, providing a low-cost, scalable method for early cognitive screening [34].

Beyond AD, deep learning was applied to detect facial markers of genetic and neurodevelopmental conditions. Patel et al. showed that facial expressions significantly affect diagnostic performance in syndromes such as Williams syndrome (WS) and Angelman syndrome: accuracy dropped when typical expressions (such as smiling in WS) were altered, underscoring the diagnostic weight of facial affect [39]. Similarly, Spahić et al. introduced the TRUEAID system, which used 4D ultrasound to analyze fetal neurobehavioral movements and achieved 93.8% accuracy in detecting early neurological disorders such as autism, cerebral palsy, and epilepsy [40].

These diverse applications suggest that facial expression analysis via DL is not only valuable in neurodegenerative diseases like AD, but also holds diagnostic and prognostic utility across developmental, genetic, and pediatric neurological conditions, especially when paired with multimodal data streams or expression-specific model training.

The application of multimodal cascade transformers in the context of facial expression recognition for neurological disorders holds great promise for enhancing diagnostic accuracy and robustness. In recent years, transformers have shown significant potential in handling sequential data, such as time-series information and multimodal inputs, making them highly suitable for complex tasks like analyzing facial expressions and other clinical data [41,42,43].

Multimodal cascade transformers are designed to process multiple types of data (e.g., facial expression videos, speech signals, and physiological data) in a cascading manner, allowing for better integration and contextual understanding. This approach could offer several advantages in detecting neurological disorders, where facial expressions alone may not provide sufficient information due to the subtlety of changes in certain conditions or the complexity of interpreting these expressions in isolation [44].

For example, a cascade structure allows the model to first process facial expressions using a dedicated transformer block, followed by the integration of complementary modalities, such as speech, physiological data (e.g., heart rate and skin conductance), and neuroimaging data in subsequent stages. Each modality can be processed separately by specialized networks, with their outputs then combined through a series of transformer layers to capture cross-modal interactions effectively. This combination could improve diagnostic performance in disorders like Parkinson's disease, where facial expressions (such as hypomimia) and speech patterns (e.g., hypophonia) are often closely linked [45].
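The cascade structure described above can be sketched with scaled dot-product cross-attention, in which facial-expression tokens query tokens from another modality and the attended context is added back to the facial stream before the next modality is fused. This is a deliberately stripped-down, hypothetical illustration: the learned query/key/value projections, multiple heads, layer normalization, and feed-forward layers of a real transformer are omitted, and the token values are made up.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention of query tokens over another modality's tokens."""
    d = len(queries[0])
    fused = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        fused.append([sum(w * v[j] for w, v in zip(weights, values))
                      for j in range(len(values[0]))])
    return fused


def cascade_stage(face_tokens, other_tokens):
    """One cascade step: add cross-modal context to the facial stream (residual)."""
    context = cross_attention(face_tokens, other_tokens, other_tokens)
    return [[f + c for f, c in zip(ft, ct)] for ft, ct in zip(face_tokens, context)]


# Hypothetical 2-D tokens: two video frames, two speech segments, one physiology window.
face = [[1.0, 0.0], [0.0, 1.0]]
speech = [[0.9, 0.1], [0.2, 0.8]]
physiology = [[0.5, 0.5]]

stage1 = cascade_stage(face, speech)        # face attends to speech
stage2 = cascade_stage(stage1, physiology)  # then to physiological signals
print(stage2)
```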

Moreover, cascade transformers can help capture the temporal dynamics of neurological disorders. For example, deep learning models could analyze how facial expressions evolve over time while accounting for other signs of neurological decline in video or audio data. Such multimodal processing could also enable more accurate tracking of disease progression and treatment outcomes.

By incorporating contextual information from multiple sources, multimodal cascade transformers could reduce the limitations posed by relying on a single modality, such as facial expression alone. This makes them well-suited for applications in clinical settings, where complex, multimodal data are often available. It could lead to more holistic, reliable, and personalized diagnostic tools for neurological disorders [46].

The use of multimodal cascade transformers represents an exciting frontier in the integration of deep learning techniques for neurological disorder diagnosis. By leveraging the synergy between different data modalities, these models could significantly improve the accuracy and robustness of facial expression recognition systems, enabling better monitoring, diagnosis, and treatment planning for neurological patients [47].

Several prior reviews have addressed deep learning applications in neurology, yet few have focused specifically on facial expression recognition (FER) across a broad spectrum of neurological disorders. Valliani et al. offered a general overview of deep learning in neurology, highlighting use cases such as neuroimaging and EEG analysis but did not delve into facial dynamics or expression-based biomarkers [48]. Similarly, Gautam and Sharma conducted a meta-analysis on deep learning in neurological disorder detection, yet it centered predominantly on imaging and lacked an analysis of behavioral markers like facial expression [49].

Yolcu et al. presented a CNN-based FER system for monitoring neurological disorders but limited their focus to architecture design without performing a meta-analysis or comparing performance across conditions [12]. More recently, Alzubaidi et al. and Sajjad et al. compiled comprehensive reviews on facial expression recognition techniques, data sets, and CNN variants, but neither study explicitly focused on neurological disorder populations or provided disease-specific accuracy benchmarks [50, 51].

What distinguishes the current review is its systematic integration of 28 studies involving FER applications across both common (e.g., Alzheimer’s disease and Parkinson’s disease) and rare (e.g., Angelman syndrome and Williams syndrome) neurological conditions. This study also provides:

Moreover, the current review uniquely examines the clinical translational value of FER-based AI tools, particularly their utility in early detection, remote monitoring, and multidisciplinary neurological care. In doing so, it addresses the growing demand for non-invasive, low-cost diagnostic adjuncts—a focus not yet emphasized in most prior literature.

The clinical implications of using deep learning algorithms, specifically convolutional neural networks (CNNs) and multimodal models such as cascade transformers, for facial expression recognition in detecting neurological disorders are potentially far-reaching. These models could transform diagnostic practice by offering more efficient, accurate, and non-invasive methods for assessing neurological health [52].

Neurological disorders often present with subtle symptoms that may not be immediately evident in traditional clinical assessments. Deep learning-based facial expression recognition systems can detect early signs of conditions such as Parkinson's disease, Alzheimer's disease, and stroke by identifying minute facial expression changes that may go unnoticed by clinicians. The ability to diagnose these disorders earlier can lead to earlier interventions, which are crucial for improving patient outcomes, slowing disease progression, and enhancing quality of life. For example, the recognition of hypomimia (masked face) in Parkinson’s disease could aid in diagnosing the condition before more pronounced motor symptoms appear [50].

Traditional diagnostic techniques for neurological disorders, such as neuroimaging or extensive motor assessments, can be invasive, costly, and time-consuming. Facial expression recognition offers a non-invasive alternative that can serve as a complementary diagnostic tool. Using video recordings or real-time monitoring, deep learning systems can provide continuous, low-cost monitoring of patients. This is particularly useful for tracking disease progression in conditions such as dementia or ALS, where regular assessment is needed to adjust treatment plans and manage symptoms effectively [51].

In clinical settings, deep learning models could be integrated into decision-support systems that assist healthcare providers by analyzing facial expressions in real-time during consultations. For instance, a deep learning algorithm could analyze a patient’s facial expressions during a routine check-up and provide insights into potential neurological conditions, allowing for more timely interventions. This integration could reduce diagnostic delays, especially in settings with limited access to specialist care, improving patient outcomes through earlier and more accurate assessments [53].

The use of deep learning in facial expression analysis can enhance personalized medicine. By integrating facial expression data with other clinical information (e.g., medical history and cognitive tests), algorithms can help create tailored treatment plans that are specific to each patient’s condition and disease stage. This personalized approach could optimize therapeutic strategies, ensuring that patients receive the most effective interventions based on their unique presentation of symptoms.

Implementing deep learning models in clinical practice could also help reduce healthcare disparities by making advanced diagnostic tools more accessible to underserved populations. In remote or resource-limited settings, facial expression recognition systems can provide affordable, effective screening without expensive equipment or specialist expertise. For example, patients in rural areas could use mobile devices equipped with facial expression analysis software for preliminary assessments, with results reviewed remotely by healthcare professionals [54].

Neurological disorders often require multidisciplinary care, including neurologists, speech therapists, psychologists, and rehabilitation specialists. Facial expression recognition tools could serve as a valuable bridge for coordinating care by providing objective data on facial dynamics and helping clinicians monitor patients’ emotional well-being, cognitive function, and motor control over time. This data could be shared across the care team to improve communication and ensure that all aspects of the patient’s condition are addressed holistically.

Integrating facial expression recognition into clinical trials could improve the monitoring of patient responses to experimental treatments. By tracking changes in facial expressions over time, researchers could gain objective insights into how patients respond to interventions, especially in conditions where self-reporting is unreliable (e.g., dementia). This could yield more robust, real-time data collection during trials, which is essential for evaluating the efficacy of new therapies.

In conclusion, the application of deep learning algorithms for facial expression recognition in neurological disorders offers a range of clinical benefits. These technologies have the potential to revolutionize diagnostic practices, offering earlier, more accurate, and non-invasive detection, enhancing patient care, and improving treatment outcomes. As technology advances and becomes more integrated into clinical workflows, it could lead to more efficient, accessible, and personalized care for patients with neurological conditions [55].

While this study provides valuable insights into the potential of deep learning algorithms for detecting facial expression changes associated with neurological disorders, it has several limitations. First, only peer-reviewed, open-access articles in English were included, which may have excluded relevant research published in other languages, behind paywalls, or in databases that were not searched. Second, the included studies varied widely in the neurological conditions studied, sample sizes, and methodologies, and the lack of standardized data sets and inconsistent reporting of participant demographics, including age and sex distributions, further limited the generalizability of the findings.

The resulting high heterogeneity across the included studies is a notable challenge for interpreting the results. It stems from differences in study methodology, such as the deep learning models used (e.g., CNNs and hybrid approaches), the specific neurological disorders studied, and the facial components analyzed (e.g., full-face expressions vs. specific facial regions, such as the eyes or mouth), as well as from discrepancies in sample sizes, participant demographics, and diagnostic criteria. Although a random-effects model was used to account for between-study variation and the I² statistic to quantify it, the substantial variation in study designs and outcomes remains a limitation. To address this issue, future studies should standardize data collection, including the deep learning models, facial expression recognition protocols, and participant characteristics reported, which would improve the reliability and comparability of findings across studies.
Moreover, the use of alternative performance metrics (such as Pearson correlation coefficients) instead of traditional accuracy rates in some studies made direct comparisons challenging. Finally, while multiple imputation methods were used to handle missing data, potential biases due to incomplete or missing information may persist, affecting the accuracy of the pooled estimates.
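To make the random-effects pooling and I² statistic mentioned above concrete, the following is a minimal sketch in Python. It assumes the DerSimonian-Laird estimator of between-study variance (τ²) and inverse-variance weights, and it pools accuracies on the raw proportion scale with variance p(1 − p)/n; the source does not state which estimator or transformation was used, and the function name `random_effects_pool` and the example numbers are hypothetical, chosen only for illustration.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class PooledResult:
    estimate: float  # random-effects pooled estimate
    ci_low: float    # lower bound of the 95% CI
    ci_high: float   # upper bound of the 95% CI
    tau2: float      # between-study variance (DerSimonian-Laird)
    i2: float        # I^2 in percent


def random_effects_pool(effects: List[float], variances: List[float]) -> PooledResult:
    """Hypothetical helper: DerSimonian-Laird random-effects pooling with Cochran's Q and I^2."""
    k = len(effects)
    if k < 2 or k != len(variances):
        raise ValueError("need at least two studies with matching variances")
    # Fixed-effect (inverse-variance) weights and estimate, needed for Q.
    w = [1.0 / v for v in variances]
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    # Cochran's Q: weighted squared deviations from the fixed-effect estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1
    c = sw - sum(wi * wi for wi in w) / sw
    tau2 = max(0.0, (q - df) / c)
    # I^2: share of total variation attributed to between-study heterogeneity.
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    # Random-effects weights add tau^2 to each within-study variance.
    w_star = [1.0 / (v + tau2) for v in variances]
    sws = sum(w_star)
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sws
    se = math.sqrt(1.0 / sws)
    return PooledResult(pooled, pooled - 1.96 * se, pooled + 1.96 * se, tau2, i2)


if __name__ == "__main__":
    # Hypothetical study accuracies and sample sizes, not data from this review.
    accs = [0.99, 0.937, 0.732]
    ns = [100, 200, 150]
    result = random_effects_pool(accs, [p * (1 - p) / n for p, n in zip(accs, ns)])
    print(result)
```

Because τ² is added to every study's variance, a large τ² flattens the weights, so small studies gain influence relative to a fixed-effect model. Many meta-analyses of proportions first apply a logit or Freeman-Tukey transformation; the raw scale is used here only to keep the sketch short.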

Another critical limitation not yet widely addressed in this field is the privacy and security of facial expression data, particularly in clinical applications involving sensitive neurological conditions. While a few studies, such as that of Jiang et al., have explored privacy-preserving frameworks using homomorphic encryption for Parkinson’s disease monitoring [14], most deep learning models for facial expression recognition lack built-in privacy safeguards. This gap is concerning given the sensitive nature of facial data and the ethical implications of real-time patient monitoring. Moreover, model compression and optimization strategies, such as pruning, quantization, and knowledge distillation, remain underutilized in the reviewed literature, despite their potential to make FER models more efficient and deployable in low-resource or mobile clinical settings.

The recent review by Jafari et al. [56] highlights the growing relevance of lightweight, privacy-aware transfer learning models in biomedical contexts. Incorporating these methods into FER systems could improve model generalizability, reduce computational cost, and mitigate data-sharing risks in multicenter research and telehealth deployments.

Future research should focus on developing standardized data sets encompassing a wide range of neurological disorders to ensure better comparability across studies. In addition, future studies should explore the integration of multimodal data (e.g., speech, physiological signals, and neuroimaging) with facial expression recognition models to enhance diagnostic accuracy and robustness. More efforts are needed to refine deep learning models, improve their generalizability across different patient populations, and reduce biases related to demographic variations. Advanced techniques such as Bayesian models or maximum likelihood estimation could also improve the handling of missing data, thereby increasing the reliability of pooled estimates. Finally, as the field progresses, incorporating real-time facial expression recognition tools in clinical settings could provide more timely and accurate assessments of neurological conditions, offering significant benefits for patient care.

A critical challenge in deploying deep learning-based facial expression recognition (FER) systems in clinical neurology lies in ensuring data privacy and security. The sensitive nature of facial data necessitates robust privacy-preserving mechanisms, yet only a limited number of studies have explored this. For instance, Zhang et al. developed a wearable, depth-sensing FER device that analyzes facial skin deformation without capturing identifiable features, thereby maintaining patient anonymity and allowing on-device inference with no data transmission to external servers [57]. Similarly, Jiang et al. introduced a privacy-aware Parkinson’s diagnostic system employing partial homomorphic encryption to perform facial analysis directly on encrypted data, showcasing the potential of edge computing in safeguarding patient confidentiality [14].
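The idea of analyzing data "directly on encrypted data" can be illustrated with a toy version of the Paillier cryptosystem, a well-known partially (additively) homomorphic scheme. This is a swapped-in example: the source does not say which scheme Jiang et al. used, and the helper names (`keygen`, `encrypt`, `encrypted_linear_score`, etc.) are hypothetical. The sketch assumes integer-valued features and non-negative integer weights, so a server can compute a linear score Σ wᵢxᵢ without ever seeing the features; the tiny primes make it insecure and suitable only for demonstration.

```python
import math
import secrets
from typing import List, Tuple

PrivateKey = Tuple[int, int]  # (lambda, mu)


def keygen(p: int, q: int) -> Tuple[int, PrivateKey]:
    """Toy Paillier key generation with g = n + 1 (primes far too small for real use)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # modular inverse; valid because g = n + 1
    return n, (lam, mu)


def encrypt(n: int, m: int) -> int:
    """Encrypt integer 0 <= m < n with fresh randomness r coprime to n."""
    if not 0 <= m < n:
        raise ValueError("plaintext out of range")
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n: int, priv: PrivateKey, c: int) -> int:
    lam, mu = priv
    x = pow(c, lam, n * n)
    return ((x - 1) // n) * mu % n  # L(x) = (x - 1) / n, then multiply by mu


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return (c1 * c2) % (n * n)


def mul_plain(n: int, c: int, k: int) -> int:
    """Raising a ciphertext to a plaintext power k >= 0 multiplies the plaintext by k."""
    return pow(c, k, n * n)


def encrypted_linear_score(n: int, enc_features: List[int], weights: List[int]) -> int:
    """Server side: computes an encryption of sum(w_i * x_i) from ciphertexts only."""
    acc = encrypt(n, 0)
    for c, w in zip(enc_features, weights):
        acc = add_encrypted(n, acc, mul_plain(n, c, w))
    return acc
```

In this division of roles, only the key holder (for example, the patient's device) can decrypt the score; the server handles ciphertexts throughout. Additive schemes like this support sums and plaintext-scalar products but not arbitrary nonlinear layers, which is one reason partial homomorphic encryption is usually paired with simplified models or split computation.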

In addition to privacy, optimization for real-world implementation remains underexplored. Many high-performing FER models require significant computational resources, posing barriers in low-resource settings or mobile deployments. Li et al. reviewed model compression techniques, such as pruning, quantization, and knowledge distillation, which can reduce model size and improve efficiency with minimal performance trade-offs [58]. Likewise, Surianarayanan et al. emphasized the role of lightweight neural architectures and memory-efficient processing for edge-AI applications like FER in clinical monitoring. These strategies are essential for scaling FER tools into routine clinical use without sacrificing speed or accessibility [58, 59].
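Two of the compression techniques named above, magnitude pruning and post-training quantization, can be sketched in a few lines. The following minimal Python example operates on a flat list of weights rather than a real FER network; the function names are hypothetical, and the scheme shown (unstructured magnitude pruning plus symmetric per-tensor int8 quantization) is one common variant, not necessarily the one discussed by Li et al. Knowledge distillation is not shown because it requires a full training loop.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Hypothetical helper: zero out the smallest-magnitude fraction `sparsity` of weights."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    # Rank indices by absolute value; the first k are pruned.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:k])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Hypothetical helper: symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.5, -0.02, 0.3, -0.8, 0.01, 0.12]  # illustrative weights
    p = magnitude_prune(w, 0.5)
    q, s = quantize_int8(p)
    print(p, q, dequantize(q, s))
```

Storing each weight as an int8 code instead of a 32-bit float cuts weight storage roughly fourfold, and the rounding error per weight is at most half the scale. In practice, pruning is usually followed by fine-tuning, and frameworks such as PyTorch and TensorFlow Lite provide their own quantization tooling.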

Furthermore, federated learning presents a compelling approach for enabling collaborative model training across institutions without compromising patient privacy. Rieke et al. outlined how federated learning can facilitate decentralized data utilization, making it particularly well-suited for sensitive healthcare applications [60]. Building on this, Zhuang et al. discussed combining foundation models with federated architectures to manage data heterogeneity and optimize communication efficiency. Applying such frameworks to FER could accelerate progress in building generalizable, privacy-conscious diagnostic systems [61].
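The core loop of federated learning can be sketched with federated averaging (FedAvg), a widely used aggregation rule. This is an illustrative assumption, not the specific framework described by Rieke et al. or Zhuang et al.: a toy linear model stands in for an FER network, and the function names and site names are hypothetical. Each site trains on its own data, and only the updated weights and local sample count leave the site.

```python
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[List[float], float]  # (feature vector, target)


def fed_avg(updates: Sequence[Tuple[List[float], int]]) -> List[float]:
    """FedAvg aggregation: average client weights, weighted by local sample count."""
    if not updates:
        raise ValueError("no client updates")
    dim = len(updates[0][0])
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    merged = [0.0] * dim
    for weights, n in updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            merged[i] += w * n / total
    return merged


def local_train(weights: List[float], data: List[Sample],
                lr: float = 0.1, epochs: int = 5) -> List[float]:
    """Hypothetical local step: per-sample gradient descent on squared error of a linear model."""
    w = list(weights)
    for _ in range(epochs):
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w


def federated_round(global_w: List[float], sites: Dict[str, List[Sample]]) -> List[float]:
    """One round: every site trains locally; only (weights, count) are sent for aggregation."""
    updates = [(local_train(global_w, data), len(data)) for data in sites.values()]
    return fed_avg(updates)


if __name__ == "__main__":
    # Two hypothetical institutions whose private data follow y = 2x.
    sites = {
        "site_a": [([1.0], 2.0), ([2.0], 4.0)],
        "site_b": [([0.5], 1.0), ([1.5], 3.0), ([3.0], 6.0)],
    }
    w = [0.0]
    for _ in range(20):
        w = federated_round(w, sites)
    print(w)
```

Weighting by sample count keeps larger sites from being underrepresented, but it does not by itself guarantee privacy, since model updates can leak information; deployments commonly add secure aggregation or differential privacy on top.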

Together, these advancements underscore the need for future FER research to move beyond accuracy benchmarks and prioritize ethical deployment, computational efficiency, and cross-institutional scalability.

This systematic review and meta-analysis highlights the considerable promise of deep learning (DL), particularly convolutional neural networks (CNNs), in detecting facial expression changes associated with a wide spectrum of neurological disorders. The pooled accuracy of 89.25% and consistent high performance in disorders such as dementia and Bell’s palsy affirm that facial expression recognition (FER) can serve as a valuable non-invasive biomarker for neurological screening, diagnosis, and monitoring.

The clinical implications of these findings are substantial. DL-powered FER systems could offer a scalable, low-cost alternative to traditional neurological assessments, with potential applications in telemedicine, continuous monitoring, and early detection, particularly in resource-limited or underserved settings. Moreover, integrating FER with multimodal data (e.g., speech, eye tracking, or physiological signals), model compression techniques such as quantization, and privacy-preserving approaches such as federated learning could further enhance model performance, privacy, and deployment feasibility.

By mapping model performance across different neurological conditions and identifying key architectural and methodological drivers of success, this study not only synthesizes current capabilities but also lays a foundation for future research and innovation. As the field progresses, DL-based FER tools are poised to become integral to next-generation neurological diagnostics—offering clinicians objective, interpretable, and real-time insights into complex neurocognitive and neuromotor disorders.

The data is available upon reasonable request from the corresponding author.

No institution supported or funded this study.

Not applicable.

Not applicable.

The authors declare no competing interests.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

Yoonesi, S., Abedi Azar, R., Arab Bafrani, M. et al. Facial expression deep learning algorithms in the detection of neurological disorders: a systematic review and meta-analysis. BioMed Eng OnLine 24, 64 (2025). https://doi.org/10.1186/s12938-025-01396-3


DOI: https://doi.org/10.1186/s12938-025-01396-3