BBC threatens AI firm with legal action over unauthorised content use

Pointing to findings of significant issues with representation of BBC content in some Perplexity AI responses analysed, it said such output fell short of BBC Editorial Guidelines around the provision of impartial and accurate news.

"It is therefore highly damaging to the BBC, injuring the BBC's reputation with audiences - including UK licence fee payers who fund the BBC - and undermining their trust in the BBC," it added.

Chatbots and image generators that can generate content in response to simple text or voice prompts in seconds have swelled in popularity since OpenAI launched ChatGPT in late 2022.

But their rapid growth and improving capabilities have prompted questions about their use of existing material without permission.

Much of the material used to develop generative AI models has been pulled from a massive range of web sources using bots and crawlers, which automatically extract site data.

The rise in this activity, known as web scraping, recently prompted British media publishers to join calls by creatives for the UK government to uphold protections around copyrighted content.

Many organisations, including the BBC, use a file called "robots.txt" in their website code to try to block bots and automated tools from extracting data en masse for AI.

It instructs bots and web crawlers to not access certain pages and material, where present.

But compliance with the directive remains voluntary and, according to some reports, bots do not always respect it.
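As a rough illustration of how a well-behaved crawler might consult these rules before fetching a page, here is a minimal sketch using Python's standard `urllib.robotparser` module. The bot name `ExampleAIBot`, the rules and the URLs are hypothetical and are not taken from any real site's robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the bot name and paths are illustrative only.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def is_allowed(user_agent: str, url: str) -> bool:
    """Return True if the rules above permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # The named bot is disallowed everywhere; other bots only under /private/.
    print(is_allowed("ExampleAIBot", "https://example.com/news"))
    print(is_allowed("OtherBot", "https://example.com/news"))
    print(is_allowed("OtherBot", "https://example.com/private/page"))
```

The check runs inside the crawler itself, and nothing in the file can stop a bot that skips it, which is why compliance is voluntary.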

The BBC said in its letter that while it disallowed two of Perplexity's crawlers, the company "is clearly not respecting robots.txt".

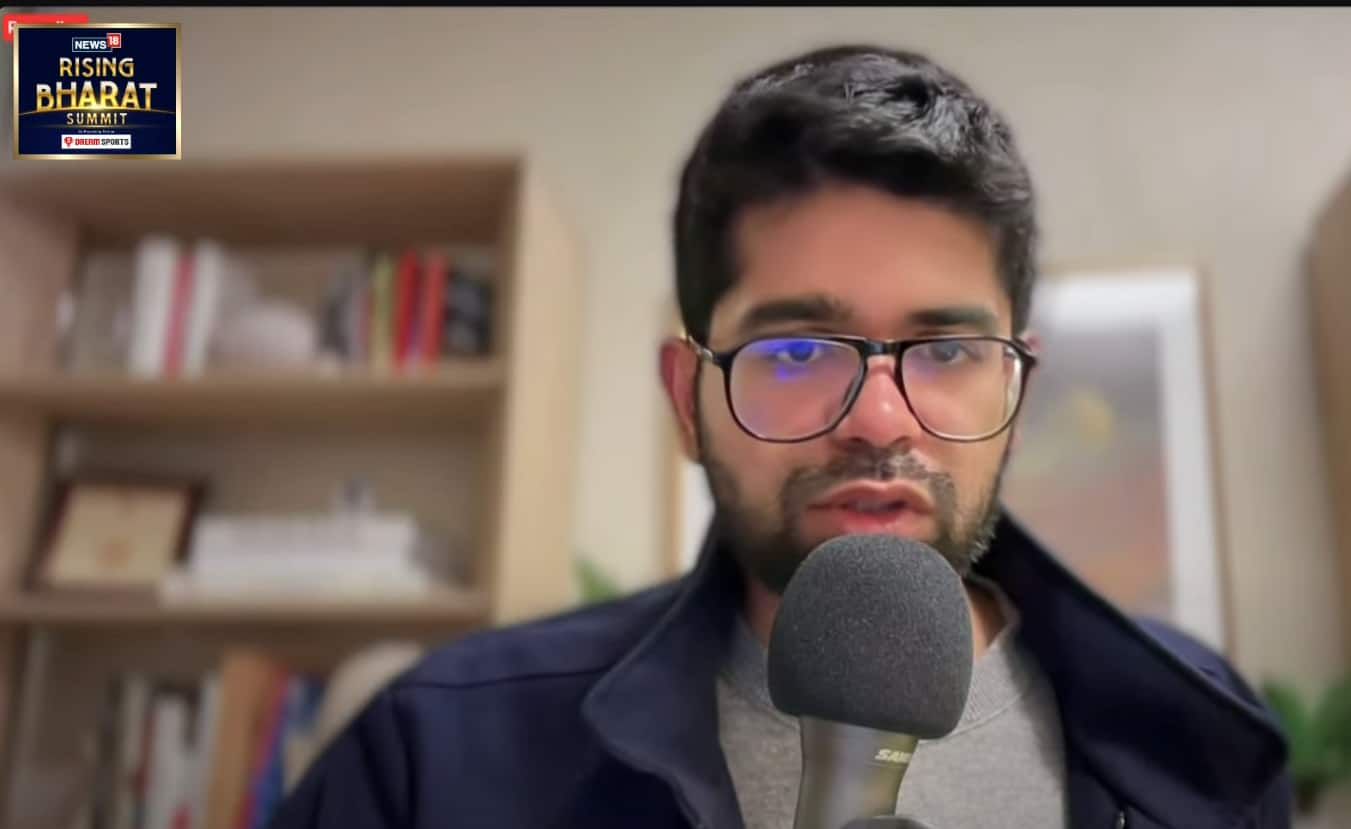

In an interview with Fast Company last June, Mr Srinivas denied accusations that Perplexity's crawlers ignored robots.txt instructions.

Perplexity also says that because it does not build foundation models, it does not use website content for AI model pre-training.

The company's AI chatbot, which describes itself as an "answer engine", has become a popular destination for people looking for answers to common or complex questions.

It says on its website that it does this by "searching the web, identifying trusted sources and synthesising information into clear, up-to-date responses".

It also advises users to double-check responses for accuracy - a common caveat accompanying AI chatbots, which are known to sometimes state false information in a matter-of-fact, convincing way.

In January, Apple suspended an AI feature that generated false headlines for BBC News app notifications when summarising groups of them for iPhone users, following BBC complaints.