Apple's AGI Research Paper Sparks Debate in AI Community

A recent research paper from Apple, titled "The Illusion of Thinking," has ignited a significant debate within the generative AI community about the true reasoning capabilities of large AI models and the future direction of artificial general intelligence. Published earlier this week, the paper asserts that even the most advanced large reasoning models, such as OpenAI's o3-mini, DeepSeek's R1, Anthropic's Claude 3.7 Sonnet, and Google's Gemini Flash, do not genuinely think or reason in a human-like way. Instead, Apple's research suggests these models mainly excel at pattern recognition and mimicry, generating responses that appear intelligent but lack true comprehension or conceptual understanding.

The study used controlled puzzle environments, including the classic Tower of Hanoi puzzle, to systematically evaluate the models' reasoning abilities as problem complexity increased. The findings indicated that while the models could handle simple and moderately complex tasks, they failed completely on high-complexity problems, despite having sufficient computational resources. According to the authors, this points to a fundamental limitation in the models' ability to reason beyond learned patterns.

Apple's findings have drawn support from prominent figures in the AI field. Gary Marcus, a cognitive scientist and vocal skeptic of the grand claims surrounding large language models, sees Apple's work as compelling empirical evidence that current models largely repeat patterns learned from vast training datasets, without genuine understanding or true reasoning. Marcus argued that if a billion-dollar AI system cannot solve a problem that AI systems solved in 1957, or that first-semester AI students routinely solve, the prospect of current models like Claude or o3 achieving artificial general intelligence (AGI) seems remote. These arguments echo earlier comments from Meta's chief AI scientist, Yann LeCun, who has also described current AI systems as sophisticated pattern-recognition tools rather than true thinkers.

Despite its strong findings, Apple's paper has also faced criticism, producing a polarized debate. Researchers from Anthropic and San Francisco-based Open Philanthropy published a critique arguing that the study's experimental design was flawed, specifically because it overlooked the models' output limits. In an alternative demonstration, they tested the models on the same problems but allowed them to use code, and all tested models achieved high accuracy. Other AI commentators and researchers, including Matthew Berman, have echoed this critique, noting that while state-of-the-art models failed the Tower of Hanoi puzzle beyond eight discs when answering in natural language alone, they solved it flawlessly at seemingly unlimited complexity when permitted to write code.
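
To illustrate why output limits matter in this debate, consider how the puzzle scales. The optimal Tower of Hanoi solution for n discs takes 2^n − 1 moves, so writing out every move in natural language grows exponentially: 10 discs require 1,023 moves and 15 discs require 32,767. A short program, by contrast, stays the same size no matter how many discs there are. The Python sketch below uses the textbook recursive algorithm. It is purely illustrative and is not the code used by Apple or by the critique's authors; the function names `hanoi` and `is_valid` and the peg labels are hypothetical.

```python
from typing import List, Tuple

# A move is (disc number, from peg, to peg); disc 1 is the smallest.
Move = Tuple[int, str, str]


def hanoi(n: int, source: str = "A", target: str = "C", spare: str = "B") -> List[Move]:
    """Return the optimal (2**n - 1 move) solution using the classic recursion."""
    if n == 0:
        return []
    # 1. Move the top n-1 discs out of the way onto the spare peg.
    moves = hanoi(n - 1, source, spare, target)
    # 2. Move the largest disc directly to the target peg.
    moves.append((n, source, target))
    # 3. Move the n-1 discs from the spare peg back on top of the largest disc.
    moves.extend(hanoi(n - 1, spare, target, source))
    return moves


def is_valid(n: int, moves: List[Move], source: str = "A",
             target: str = "C", spare: str = "B") -> bool:
    """Simulate the moves and check the puzzle rules and the final state."""
    pegs = {source: list(range(n, 0, -1)), target: [], spare: []}
    for disc, frm, to in moves:
        # The moved disc must be the top disc of the source peg.
        if not pegs[frm] or pegs[frm][-1] != disc:
            return False
        # A larger disc may never be placed on a smaller one.
        if pegs[to] and pegs[to][-1] < disc:
            return False
        pegs[to].append(pegs[frm].pop())
    # Solved when every disc sits on the target peg in size order.
    return pegs[target] == list(range(n, 0, -1))


if __name__ == "__main__":
    for n in (3, 8, 10, 15):
        moves = hanoi(n)
        print(n, len(moves), is_valid(n, moves))
```

Running the script prints `3 7 True`, `8 255 True`, `10 1023 True` and `15 32767 True`. The move list is exactly as long as a complete natural-language answer would have to be, which is the substance of the critics' argument that long answers can hit output limits before the reasoning itself breaks down.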

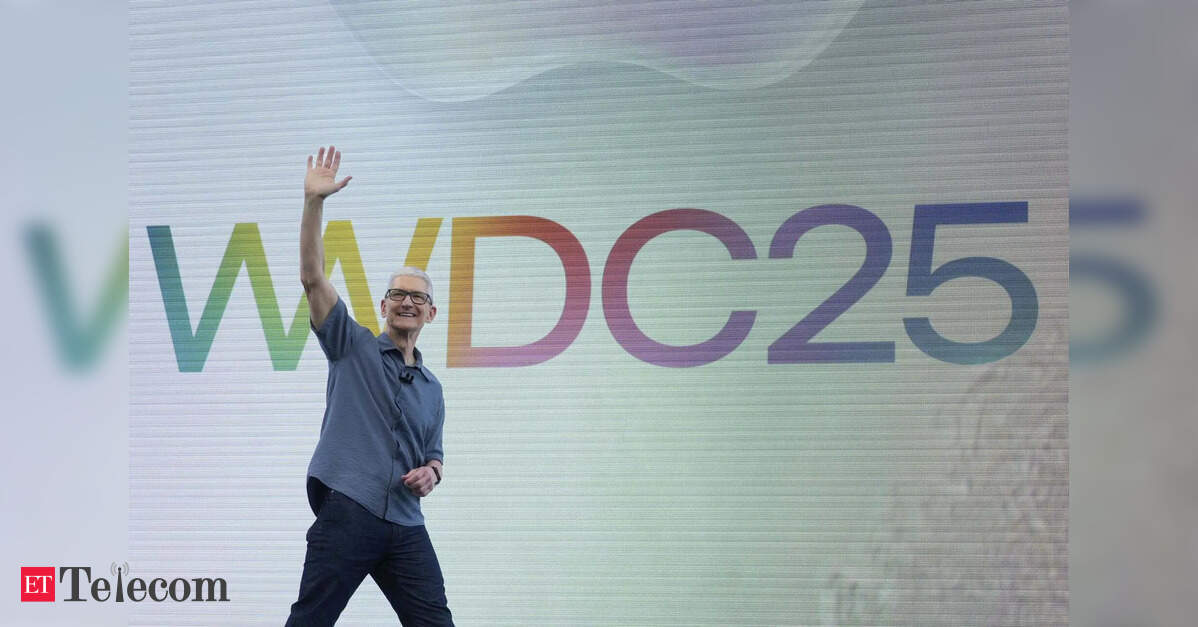

The study also sheds light on Apple's more cautious approach to AI integration compared with rivals such as Google and Samsung, which have aggressively built AI into their products. The research may help explain Apple's reluctance to fully embrace the prevailing industry narrative of rapid AI progress. The paper's release, which coincided with Apple's annual WWDC event where the company unveils its next software updates, also sparked speculation in online forums that the study might be a strategic move to manage expectations about Apple's own AI advances. Nonetheless, practitioners and business users largely contend that these findings do not diminish the immediate usefulness of current AI tools for everyday applications.
